Acta Optica Sinica, 2020, 40 (16): 1611003, Published online: 2020-08-07

Lens-Free Imaging Method Based on Generative Adversarial Networks

Keywords: imaging systems; lens-free imaging; deep learning; generative adversarial network; phase recovery; image reconstruction

摘要

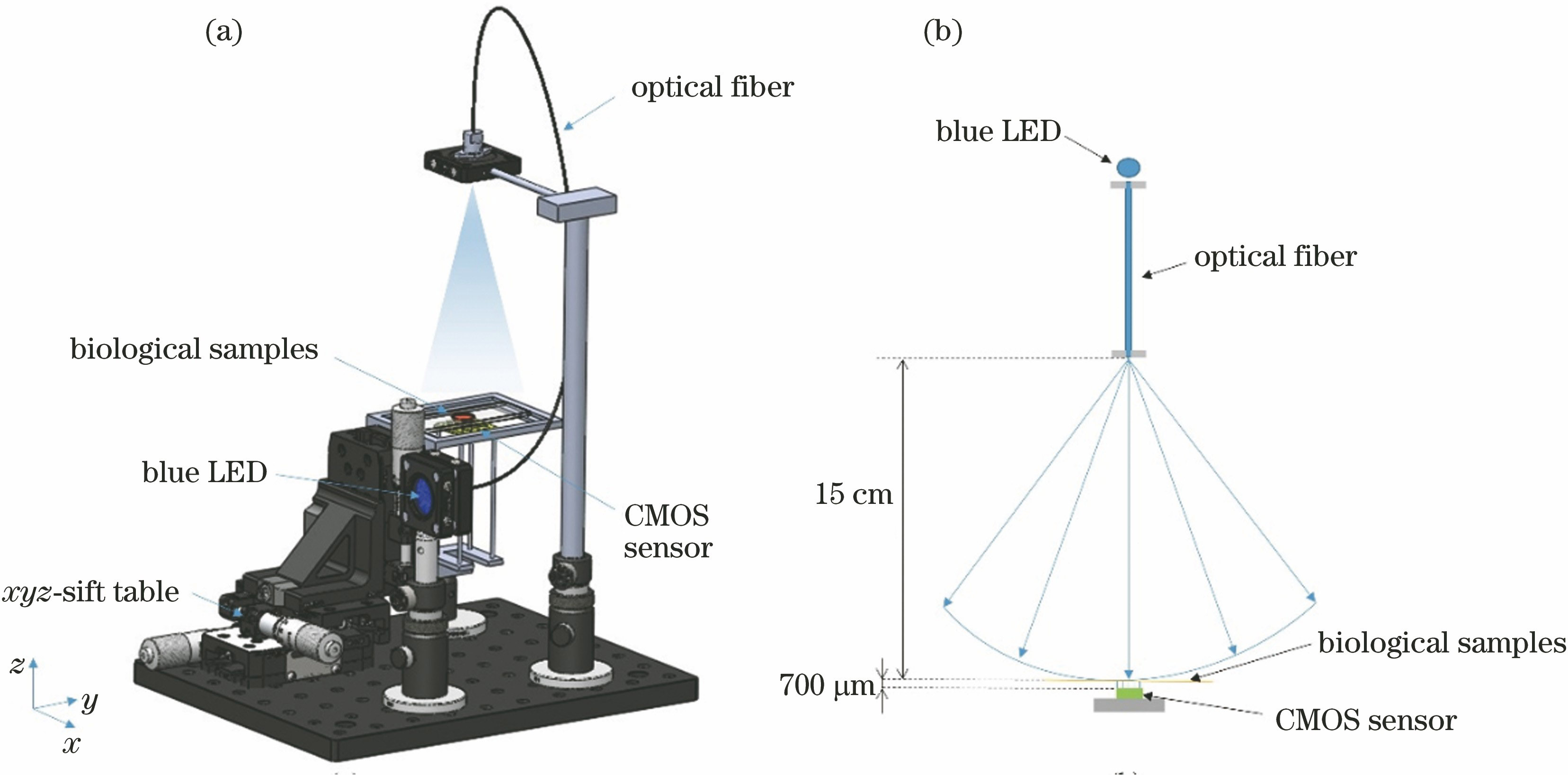

无透镜同轴全息图中包含零级像和孪生像噪声,采用基于菲涅耳衍射模型的方法进行抑制时需要多幅无透镜图像。针对此问题,提出一种基于生成对抗网络(GAN)的无透镜成像方法。首先计算部分相干光照明下无透镜图像的离焦距离,根据该离焦距离反向衍射传播,得到含零级像和孪生像的物平面图像。然后对该物平面图像与作为标准参考的商用显微镜图像进行配准,将配准后的图像作为GAN的训练样本,训练后得到GAN的核函数。最后用训练好的核函数对无透镜图像进行处理,得到清晰的目标图像。实验结果表明,所提方法可对零级像和孪生像有效抑制,图像的对比度和清晰度明显提高,效果可达4×商用显微物镜。所提方法在图像重建阶段只需单张无透镜图像且无需傅里叶变换等复杂操作,成像时间大大缩短。相比于基于卷积神经网络(CNN)的方法,所提方法需要的训练数据量更少,损失函数更易收敛,具有更高的处理效率。

Abstract

Lens-free inline holography contains zero-order image noise and twin image noise. Methods based on the Fresnel diffraction model can suppress these noise, but require many lens-free images. To resolve this problem, this paper proposes a lens-free imaging method based on generative adversarial networks (GAN). First, the defocusing distance of a lens-free image is calculated under partially coherent illumination, and the object plane image with zero-order image and twin image is reconstructed through back diffraction propagation according to the defocusing distance. Next, the object plane image is registered with commercial microscope images which are the gold standard. The registered images are taken as the training inputs of the GAN. Finally, the trained kernel function of the GAN is used for reconstructing the lens-free images, thus obtaining clear target images. The experimental results show that the proposed method can effectively suppress the zero-order image and twin image and significantly improve (up to 4×commercial microscope objective) the contrast and clarity of the image. Because the proposed method requires only a single lens-free image and omits Fourier transforms and other complex operations in the image reconstruction stage, it greatly shortens the imaging time. The proposed method requires fewer training data, better converges the loss function, and has higher processing efficiency than the method based on convolutional neural networks (CNN).
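The back diffraction propagation step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not specify the propagation kernel, so the sketch below swaps in the standard angular spectrum method under fully coherent illumination; the function name `angular_spectrum_propagate`, the wavelength, the pixel size, the defocus distance, and the synthetic test object are all assumptions chosen for illustration, not values from the paper.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_size, distance):
    """Propagate a complex field over `distance` (negative = back-propagation).

    Illustrative angular spectrum implementation; not the authors' code.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel_size)  # spatial frequencies along x (1/m)
    fy = np.fft.fftfreq(ny, d=pixel_size)  # spatial frequencies along y (1/m)
    FX, FY = np.meshgrid(fx, fy)
    # Squared longitudinal frequency; non-positive values are evanescent waves.
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0
    kz = 2 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    # Free-space transfer function, with evanescent components discarded.
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Hypothetical parameters (assumptions, not taken from the paper).
wavelength = 530e-9   # illumination wavelength in metres
pixel_size = 1.67e-6  # sensor pixel pitch in metres
z = 1.0e-3            # defocus distance in metres

# Synthetic object: a weakly absorbing square on a uniform background.
obj = np.ones((64, 64), dtype=complex)
obj[24:40, 24:40] = 0.5

# The sensor records intensity only, so the phase at the sensor plane is lost.
hologram = np.abs(angular_spectrum_propagate(obj, wavelength, pixel_size, z)) ** 2

# Back-propagating the hologram amplitude gives an object-plane image that
# still contains twin-image artefacts, the kind of input the GAN is trained on.
recon = angular_spectrum_propagate(np.sqrt(hologram), wavelength, pixel_size, -z)
```

In this sketch `np.abs(recon)` plays the role of the object-plane image that is later registered with the microscope image. Because the phase is discarded at the sensor, `recon` differs from `obj`, which is the twin-image problem the proposed method targets.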

Chao Zhang, Tao Xing, Zizhen Liu, Haokun He, Hua Shen, Yinxu Bian, Rihong Zhu. Lens-Free Imaging Method Based on Generative Adversarial Networks[J]. Acta Optica Sinica, 2020, 40(16): 1611003.