Laser & Optoelectronics Progress, 2020, 57(4): 041503. Published online: 2020-02-20

Global Localization for Indoor Mobile Robot Based on Binocular Vision

Keywords: machine vision; binocular vision; indoor mobile robot; global localization; motion area detection; corner extraction

Abstract

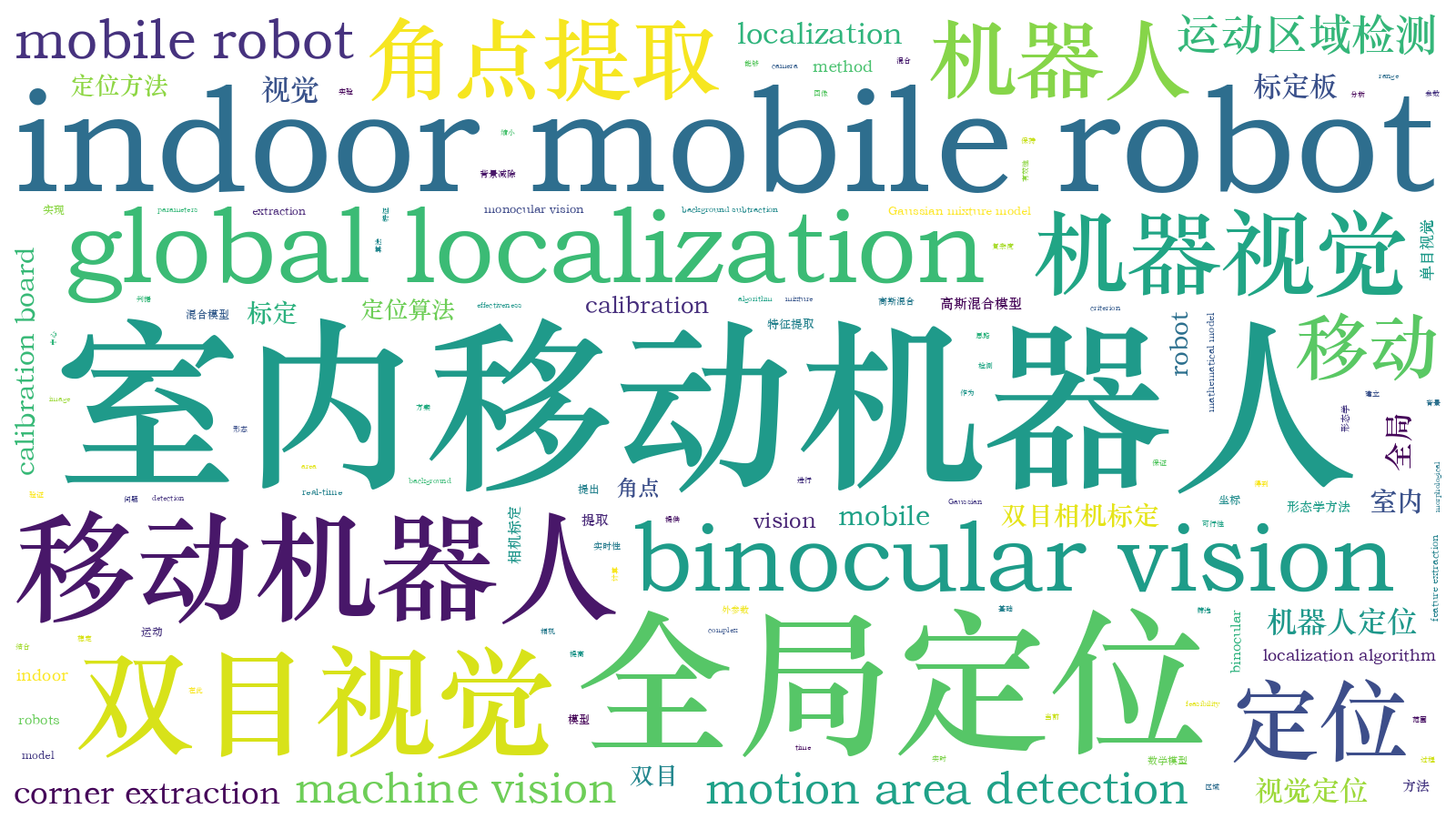

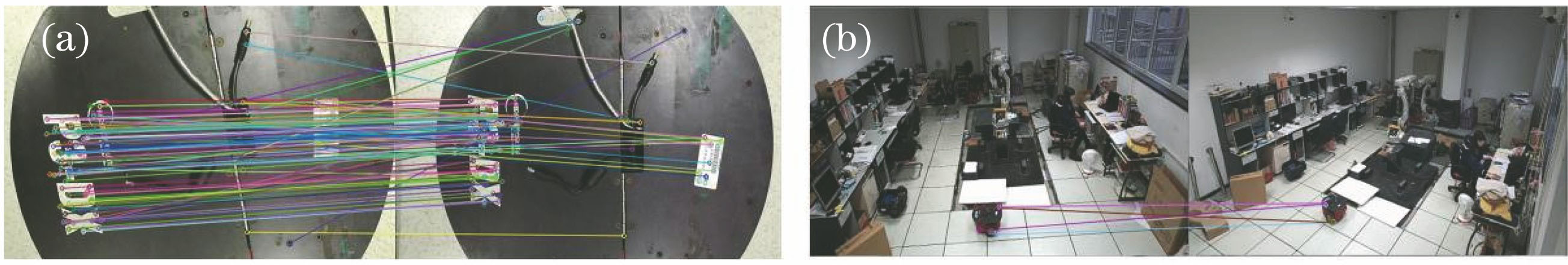


Existing global localization algorithms for indoor mobile robots that rely on monocular vision are highly complex. To address this, this study proposes a global localization method for indoor mobile robots based on binocular vision. The method uses a calibration board to keep feature extraction stable while the robot is moving, and the center of the board serves as the robot's localization point. The board's corner points are then found in three steps:

1. **Motion region detection.** To improve real-time performance, the search range for the corners is narrowed by detecting the robot's motion region with Gaussian mixture model (GMM) background subtraction followed by morphological processing.
2. **Corner screening.** The corner points extracted within the robot region are screened against an established calibration-board corner criterion. This yields the image coordinates of the board's four corners.
3. **Localization.** The coordinates of the localization point are computed from the intrinsic and extrinsic parameters obtained by calibrating the binocular camera, together with the global localization mathematical model.

Experiments and analysis verify the feasibility and effectiveness of the proposed method, which offers a new approach to global visual localization of indoor mobile robots.
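The abstract does not spell out the paper's global localization model, so the following is only a minimal sketch of the final step under stated assumptions. It assumes the following:

- The stereo pair is ideal and rectified (pinhole model, no residual distortion, right camera offset along the x axis by the baseline).
- The left camera's extrinsics follow the common world-to-camera convention, P_c = R·P_w + t.

Under these assumptions, each of the four screened corners is triangulated from its disparity (Z = f·B/d) and mapped into the world frame. The localization point is then taken as the mean of the four world-frame corners. For a rectangular board, the mean of the four vertices coincides with the intersection of the diagonals, i.e. the board center. The names `RectifiedStereo`, `camera_to_world` and `board_center` are hypothetical, and the usage numbers are synthetic.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]


@dataclass
class RectifiedStereo:
    """Hypothetical parameters of a rectified, horizontally aligned stereo pair."""
    f: float         # focal length in pixels (shared by both views after rectification)
    cx: float        # principal point x, pixels
    cy: float        # principal point y, pixels
    baseline: float  # distance between the two optical centres (e.g. mm)

    def triangulate(self, left: Point2, right: Point2) -> Point3:
        """Back-project one matched left/right pixel pair into the left-camera frame."""
        disparity = left[0] - right[0]
        if disparity <= 0:
            raise ValueError("disparity must be positive for a point in front of the rig")
        z = self.f * self.baseline / disparity   # depth from disparity: Z = f * B / d
        x = (left[0] - self.cx) * z / self.f      # pinhole back-projection
        y = (left[1] - self.cy) * z / self.f
        return (x, y, z)


def camera_to_world(p_c: Point3, R: Matrix3, t: Sequence[float]) -> Point3:
    """Invert assumed world-to-camera extrinsics P_c = R P_w + t, i.e. P_w = R^T (P_c - t)."""
    d = [p_c[i] - t[i] for i in range(3)]
    # Row i of R^T is column i of R.
    return tuple(sum(R[j][i] * d[j] for j in range(3)) for i in range(3))


def board_center(stereo: RectifiedStereo,
                 left_corners: Sequence[Point2],
                 right_corners: Sequence[Point2],
                 R: Matrix3,
                 t: Sequence[float]) -> Point3:
    """Localization point as the mean of the four triangulated board corners (world frame)."""
    if len(left_corners) != 4 or len(right_corners) != 4:
        raise ValueError("expected exactly four matched corners per image")
    corners = [camera_to_world(stereo.triangulate(l, r), R, t)
               for l, r in zip(left_corners, right_corners)]
    return tuple(sum(c[i] for c in corners) / 4 for i in range(3))


if __name__ == "__main__":
    stereo = RectifiedStereo(f=800.0, cx=320.0, cy=240.0, baseline=120.0)
    left = [(280, 220), (360, 220), (360, 260), (280, 260)]
    right = [(232, 220), (312, 220), (312, 260), (232, 260)]  # disparity 48 px -> Z = 2000
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(board_center(stereo, left, right, identity, [-1000.0, -500.0, 0.0]))
    # → (1000.0, 500.0, 2000.0)
```

Averaging all four corners, rather than triangulating a single detected center, uses every screened corner and spreads per-corner pixel noise across the estimate. In practice, the corners would first be undistorted and rectified with the calibrated intrinsic and extrinsic parameters, which this sketch takes as already done.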

Peng Li, Yangyang Zhang. Global Localization for Indoor Mobile Robot Based on Binocular Vision[J]. Laser & Optoelectronics Progress, 2020, 57(4): 041503.