Laser & Optoelectronics Progress, 2019, 56(14): 141008. Published online: 2019-07-12

Convolutional Neural Network-Based Dimensionality Reduction Method for Image Feature Descriptors Extracted Using Scale-Invariant Feature Transform

Keywords: image processing; neural network; image local feature point extraction; dimensionality reduction

摘要

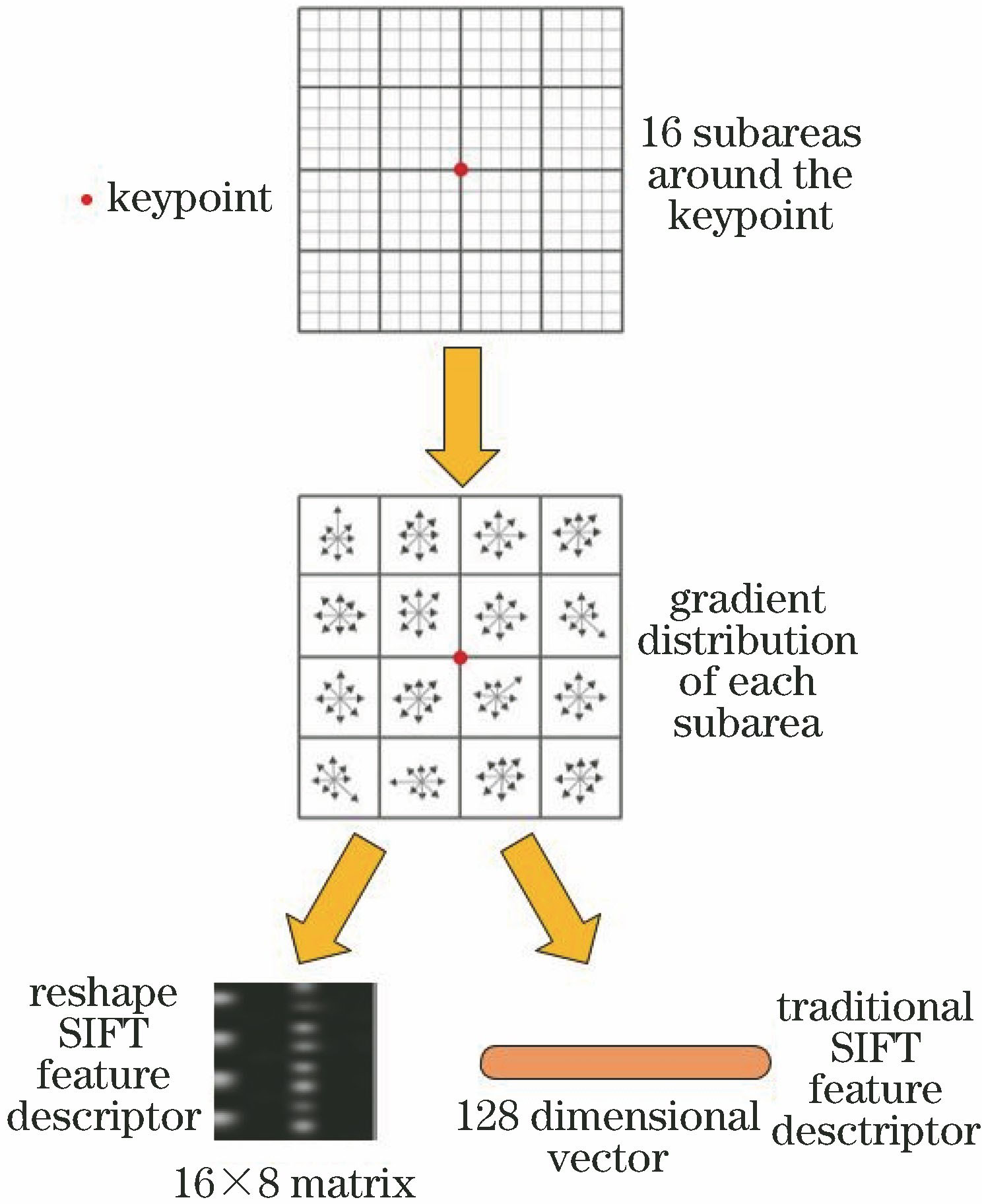

针对128维尺度不变特征变换(SIFT)特征描述子进行图像局部特征点提取时匹配时间过长,以及三维重建进行特征点配准时的应用局限性,结合深度学习方法,提出一种基于卷积神经网络的SIFT特征描述子降维方法。该方法利用卷积神经网络强大的学习能力实现了SIFT特征描述子降维,同时保留了良好的仿射变换不变性。实验结果表明,经过训练的卷积神经网络将SIFT特征描述子降至32维时,新的特征描述子在旋转、尺度、视点以及光照等仿射变换下均具有良好的匹配效果,匹配效率比传统SIFT特征描述子效率提高了5倍。

Abstract

Since local feature descriptors extracted from an image using the traditional scale-invariant feature transform (SIFT) method are 128-dimensional vectors, the matching time is too long, which limits their applicability in some cases such as feature point matching based on the three-dimensional reconstruction. To tackle this problem, a SIFT feature descriptor dimensionality reduction method based on a convolutional neural network is proposed. The powerful learning ability of the convolutional neural network is used to realize the dimensionality reduction of SIFT feature descriptors while maintaining their good affine transformation invariance. The experimental results demonstrate that the new feature descriptors obtained using the proposed method generalize well against affine transformations, such as rotation, scale, viewpoint, and illumination, after reducing their dimensionality to 32. Furthermore, the matching speed of the feature descriptors obtained using the proposed method is nearly five times faster than that of the SIFT feature descriptors.
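To make the link between descriptor length and matching time concrete, here is a minimal sketch that assumes brute-force nearest-neighbour matching with a ratio test. The abstract does not say which matcher the authors used, so the matcher, the function names, and the 0.8 threshold are all illustrative assumptions. The sketch does not implement the convolutional network; it only shows that the arithmetic per descriptor comparison grows linearly with the number of dimensions.

```python
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]


def squared_l2(a: Descriptor, b: Descriptor) -> float:
    """Squared Euclidean distance; the work is proportional to len(a)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_nearest(
    queries: Sequence[Descriptor],
    references: Sequence[Descriptor],
    ratio: float = 0.8,  # assumed ratio-test threshold, not taken from the paper
) -> List[Tuple[int, int]]:
    """Brute-force nearest-neighbour matching with a ratio test."""
    matches: List[Tuple[int, int]] = []
    for qi, q in enumerate(queries):
        # Rank every reference descriptor by its distance to the query.
        ranked = sorted((squared_l2(q, r), ri) for ri, r in enumerate(references))
        if len(ranked) < 2:
            continue  # the ratio test needs two candidates
        (d1, best), (d2, _) = ranked[0], ranked[1]
        # Distances are squared, so the threshold is squared as well.
        if d1 < (ratio ** 2) * d2:
            matches.append((qi, best))
    return matches


def distance_terms(n_query: int, n_ref: int, dim: int) -> int:
    """Count of per-component difference terms in one brute-force pass."""
    return n_query * n_ref * dim


# Toy 4-dimensional descriptors, for illustration only.
refs = [[0.0, 0.0, 1.0, 0.0], [1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
queries = [[0.9, 0.1, 0.0, 0.0], [0.0, 0.0, 0.9, 0.1]]
print(match_nearest(queries, refs))

# Ratio of arithmetic terms for 128-D versus 32-D descriptors.
print(distance_terms(1000, 1000, 128) / distance_terms(1000, 1000, 32))
```

Under this assumption, going from 128 to 32 dimensions cuts the per-comparison arithmetic by a factor of four. The nearly fivefold speedup reported in the abstract is the authors' measurement, and this sketch does not attempt to reproduce it.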

Honghao Zhou, Weining Yi, Lili Du, Yanli Qiao. Convolutional Neural Network-Based Dimensionality Reduction Method for Image Feature Descriptors Extracted Using Scale-Invariant Feature Transform[J]. Laser & Optoelectronics Progress, 2019, 56(14): 141008.