Laser & Optoelectronics Progress, 2019, 56 (21): 211503, Published online: 2019-11-02

Heel-Strike Event Detection Algorithm Based on Convolutional Neural Networks

Keywords: machine vision; gait event detection; consecutive-silhouette difference maps; convolutional neural network

摘要

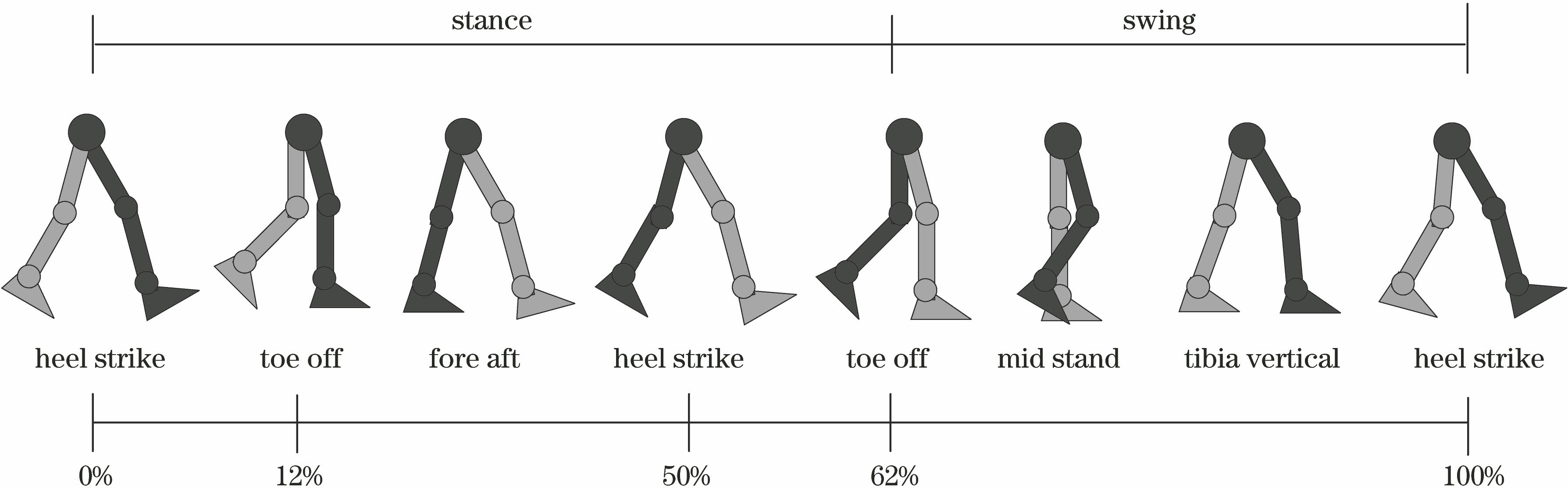

为解决基于可穿戴传感器的步态事件检测技术对个体配合程度依赖性大、能耗高、应用条件苛刻等问题,提出一种基于机器视觉的足跟着地事件检测算法,可以在不需要参与者合作的情况下,利用普通摄像机实现对足跟着地事件的精确检测。提出一种新颖的特征,即连续轮廓帧差图(CSD-maps)来表达步态模式。一个连续轮廓帧差图可以将视频帧中行人连续的轮廓二值图编码到一张特征图中,使其蕴含丰富的步态时空信息。不同数量的行人连续轮廓帧差会产生不同的连续轮廓帧差图。利用卷积神经网络对连续轮廓帧差图进行特征提取和足跟着地事件分类。在公开数据库上,对124名受试者在5个视角下不同穿着状态的视频数据进行训练和测试,实验结果表明,该方法具有良好的检测精度,识别准确率达93%以上。

Abstract

In this study, we propose an algorithm based on machine vision to detect heel-strike events for solving the problem that the gait recognition technology based on wearable sensors is considerably dependent on the cooperation of participants, with high energy consumption and harsh application conditions. The proposed algorithm can accurately detect heel-strike events using ordinary cameras without the cooperation of participants. Initially, we develop an innovative feature for representing gait patterns by designing a consecutive-silhouette difference map (CSD-map). A CSD-map can encode the binary image of several consecutive pedestrian contours extracted from the video frames and output the combination as a single feature map. Different numbers of consecutive pedestrian contour differences result in different types of CSD-map. Further, a convolutional neural network is used for feature extraction and classification of the imaged heel-strike events. In a public database of video data for training and testing, we find 124 individuals under five angles in different wear conditions, and the experimental results obtained using these images denote the accuracy of our method. The identification accuracy is observed to be greater than 93%.
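The abstract does not give the exact construction of a CSD-map, so the following is only a minimal sketch of the general idea: difference consecutive binary silhouettes, then fold the differences into one map. The names `frame_difference` and `csd_map`, the XOR difference, and the time-increasing weights are assumptions made for illustration and are not taken from the paper.

```python
from typing import List

# Binary mask: 1 = pedestrian pixel, 0 = background.
Silhouette = List[List[int]]


def frame_difference(a: Silhouette, b: Silhouette) -> Silhouette:
    """Pixel-wise XOR of two same-sized binary silhouettes."""
    return [[pa ^ pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def csd_map(silhouettes: List[Silhouette]) -> List[List[float]]:
    """Fold the differences of consecutive silhouettes into one map.

    Assumption: each difference image gets a weight that grows with time,
    so later motion appears brighter. The weighting actually used by the
    authors is not stated in the abstract.
    """
    if len(silhouettes) < 2:
        raise ValueError("need at least two silhouettes")
    n_diffs = len(silhouettes) - 1
    height, width = len(silhouettes[0]), len(silhouettes[0][0])
    out = [[0.0] * width for _ in range(height)]
    for k in range(n_diffs):
        diff = frame_difference(silhouettes[k], silhouettes[k + 1])
        weight = (k + 1) / n_diffs
        for y in range(height):
            for x in range(width):
                if diff[y][x]:
                    # Keep the weight of the most recent change at each pixel.
                    out[y][x] = max(out[y][x], weight)
    return out


# Toy example: a one-pixel "foot" moving right across a 1x4 image.
frames = [[[1, 0, 0, 0]], [[0, 1, 0, 0]], [[0, 0, 1, 0]]]
print(csd_map(frames))  # [[0.5, 1.0, 1.0, 0.0]]
```

Changing the number of silhouettes passed to `csd_map` changes how much motion history one map contains, which mirrors the abstract's remark that different numbers of consecutive differences yield different CSD-maps. In the described pipeline such maps would then be fed to a convolutional neural network for heel-strike classification.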

Zhuorong Li, Kaixuan Wang, Xinlong He, Zhongliang Mi, Yunqi Tang. Heel-Strike Event Detection Algorithm Based on Convolutional Neural Networks[J]. Laser & Optoelectronics Progress, 2019, 56(21): 211503.