Laser & Optoelectronics Progress, 2020, 57 (16): 161008, Published online: 2020-08-05

Image Fusion Based on Residual Learning and Visual Saliency Mapping

Keywords: image processing; image fusion; residual learning; visual saliency mapping; image enhancement; guided filtering

摘要

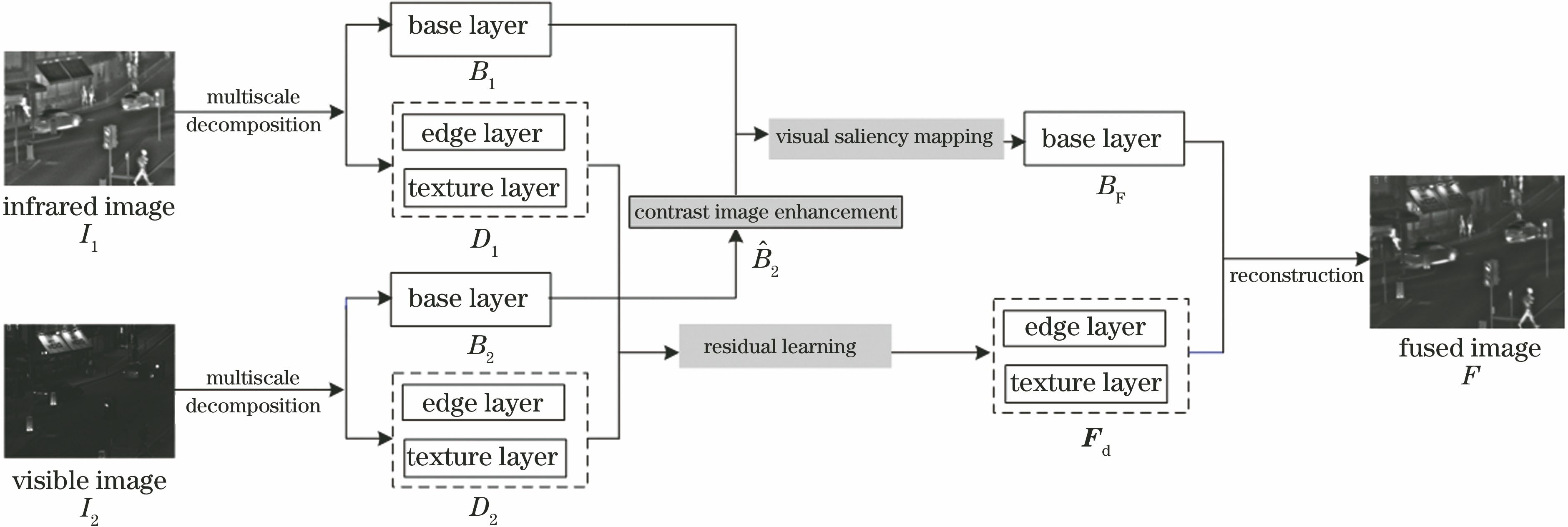

为了提高可见光图像和红外图像的融合图像的细节信息以及保留对比度,提出了一种基于残差学习和视觉显著性映射的多尺度分解图像融合方法。首先,使用高斯滤波器和引导滤波器对图像进行多尺度分解,将其分解为基本层和细节层,其中细节层分为小尺度纹理层和中尺度边缘层。然后,使用提出的改进视觉显著映射方法对基本层进行融合,对低光照图像基本层进行增强处理,使融合图像具有良好的对比度和总体外观。对于细节层,提出了对小尺度纹理层和中尺度边缘层分别进行最值化以及软最大化融合规则的残差网络深度学习融合模型。实验在TNO数据集上将所提算法与最新的6种方法针对离散余弦特征互信息、小波特征互信息、结构相似度和伪影噪声率这4个客观指标进行比较,所提算法在前3个客观指标上有所提升,在伪影噪声率上获得进一步的下降。该算法在保留图像显著特征的同时使融合图像获得了更多的细节纹理信息,具有良好的对比度,且有效地减小了伪影和噪声。

Abstract

To improve the detail information and preserve the contrast of fusion images of visible light images and infrared images, a multi-scale decomposition image fusion method based on residual learning and visual saliency mapping is proposed. First, a Gaussian filter and a guided filter are used to perform multi-scale decomposition, therefore decomposing the image into a basic layer and a detail layer. The detail layer is divided into a small-scale texture layer and a middle-scale edge layer. Then, the proposed improved visual saliency mapping method is used to fuse the base layers, and the base layer of the low-light image is enhanced to make the fused image have good contrast and overall appearance. For the detail layer, a residual network deep learning fusion model is proposed to maximize the fusion rules of the small-scale texture layer and maximize the middle-scale edge layer, respectively. The experiment compares the proposed algorithm with the latest six methods on the four objective indicators of discrete cosine feature mutual information, wavelet feature mutual information, structural similarity, and artifact noise rate on the TNO dataset. The proposed algorithm is improved in the first three objective indicators and further decreased in the artifact noise rate. This algorithm not only keeps the salient features of the image, but also makes the fused image have more detailed texture information and good contrast, therefore effectively reducing artifacts and noise.
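The pipeline summarized in the abstract can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation and makes several loudly stated assumptions: both smoothing stages use a plain Gaussian blur (the paper also uses a guided filter), the maximum-selection and softmax rules are applied directly to layer coefficients rather than to residual-network features, and the base-layer weighting uses a generic global-contrast saliency map rather than the paper's improved visual saliency mapping. All function names and the `sigma` values are hypothetical.

```python
import math

def gaussian_kernel(sigma, radius):
    """1-D Gaussian kernel normalized to sum to 1."""
    w = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(w)
    return [v / total for v in w]

def blur_rows(img, kernel):
    """Convolve every row with the kernel, replicating border pixels."""
    radius, width = len(kernel) // 2, len(img[0])
    return [[sum(k * row[min(max(x + j - radius, 0), width - 1)]
                 for j, k in enumerate(kernel))
             for x in range(width)] for row in img]

def transpose(img):
    return [list(col) for col in zip(*img)]

def gaussian_blur(img, sigma):
    """Separable 2-D Gaussian blur (rows, then columns)."""
    kernel = gaussian_kernel(sigma, max(1, int(3 * sigma)))
    return transpose(blur_rows(transpose(blur_rows(img, kernel)), kernel))

def subtract(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def decompose(img, sigma_small=1.0, sigma_mid=2.0):
    """Return (texture, edge, base) with texture + edge + base == img.
    Assumption: Gaussian smoothing stands in for the guided filter."""
    smooth_small = gaussian_blur(img, sigma_small)
    smooth_mid = gaussian_blur(smooth_small, sigma_mid)
    return subtract(img, smooth_small), subtract(smooth_small, smooth_mid), smooth_mid

def fuse_max(a, b):
    """Maximum-selection rule: keep the coefficient with the larger magnitude."""
    return [[x if abs(x) >= abs(y) else y for x, y in zip(ra, rb)]
            for ra, rb in zip(a, b)]

def fuse_softmax(a, b):
    """Softmax rule: weight each pair by the softmax of the two magnitudes."""
    fused = []
    for ra, rb in zip(a, b):
        row = []
        for x, y in zip(ra, rb):
            m = max(abs(x), abs(y))  # subtract the max for numerical stability
            wx, wy = math.exp(abs(x) - m), math.exp(abs(y) - m)
            row.append((wx * x + wy * y) / (wx + wy))
        fused.append(row)
    return fused

def saliency(img):
    """Generic global-contrast saliency scaled to [0, 1] (not the paper's variant)."""
    pixels = [p for row in img for p in row]
    raw = [[sum(abs(p - q) for q in pixels) for p in row] for row in img]
    peak = max(max(row) for row in raw)
    return [[v / peak if peak > 0 else 0.0 for v in row] for row in raw]

def fuse_base(a, b):
    """Saliency-weighted average: the more salient base layer gets more weight."""
    sa, sb = saliency(a), saliency(b)
    return [[(0.5 + (u - v) / 2) * x + (0.5 - (u - v) / 2) * y
             for x, y, u, v in zip(ra, rb, rsa, rsb)]
            for ra, rb, rsa, rsb in zip(a, b, sa, sb)]

def fuse_images(visible, infrared):
    """Decompose both inputs, fuse each layer with its rule, then sum the layers."""
    tex_v, edge_v, base_v = decompose(visible)
    tex_i, edge_i, base_i = decompose(infrared)
    texture = fuse_max(tex_v, tex_i)
    edge = fuse_softmax(edge_v, edge_i)
    base = fuse_base(base_v, base_i)
    return [[t + e + b for t, e, b in zip(rt, re_, rb)]
            for rt, re_, rb in zip(texture, edge, base)]

# Toy 4x4 inputs: a bright column in the visible image, a hot spot in the infrared one.
visible = [[10.0, 10.0, 10.0, 80.0] for _ in range(4)]
infrared = [[50.0, 50.0, 50.0, 50.0], [50.0, 200.0, 200.0, 50.0],
            [50.0, 200.0, 200.0, 50.0], [50.0, 50.0, 50.0, 50.0]]
fused = fuse_images(visible, infrared)
for row in fused:
    print([round(v, 1) for v in row])
```

Because the three layers are built by successive subtraction, they sum back to the input exactly (up to floating-point rounding), so fusing an image with itself returns that image. In practice an array library would replace the nested lists; they are used here only to keep the sketch self-contained.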

Luoyi Ding, Jin Duan, Yu Song, Yong Zhu, Xiaoshan Yang. Image Fusion Based on Residual Learning and Visual Saliency Mapping[J]. Laser & Optoelectronics Progress, 2020, 57(16): 161008.