Propagation-based incremental triangulation for multiple views 3D reconstruction  Download: 771次

Download: 771次

1. Introduction

There has been an escalation in the amount of work dealing with three-dimensional (3D) reconstruction[13" target="_self" style="display: inline;">–

Over the years, many triangulation methods have been proposed, in particular for the case of two or three views, where robust and fast methods have been developed[11,12]. It is being verified that more observations of the same scene point can improve the estimated position, but this also increases the complexity of the problem[13]. Therefore, the procedure given an arbitrary number of views and noisy observations to perform triangulation is still a difficult task. One of the most well-known triangulation actions is the midpoint method[14], choosing the midpoint of the common perpendiculars to all of the rays as the best position estimation, which is also considered as the fastest one given multiple views by far. Nevertheless, this method is not supposed to be optimal, and the final 3D localization will potentially be inaccurate due to noisy inputs.

For more accurate triangulation performance, the achievement of optimal 3D scene points is given enough attention, but most solve for very small numbers of views, such as two or three views[14]. For two views, a closed-form solution was proposed by Hartley and Sturm[15] and made more efficient and simplified by Lindstrom[16]. These methods yield all stationary points of their cost function, which are then checked to obtain the optimal solution. For three views, the closed-form triangulation method was achieved[17], and they make use of the Grobner basis to solve polynomial equations. Nevertheless, the computational complexity increases exponentially with the number of views, and there is no suitable polynomial solver for more than three views.

To ensure the global optimum, there are also some methods based on convex optimization on the -norm cost function instead of the -norm solution[18]. However, it is known that -norm optimization is extremely vulnerable to outliers[19]. Although some outlier removal method is achieved for -norm in multi-view geometry[20], the computational cost of -norm is considerable, and the convex programs have to be done at each iteration to determine the update direction[21]. Therefore, the -norm method is still a popular alternative to balance the speed and accuracy for multiple-view triangulation.

Based on the -norm methods, state-of-the-art incremental triangulation (INT) problems usually can be solved in two steps, where initialization is achieved within the first few views, and then the sequential image comes to the system one by one to establish a continuous multiple-view triangulation problem. An obvious solution is to collect all of the observations of the 3D point when encountering a coming observation, and then a new multiple-view triangulation action is performed repeatedly[22]. However, it is a time-consuming process to perform multiple-view triangulation with iterative optimization[23,24], especially in large-scale scenarios, where there are usually a significant number of scene points and cameras. The previous work[24] mainly paid attention to the triangulation issue after collecting all of the observational vectors at one time, lacking consideration of the INT and its convergence characteristics. Thus, instead of establishing an unpredictable iteration-based multiple-view cost function when encountering a new feature track one by one, a propagation-based INT method is proposed in this Letter to carve the 3D estimate only with the incoming observation.

2. Notations and Preliminaries

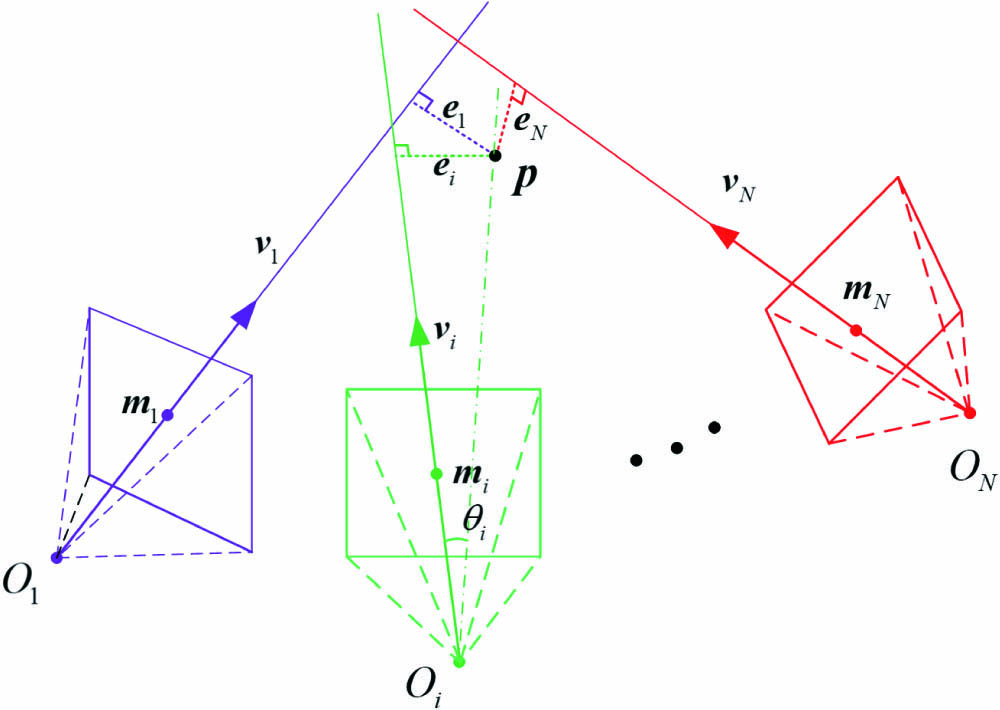

The midpoint method for triangulation has a clear geometric description, where the estimated 3D location is the midpoint of the common perpendicular to the two observation rays given a two-view triangulation. For more than two views, it also means the minimum distances from the 3D point to all of the observation vectors. As shown in Fig. 1, the location of the 3D point can be approached by minimizing the accumulation of the distance .

Thus, the 3D point is obtained by minimizing the sum of squares of the distance for the 3D point to all the observation vectors:where is the camera center, and is the projection distance transformation from the point to the observational vector .

3. Propagation-Based Incremental Triangulation

Although the midpoint method is fast enough for multiple-view triangulation, it is considered inaccurate when the camera views are nearly parallel. Moreover, as depicted in Fig. 1, when the 3D point moves far away from the camera center , the error increases. Nevertheless, all the 3D points that lay on the line coincide with the same observation on the 2D image plane. Therefore, the midpoint method is biased, and it is prone to overestimate the error for the 3D point that is far away from the camera center.

To address the biased estimation of the midpoint method by formulating their optimization problem in terms of the image reprojection error, the angular reprojection error is another popular choice, and it is supposed to own superior adaptability to different camera models[25]. Inspired by these angular reprojection errors methods, a weight that is proportional to the distance between the 3D point and the camera center is proposed. Thus, the unbiased temporal cost function for multiple-view triangulation is reformed as the following:where an explicit weight is assigned by the inversely proportional function to the distance between the 3D point and the camera:

According to Eq. (2) derived from the -norm reprojection error, it is interesting to find that it is a close approximation to the angular error model, where

Thus, the cost function is unbiased for all of the points lying on the same observational vector, regardless of the depth about the 3D point to be estimated. Besides, is considered as a close approximation to exact angular error (as shown in Fig. 1) when obtaining an accurate 3D point estimation. Compared to the optimization problems in terms of the -norm image reprojection error, this optimization model is more robust for multiple triangulation when encountering fisheye or omnidirectional cameras.

To minimize the unbiased view triangulation problem in Eq. (2), its gradient can be derived as

Thus, the target 3D point is derived from , resulting in the following equation:

In most SfM or SLAM applications, it is well known that temporal triangulation is a repeated work when new 2D observations are extracted from incoming images. More exactly, the 3D scene point may be triangulated in the first few images, and then these available 3D points would be applied to estimate the pose of a new coming image by perspective-n-point (PnP)[26], followed by more scene points with triangulation operations repeatedly. During these processes, INT is carried out with the feature track lasting for many frames with the same 3D point.

To address the large-scale and sequential image situations, an efficient INT is expected for multiple-view reconstruction based on the initial 3D point estimation. Fortunately, based on the initial estimation in Eq. (1), the next 3D point can be propagated based on the former when encountering sequential feature tracks without iteration, where

It has been confirmed that the achievable reconstruction error decays quadratically as more views are added to the system[13], and thus INT would improve the accuracy of the 3D scene with more observations. Besides, to bound the accumulation of propagation error during INT, an iteration-limited INT (ININT) is also proposed to achieve a more accurate 3D location estimation based on the propagated result. As depicted in Eq. (8), is derived from the INT result , and the iteration operations continue until the given tolerance is satisfied:

In addition to the subsequent INT, the propagation-based theme is applied when more observations of the same 3D point arrived based on a reliable initial estimation. Increasing observational information will further optimize the existing triangulation results[13]. Therefore, the proposed INT (ININT) method inherits the convergence property of the unbiased midpoint triangulation method by propagating the current image observation.

4. Experiments

Experimental results are presented to validate the accuracy and efficiency of the proposed methods. The other four benchmarks of multiple triangulation methods are compared. The first one is the native multiple-view middle point (MVMP) method; it is currently the fastest method for multiple-view triangulation, though it is labeled as inaccurate. The second method is the unbiased midpoint method (IRMP)[24]. The third one is considered a benchmark by minimizing the cost function of Eq. (2) using the naive Newton (NN) method. The fourth method is the gradient-based minimization of the reprojection errors, which is abbreviated as the standard gradient minimization of the reprojection errors (GMREs)[14] and popularly used in the recent state-of-the-art SLAM system. All of the experiments are carried out using MATLAB on a single thread on an Intel i7-8550U CPU at 1.80 GHz with 8G memory.

4.1. Experiments on synthetic data

In the synthetic dataset, the same camera calibration parameters are used with an image size of pixels and a focal length of 400 pixels. Four scenarios for multiple-view INT are simulated.

Fig. 2. Four types of synthetic triangulation instances. (a) Type A: cameras and 3D points are randomly distributed; (b) Type B: the camera moves along a curved trajectory around 3D points; (c) Type C: the camera moves on a circle while 3D points are located at the center; (d) Type D: the camera moves along a curved trajectory towards the 3D scene.

As illustrated in Fig. 2, 100 cameras and 1000 3D points are generated as different synthetic scenarios. Then, these 3D points are projected back to the image planes with the calibrated camera matrix, corrupting by Gaussian noise with a 5 pixel covariance. Based on the initialization from unbiased temporal triangulation, the INT problem can be deduced with a successive coming image until all of the sequential images are processed.

To perform a quantitative evaluation on the proposed propagation-based INT (ININT) method, MVMP, IRMP, NN, and GMRE are applied to perform incremental multiple-view triangulation, respectively. During the INT process, these four compared methods carry out the 3D point estimation repeatedly when a new feature observation arrives, lacking the use of the previous triangulated results. In contrast, the proposed INT method considers the consequence of the unbiased temporal triangulation as the seed point, and then only the propagation is executed when encountering a more featured track. It is known that the accuracy of the 3D point is proportional to the number of its observations, and thus the last estimated 3D point with all of the observations is deemed as the most accurate one. Therefore, these 3D points derived from all their observations are projected back to the image planes again to compute the mean 2D reprojection errors. Moreover, the mean 3D errors, the Euclidean distances between generated 3D points with the triangulated ones, are also taken into consideration.

Experimental results are illustrated in Table 1; because iteration is avoided when new observations are added to the INT, the propagation-based INT method is always the fast one. Moreover, both the 3D and 2D errors on different synthetic datasets derived from the proposed INT are superior to the classic MVMP method. Although the seed point of the INT is derived from the unbiased temporal triangulation IRMP, the final 3D results after recursive propagations are close to the continuous IRMP. Compared to the proposed INT method, the GMRE method can obtain slightly better triangulation accuracy, but it takes an enormous amount of runtime. We also find that the accuracy performances of IRMP and NN methods in INT scenarios are almost the same except the runtime, which is also consistent with our previous convergence analysis.

Table 1. Comparisons between Different Incremental Triangulation Performances

| |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

In addition to INT, the ININT method based on a few iterations is applied after the propagated 3D point. Thus, the runtime is a bit longer than the INT method with a superior 3D accuracy. Both the proposed INT and ININT methods can balance the runtime and accuracy in large-scale INTs. The experimental results also demonstrate the convergence ability of the proposed method in different situations.

For further verification of the robustness to varied noises, different Gaussian noise levels are generated to corrupt the 2D observations. Based on the Type D dataset, 2, 5, and 8 pixels covariances are simulated, respectively, while different 3D points are generated, and the performance of different INTs is illustrated in Fig. 3. It is easy to find that the proposed INT (ININT) method is faster than all other methods while obtaining comparable 3D and 2D accuracy, except for the inaccurate MVMP method.

Fig. 3. Time and accuracy analysis with different noise levels on Type D synthetic data. (a), (b), and (c) are the results of the time, 3D error, and 2D error when the Gaussian covariance is set as 2 pixels; (d), (e), and (f) are the results of the time, 3D error, and 2D error when the Gaussian covariance is set as 5 pixels; (g), (h), and (i) are the results of the time, 3D error, and 2D error when the Gaussian covariance is set as 8 pixels.

Compared to the previous synthetic dataset Types A, B, and C, the superior performance on the runtime is obvious because the number of feature tracks during INT is large in the Type D dataset. The results also illustrate that the proposed INT(ININT) methods can provide an alternative for large-scale INTs.

In addition to the efficiency and accuracy evaluation of the proposed method, the convergence performance during INT is verified in this section, where the 3D locations of the synthetic points are assumed as the ground truth, and INT is carried out when new images arrive one by one. Other methods, such as MVMP, IRMP, NN, and GMRE, are also applied to perform the multiple-view triangulation repeatedly after collecting all of the observation vectors.

Due to the length of the feature track for every 3D point being varied during INT, the overall convergence performance of the INT (ININT) method is characterized with the percentage of the views regardless of the number of views, where the total number of the feature track is seen as the 100%, and the mean 3D errors along with INT are calculated with the mean 3D error. Given different synthetic datasets, the results of INT are depicted in Fig. 4. It is easy to find that all the triangulation methods can achieve a higher 3D accuracy with more increased feature observations, and the achievable 3D error seemly decays quadratically as more views are added to the system[13]. Compared to other methods (MVMP, IRMP, NN, and GMRE), by collecting all of the observations of the 3D points, the proposed propagation-based INT (ININT) with a recursive update about the current observation also achieves similar convergence performance.

Fig. 4. Overall convergence of INT with different datasets. (a) Convergence curve of Type A dataset; (b) convergence curve of Type B dataset; (c) convergence curve of Type C dataset; (d) convergence curve of Type D dataset.

4.2. Experiments on real data

We also evaluate our INT method by the publicly available large-scale dataset[27]. During the experiments, more views are thus added to the system one by one after initialization, and then the performance of the different methods with the real datasets is illustrated in Table 2 and Fig. 5. The results show that the proposed INT (ININT) is more efficient than the other methods. Even compared to the inaccurate MVMP, the runtime of INT has a comparative advantage. In addition, the 3D errors of the scene points are also calculated to illustrate the accuracy performance of the proposed methods. For convenient evaluation, the 3D point locations provided by the dataset are considered as the ground truth. It is also obvious to find that the proposed INT (ININT) can approach a reliable triangulation performance during INT.

Table 2. Runtime Comparisons of Different Methods with Real Datasetsa

| |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Fig. 5. INT based on real datasets. (a) Lund Cathedral, (b) Orebro Castle, (c) Ystad Monestary, and (d) Skansen Kronan.

5. Conclusion

An efficient INT method based on propagation for sequential multiple views is proposed. Instead of collecting all of the observations of the 3D point to be located, the proposed INT method only updates the observations of the current view, greatly outperforming the current popular -norm triangulation method in processing time. Besides, based on the result of INT as an initial value, a novel ININT method with few iterations is derived to obtain a better INT accuracy. Experimental results are presented in detail, which validate that the proposed method is more efficient while achieving comparable accuracy in INT. Nevertheless, as a two-step -norm-based triangulation method, the accuracy of the proposed method needs reliable initial 3D point estimation. The proposed method can be integrated to accelerate other multiple-view geometry problems, such as the camera pose estimation derived from the PnP, providing high-efficiency 3D reconstruction for robot localization and visual measurements, which is also our future work.

[15] R. Hartley, P. Sturm. Triangulation. Comput. Vis. Image Und., 1997, 68: 146.

[26] V. Lepetit, F. Moreno-Noguer, P. Fua. EPnP: an accurateo(n) solution to the pnp problem. Int. J. Comput. Vision, 2009, 81: 155.

Article Outline

Wei Fang, Kui Yang, Haiyuan Li. Propagation-based incremental triangulation for multiple views 3D reconstruction[J]. Chinese Optics Letters, 2021, 19(2): 021101.