Incoherent Fourier ptychographic photography using structured light

1. INTRODUCTION

Structured light illumination is important in computational photography and image-based rendering. It has been widely used in object recognition [1,2], 3D shape recovery [3], 4D light field rendering [4], synthetic aperture confocal imaging [5], and dual photography [6]. For example, the Microsoft Kinect uses an IR projector to project a dense structured light pattern onto the sample and a postprocessing algorithm to recover the 3D image of the object. A 3D laser scanner projects a pattern of parallel stripes onto the object and recovers the 3D information from the geometrical deformation of the stripes. In dual photography [6], a video projector generates multiplexed patterns to illuminate the sample. The acquired images are then used to recover the transport matrix, which describes how light from each projector pixel arrives at each camera pixel. This matrix can be used to synthesize a new photorealistic image from the point of view of the projector.

The above-mentioned research directions and applications share a similar system-design strategy: projecting multiple light patterns onto the sample and recovering new information from the acquired images. However, in these approaches, the frequency mixing between the object and the illumination pattern is not considered in the reconstruction process, and the image resolution is determined by the cutoff frequency of the photographic lens. In this article, we propose an incoherent photographic imaging approach, termed Fourier ptychographic photography (FPP), that uses the frequency mixing effect for super-resolution imaging. The concept is similar to structured illumination microscopy (SIM), where a sinusoidal pattern is used to modulate high-frequency sample information into the low-frequency passband [7]. In our implementation, we project a number of high-frequency random patterns onto the sample and acquire the corresponding images of the object. As in SIM, the frequency mixing between the sample and the random illumination pattern shifts high-frequency components into the passband of the collection optics. Therefore, each raw image contains information beyond the cutoff frequency of the photographic lens. Based on multiple images acquired under different structured light patterns, we use the Fourier ptychographic algorithm to recover both the high-resolution object image and the unknown illumination pattern. Consequently, the resolution of the recovered image exceeds the resolution limit set by the lens aperture.
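The frequency-mixing argument can be checked with a small 1D toy model. The sketch below is illustrative only and rests on several assumptions that are not part of the reported setup: an ideal hard-cutoff low-pass OTF (a real incoherent OTF tapers toward its cutoff), a single sinusoidal pattern instead of the random patterns used in FPP, and arbitrary frequencies (`K_CUT`, `K_HIGH`, `K_PAT`, all hypothetical) given as integer FFT bins. Under uniform illumination, the object detail at `K_HIGH` is removed by the lens; under structured illumination, a beat term at `|K_HIGH - K_PAT|` falls inside the passband.

```python
import numpy as np

# 1D toy model (illustrative assumptions): an ideal low-pass OTF with a hard
# cutoff, and all frequencies expressed as integer FFT bins.
N = 512
K_CUT = 40    # hypothetical lens cutoff
K_LOW = 10    # object detail inside the passband
K_HIGH = 100  # object detail beyond the cutoff
K_PAT = 80    # frequency of the projected pattern

x = np.arange(N)
obj = 1.0 + 0.5 * np.cos(2 * np.pi * K_LOW * x / N) + 0.5 * np.cos(2 * np.pi * K_HIGH * x / N)
pattern = 1.0 + np.cos(2 * np.pi * K_PAT * x / N)  # nonnegative illumination

k = np.fft.fftfreq(N, d=1.0 / N)                  # integer frequency of each bin
otf = (np.abs(k) <= K_CUT).astype(float)          # ideal low-pass OTF


def capture(intensity):
    """Image an intensity distribution through the low-pass OTF."""
    return np.real(np.fft.ifft(otf * np.fft.fft(intensity)))


def amplitude(signal, freq):
    """Normalized spectral magnitude of a signal at an integer bin."""
    return np.abs(np.fft.fft(signal)[freq]) / N


uniform = capture(obj)               # uniform illumination
structured = capture(obj * pattern)  # structured illumination

# Mixing K_HIGH with K_PAT creates a beat at |K_HIGH - K_PAT| = 20 bins,
# inside the passband, so the raw image now carries out-of-band detail.
beat = abs(K_HIGH - K_PAT)
print(f"uniform: {amplitude(uniform, beat):.3f}, structured: {amplitude(structured, beat):.3f}")
# prints: uniform: 0.000, structured: 0.125
```

The 0.125 comes from the product term 0.5 cos(K_HIGH) cos(K_PAT) = 0.25 [cos(K_HIGH + K_PAT) + cos(K_HIGH - K_PAT)]; a cosine of amplitude 0.25 contributes half of that amplitude to each of its two FFT bins.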

We note that the use of the frequency mixing effect for super-resolution imaging is not a new idea. This concept is well known in the microscopy community [7]. It has also been used in telescope settings [8,9]. In this paper, we will use such a frequency mixing effect for super-resolution photographic imaging. In particular, we will demonstrate, for the first time to our knowledge, the use of the Fourier ptychographic recovery scheme for the photographic imaging setting. We will show that the reported approach is able to recover both the super-resolution image of the object and the unknown illumination pattern at the same time. Since the system design of the reported approach is compatible with many illumination-based imaging platforms, it may provide new insight for the development of computational photography and find applications in remote sensing, active-illumination night vision systems, and imaging radar.

In the following, we will first introduce the principle and the setup of the reported approach. We will then demonstrate the imaging performance using various targets. Finally, we will discuss the advantages and limitations of the reported approach.

2. FOURIER PTYCHOGRAPHIC PHOTOGRAPHY

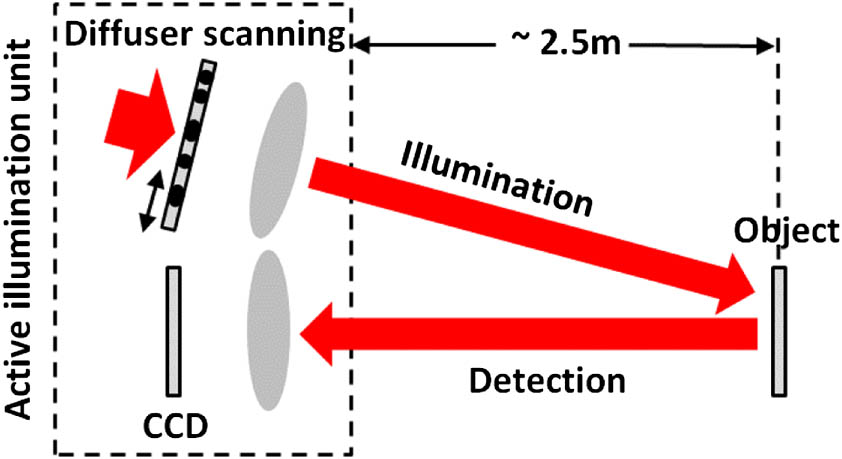

Fig. 1. Schematic of the FPP setup. In the illumination path, a diffused LED was used for incoherent illumination (red arrow on the left). A semitransparent diffuser was placed in front of the diffused LED, and its image was projected onto the object. In the detection path, a photographic lens (Nikon, 50 mm) was used to collect the reflected light from the object.

In our implementation, we used the iterative Fourier ptychographic algorithm to recover both the high-resolution object image and the unknown illumination pattern.

Fig. 2. Recovery procedures of the coherent and incoherent FP approaches. For the case of incoherent FP, the updating processes in steps 3 and 4 can be expressed as Eqs. (1)–(3).
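The incoherent recovery loop can be sketched in Python/NumPy. This is a minimal, hypothetical sketch, not a reproduction of the paper's Eqs. (1)–(3), which are not included in this text. It assumes (i) that the random pattern is translated by known integer shifts between acquisitions, modeled with circular `np.roll`; (ii) a gradient-style Fourier-domain correction that pulls the low-passed target image toward the measured image; and (iii) ePIE-style spatial updates borrowed from coherent ptychography that split the correction between object and pattern. The function names (`lowpass`, `forward`, `fp_incoherent_recover`), the circular-pupil OTF, the 0.1 cycles/pixel pupil cutoff, and the 5×5 grid of shifts are illustrative choices.

```python
import numpy as np


def lowpass(intensity, otf):
    """Incoherent imaging model: filter an intensity map with the OTF."""
    return np.real(np.fft.ifft2(otf * np.fft.fft2(intensity)))


def forward(obj, pattern, shifts, otf):
    """Simulate one raw image per (dy, dx) translation of the pattern."""
    return np.stack([lowpass(obj * np.roll(pattern, s, axis=(0, 1)), otf)
                     for s in shifts])


def fp_incoherent_recover(raw, shifts, otf, n_loops=15):
    """Hypothetical joint recovery of object and pattern from raw images."""
    obj = raw.mean(axis=0)        # initial object: average of raw images
    pattern = np.ones_like(obj)   # initial pattern: uniform illumination
    otf_norm = np.max(np.abs(otf)) ** 2
    for _ in range(n_loops):
        for img, s in zip(raw, shifts):
            p_n = np.roll(pattern, s, axis=(0, 1))  # translated pattern
            target = obj * p_n                       # high-res target image
            spec = np.fft.fft2(target)
            # Fourier-domain update (step 3 in Fig. 2): a gradient step that
            # pulls the low-passed target toward the measured image.
            spec += np.conj(otf) / otf_norm * (np.fft.fft2(img) - otf * spec)
            diff = np.real(np.fft.ifft2(spec)) - target
            # Spatial-domain update (assumed ePIE-style): split the correction
            # between the object and the translated pattern.
            obj_old = obj
            obj = obj + p_n / np.max(np.abs(p_n)) ** 2 * diff
            p_n = p_n + obj_old / np.max(np.abs(obj_old)) ** 2 * diff
            pattern = np.roll(p_n, (-s[0], -s[1]), axis=(0, 1))  # undo shift
    return obj, pattern


# Synthetic test scene (all values are illustrative assumptions).
rng = np.random.default_rng(0)
n = 64
true_obj = rng.random((n, n))
true_pattern = rng.random((n, n))  # speckle-like random pattern
shifts = [(dy, dx) for dy in range(0, 10, 2) for dx in range(0, 10, 2)]

fy = np.fft.fftfreq(n)[:, None]
fx = np.fft.fftfreq(n)[None, :]
pupil = (np.hypot(fx, fy) <= 0.1).astype(float)  # circular pupil
psf = np.abs(np.fft.ifft2(pupil)) ** 2           # incoherent intensity PSF
otf = np.fft.fft2(psf) / psf.sum()               # normalized so otf[0, 0] = 1

raw = forward(true_obj, true_pattern, shifts, otf)
obj_hat, pattern_hat = fp_incoherent_recover(raw, shifts, otf)


def residual(obj, pattern):
    """Sum of squared differences between measured and predicted images."""
    return np.sum((raw - forward(obj, pattern, shifts, otf)) ** 2)


print("initial residual:", residual(raw.mean(axis=0), np.ones((n, n))))
print("final residual:  ", residual(obj_hat, pattern_hat))
```

Because the raw images depend only on the product of object and pattern, this sketch can recover the two only up to a common scale factor: multiplying the object by a constant and dividing the pattern by the same constant leaves the data unchanged. The printed residuals serve as a simple convergence check, not as a resolution measurement.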

The updating process in the Fourier domain (step 3, Fig.

The iterative updating process using Eqs. (

3. IMAGING PERFORMANCE OF THE FPP

To demonstrate the imaging performance of the reported FPP approach, we first used a resolution target as the sample and followed the FPP procedures to recover the super-resolution image.

Fig. 3. Imaging performance of the reported FPP platform. (a) The reference image captured under uniform illumination, (b1) the captured raw image under pattern illumination, (b2) the recovered image using 100 raw images, (b3) the recovered illumination pattern, (b4) line traces of (a) and (b2). Also refer to Media 1.

Fig. 4. Image reconstruction using different numbers of raw images. The solution converges with 16 raw images. We used 15–20 loops in this experiment.

We also used the reported platform to image different objects, and the results are shown in Fig. 5.

Fig. 5. Demonstration of the reported platform for different objects: a dollar bill, a quick response code, and an insect. (a1)–(c1) The reference images under uniform illumination, (a2)–(c2) the recovered images using the reported platform. We used 100 raw images and 15 loops for the reconstruction. Also refer to Media 2.

In Fig.

Fig. 6. Imaging a color object using the reported platform. (a1)–(a3) Reference images using uniform R/G/B illumination, (b) combined reference color image, (c1)–(c3) recovered super-resolution images using the reported platform, (d) combined super-resolution color image.

4. CONCLUSION AND DISCUSSION

In conclusion, we have demonstrated a photographic imaging approach that uses computational illumination for super-resolution imaging. Our setup is similar to many existing computational illumination platforms, where structured light patterns are projected onto the sample and the corresponding images are used to recover hidden information about the object. In the reported approach, we used the structured light pattern to modulate the high-frequency component of the object into the low-frequency passband. We then used the Fourier ptychographic algorithm to recover the object image. We have shown that the reported approach improves the resolution beyond the resolution limit of the photographic lens.

The design focus of conventional photographic imaging platforms is the collection optics. More and more lens elements are used in the optical design to correct for aberrations. This effort is required mainly because the image sensor is a 2D planar surface. If the sensor surface itself, rather than the lens elements, were curved, large-aperture imaging systems could easily be realized with a simple lens system containing fewer optical elements. However, an image sensor with a curved surface is not compatible with the existing workflow of the semiconductor industry. In the reported platform, by contrast, the semitransparent diffuser can be fabricated on a curved surface. We can therefore project high-frequency speckle patterns onto the object with a simple large-aperture lens system, capture the corresponding images, and use the iterative algorithm to recover the high-resolution image of the object.

The reported platform also has three limitations. (1) It is computationally intensive; real-time reconstruction would need to be implemented on a graphics processing unit (GPU). (2) Multiple frames are needed for high-resolution reconstruction. One future direction is to design an optimal projection pattern that minimizes the number of acquisitions. (3) We assume the object is static in our implementation. If the object moves during acquisition (for example, products on a conveyor belt), its motion may itself provide a means of mechanical scanning. In this case, we may need to track the motion of the object instead of projecting translated patterns.

Finally, we reiterate that the use of the frequency mixing effect for super-resolution imaging is well known in the microscopy community. In this paper, we have demonstrated, for the first time to our knowledge, the use of FP for incoherent photographic imaging. The results of this paper may provide some insights for the computational photography and computer vision communities. They may also find applications in remote sensing, active-illumination night vision systems, and imaging radar.

[1] K.-C. Lee, J. Ho, D. Kriegman. Nine points of light: acquiring subspaces for face recognition under variable lighting. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recogn., 2001, 1: 519-526.

[3]

[5] M. Levoy, B. Chen, V. Vaish, M. Horowitz, I. McDowall, M. Bolas. Synthetic aperture confocal imaging. ACM Trans. Graph., 2004, 23: 825-834.

[6] P. Sen, B. Chen, G. Garg, S. R. Marschner, M. Horowitz, M. Levoy, H. Lensch. Dual photography. ACM Trans. Graph., 2005, 24: 745-755.

[9] A. J. Lambert, D. Fraser. Superresolution in imagery arising from observation through anisoplanatic distortion. Proc. SPIE, 2004, 5562: 65-75.

[17] R. W. Gerchberg, W. O. Saxton. A practical algorithm for the determination of phase from image and diffraction plane pictures. Optik, 1972, 35: 237-250.

[20] J. R. Fienup. Phase retrieval algorithms: a comparison. Appl. Opt., 1982, 21: 2758-2769.

Siyuan Dong, Pariksheet Nanda, Kaikai Guo, Jun Liao, Guoan Zheng. Incoherent Fourier ptychographic photography using structured light[J]. Photonics Research, 2015, 3(1): 01000019.