High-speed dual-view band-limited illumination profilometry using temporally interlaced acquisition

1. INTRODUCTION

Three-dimensional (3D) surface imaging has been extensively applied in numerous fields in industry, entertainment, and biomedicine [1,2]. Among existing methods, structured-light profilometry has gained increasing popularity in measuring dynamic 3D objects because of its high measurement accuracy and high imaging speeds [3–6]. As the most widely used method in structured-light profilometry, phase-shifting fringe projection profilometry (PSFPP) uses a set of sinusoidal fringe patterns as the basis for coordinate encoding. In contrast to other structured-light approaches, such as binary pattern projection [7], the pixel-level information carried by the phase of the fringe patterns is insensitive to variations in reflectivity across an object’s surface, which enables high accuracy in 3D measurements [8]. The sinusoidal fringes employed in PSFPP are commonly generated using digital micromirror devices (DMDs). Each micromirror on the DMD can be independently tilted to one of two angles relative to its surface normal to generate binary patterns at up to tens of kilohertz. Despite being a binary amplitude spatial light modulator [9], the DMD can be used to generate grayscale fringe patterns at high speeds [10–14]. The conventional dithering method controls the average reflectance rate of each micromirror to form a grayscale image. However, the projection rate of fringe patterns is clamped at hundreds of hertz. To improve the projection speed, binary defocusing techniques [13] have been developed to produce a quasi-sinusoidal pattern by slightly defocusing a single binary DMD pattern. Nonetheless, the image is generated at a plane unconjugate to the DMD, which compromises the depth-sensing range and is less convenient to operate with fringe patterns of different frequencies. Recently, these limitations have been lifted by the development of band-limited illumination [14], which controls the system bandwidth by placing a pinhole low-pass filter at the Fourier plane of an imaging system.
Both the binary defocusing method and the band-limited illumination scheme allow the generation of one grayscale sinusoidal fringe pattern from a single binary DMD pattern. Thus, the fringe projection speed matches the DMD’s refresh rate.

High-speed image acquisition is also indispensable to DMD-based PSFPP. In the standard phase-shifting methods, extra calibration patterns must be used to avoid phase ambiguity [15], which reduces the overall 3D imaging speed [16]. A solution to this problem is to place multiple cameras on both sides of the projector to simultaneously capture the full sequence of fringe patterns [17–20]. These multiview approaches enrich the observation of 3D objects in data acquisition. Pixel matching between different views is achieved with various aids, including epipolar line rectification [17], measurement-volume-dependent geometry [18], and wrapped phase monotonicity [19]. Using these methods, the object’s 3D surface information can be directly retrieved from the wrapped phase maps [5]. Consequently, the need for calibration patterns is eliminated in data acquisition and phase unwrapping. This advancement, along with the steadily increasing imaging speeds of cameras [21–24], has endowed multiview PSFPP systems with image acquisition rates that keep up with the DMD’s refresh rates.

Despite these advantages, existing multiview PSFPP systems have two main limitations. First, each camera captures the full sequence of fringe patterns. This requirement imposes redundancy in data acquisition, which ultimately clamps the systems’ imaging speeds. Given the finite readout rates of camera sensors, a sacrifice of the field of view (FOV) is inevitable for higher imaging speeds. Although advanced signal processing approaches such as image interpolation [25] and compressed sensing [26] have been applied to mitigate this trade-off, they usually are accompanied by high computational complexity and reduced image quality [27]. Second, the cameras are mostly placed on different sides of the projector. This arrangement could induce a large intensity difference from the directional scattering light and the shadow effect from the occlusion by local surface features, both of which reduce the reconstruction accuracy and pose challenges in imaging non-Lambertian surfaces [20].

To overcome these limitations, we have developed dual-view band-limited illumination profilometry (BLIP) with temporally interlaced acquisition (TIA). A new algorithm is developed for coordinate-based 3D point matching from different views. Implemented with two cameras, TIA allows each to capture half of the sequence of the phase-shifted patterns, reducing the data transfer load of each camera by 50%. This freed capacity is used either to transfer data from more pixels on each camera’s sensor or to support using higher frame rates of both cameras. In addition, the two cameras are placed as close as possible on the same side of the projector, which largely mitigates the intensity difference and shadow effects. Leveraging these advantages, TIA–BLIP has enabled high-speed 3D imaging of glass vibration induced by sound and glass breakage by a hammer.

2. METHOD

2.1 A. Setup

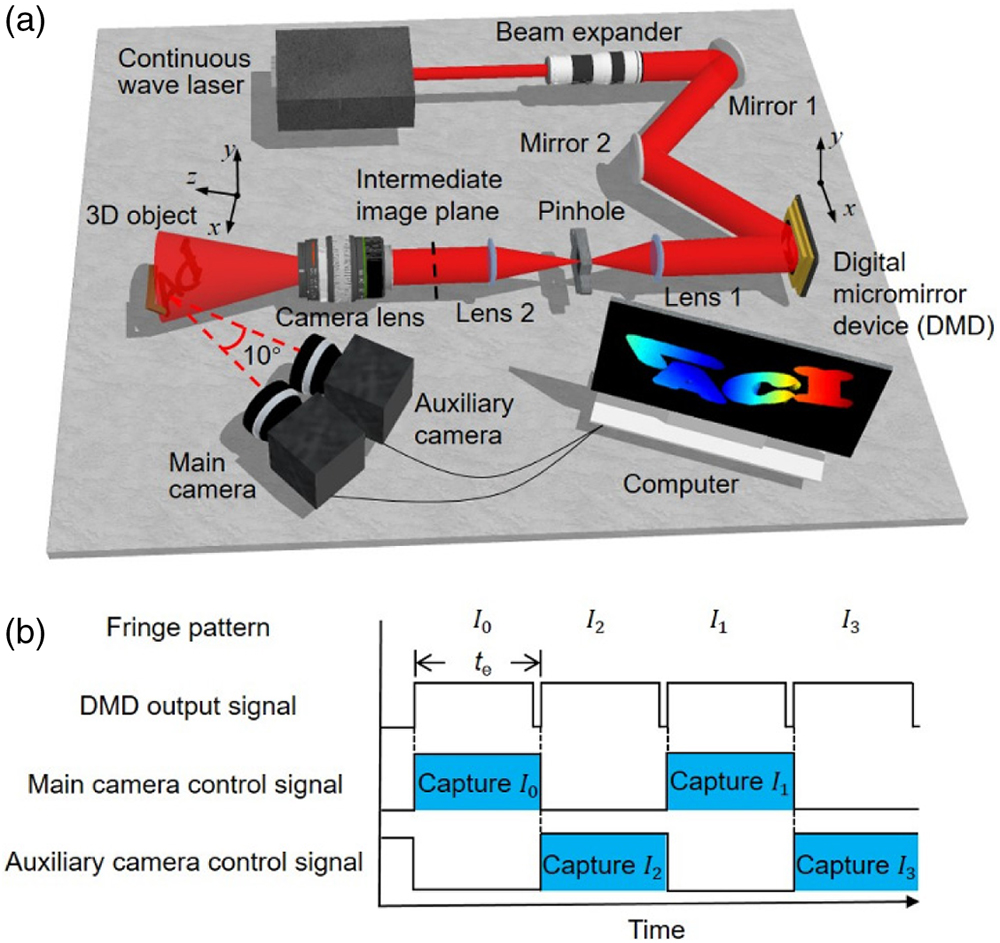

The schematic of the TIA–BLIP system is shown in Fig. 1(a). A 200 mW continuous-wave laser (wavelength , MRL-III-671, CNI Lasers) is used as the light source. After expansion and collimation, the laser beam is directed to a 0.45” DMD (AJD-4500, Ajile Light Industries) at an incident angle of to its surface normal. Four phase-shifting binary patterns, generated by an error diffusion algorithm [28] from their corresponding grayscale sinusoidal patterns, are loaded onto the DMD. A band-limited imaging system that consists of two lenses [Lens 1 and Lens 2 in Fig. 1(a)] and one pinhole converts these binary patterns to grayscale fringes at the intermediate image plane. The two lenses have focal lengths of and . The pinhole works as a low-pass filter. Its diameter, determined by the system bandwidth, is calculated as

Fig. 1. Operating principle of TIA–BLIP. (a) System schematic. (b) Timing diagram and acquisition sequence.
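The pattern-generation chain described above (grayscale sinusoid, error-diffusion binarization, pinhole low-pass filtering) can be illustrated numerically. The following is a minimal sketch, not the authors' implementation: the text cites an error diffusion algorithm [28] without naming it here, so Floyd-Steinberg diffusion is assumed; the pinhole is modeled as an ideal circular mask in the discrete Fourier domain; and the pattern size, fringe period, and cutoff are hypothetical values chosen only for the demonstration.

```python
import numpy as np

def sinusoidal_fringe(height, width, period_px, phase):
    """Grayscale fringe in [0, 1] that varies along the horizontal axis."""
    u = np.arange(width)
    row = 0.5 + 0.5 * np.cos(2 * np.pi * u / period_px - phase)
    return np.tile(row, (height, 1))

def floyd_steinberg(gray):
    """Binarize a [0, 1] image by Floyd-Steinberg error diffusion (assumed variant)."""
    img = gray.astype(float).copy()
    h, w = img.shape
    out = np.zeros((h, w))
    for y in range(h):
        for x in range(w):
            old = img[y, x]
            new = 1.0 if old >= 0.5 else 0.0
            out[y, x] = new
            err = old - new
            # Push the quantization error onto pixels not yet visited.
            if x + 1 < w:
                img[y, x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    img[y + 1, x - 1] += err * 3 / 16
                img[y + 1, x] += err * 5 / 16
                if x + 1 < w:
                    img[y + 1, x + 1] += err * 1 / 16
    return out

def pinhole_lowpass(binary, cutoff_cycles_per_px):
    """Keep only spatial frequencies inside a circular aperture (idealized pinhole)."""
    h, w = binary.shape
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    mask = (fx ** 2 + fy ** 2) <= cutoff_cycles_per_px ** 2
    return np.real(np.fft.ifft2(np.fft.fft2(binary) * mask))

# Hypothetical parameters: 64 x 128 pattern, 32-pixel fringe period,
# cutoff set slightly above the fringe frequency of 1/32 cycles per pixel.
target = sinusoidal_fringe(64, 128, period_px=32, phase=0.0)
binary = floyd_steinberg(target)
filtered = pinhole_lowpass(binary, cutoff_cycles_per_px=1.5 / 32)

rms_binary = float(np.sqrt(np.mean((binary - target) ** 2)))
rms_filtered = float(np.sqrt(np.mean((filtered - target) ** 2)))
print(f"RMS error before filtering: {rms_binary:.3f}, after: {rms_filtered:.3f}")
```

Because error diffusion pushes the quantization noise toward high spatial frequencies, a cutoff just above the fringe frequency removes most of it while passing the sinusoid, which is the intuition behind choosing the pinhole diameter from the system bandwidth.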

2.2 B. System Calibration

To recover 3D information from the mutually incomplete images provided by the interlaced acquisition, TIA–BLIP relies on a coordinate-based understanding of the spatial relationship of the projector and both cameras in image formation. In particular, a “pinhole” model [29],

Based on the pinhole model, both cameras and the projector can be calibrated to determine the values of these parameters. Using a checkerboard as the calibration object, we adopted the established calibration procedure and software toolbox [30]. Since the direct image acquisition is not possible for a projector, the phase-based mapping method [29] was used to synthesize projector-centered images of the calibration object. These images were subsequently sent to the toolbox with calibration proceeding in the same manner as for the cameras.
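As a reminder of what the calibrated parameters do, the sketch below projects a 3D point to pixel coordinates with the standard distortion-free pinhole model, s·[u, v, 1]ᵀ = K·(R·[x, y, z]ᵀ + t). The symbols K (intrinsic matrix), R, and t (extrinsic rotation and translation) and all numerical values are assumptions for illustration; the model of Eq. (2) may use different notation and may include terms, such as lens distortion, that are omitted here.

```python
import numpy as np

def project(K, R, t, xyz):
    """Project a 3D world point to pixel coordinates with a distortion-free pinhole model."""
    p = K @ (R @ np.asarray(xyz, dtype=float) + t)
    return p[:2] / p[2]  # divide out the scalar factor s

# Hypothetical intrinsics: 1200-pixel focal length, principal point at (640, 512).
K = np.array([[1200.0, 0.0, 640.0],
              [0.0, 1200.0, 512.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)    # device axes aligned with the world axes
t = np.zeros(3)  # device located at the world origin

u, v = project(K, R, t, [0.1, -0.05, 2.0])
print(u, v)
```

The same function applies to the projector once its parameters have been obtained through the phase-based mapping described above, since the projector is treated as an inverse camera.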

2.3 C. Coordinate-Based 3D Point Determination

In the context of the pinhole model of Eq. (2), a coordinate-based method is used to recover 3D information from a calibrated imaging system. Two independent coordinates correspond to a point on a 3D object with the coordinates : for the camera and for the projector. In a calibrated PSFPP system, any three of these coordinates [i.e., ] can be determined. Then a linear system of the form is derived. The elements of and are found by using each device’s calibration parameters as well as by using the scalar factors and the three determined coordinates [31]. In this way, the 3D information of an object point can be extracted via matrix inversion.
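The linear system mentioned above can be sketched as follows, assuming the three known coordinates are the camera pixel (u, v) and the projector's horizontal coordinate. Each known coordinate contributes one row obtained by eliminating the scalar factor from the pinhole model. The function and variable names, the distortion-free model, and the calibration values are assumptions for illustration, not the formulation of Ref. [31].

```python
import numpy as np

def projection_matrix(K, R, t):
    """3x4 matrix P = K [R | t] of a distortion-free pinhole device."""
    return K @ np.hstack([R, np.reshape(t, (3, 1))])

def project(P, xyz):
    p = P @ np.append(xyz, 1.0)
    return p[:2] / p[2]

def point_from_three_coords(P_cam, P_proj, u, v, u_proj):
    """Solve M [x, y, z]^T = b built from camera (u, v) and projector column u_proj."""
    rows = np.vstack([
        P_cam[0] - u * P_cam[2],         # from u = (P1 . X) / (P3 . X)
        P_cam[1] - v * P_cam[2],         # from v = (P2 . X) / (P3 . X)
        P_proj[0] - u_proj * P_proj[2],  # same relation for the projector column
    ])
    M, b = rows[:, :3], -rows[:, 3]
    return np.linalg.solve(M, b)

# Hypothetical calibration: camera at the origin, projector offset by 0.2 along x.
K_cam = np.array([[1200.0, 0, 640], [0, 1200.0, 512], [0, 0, 1]])
K_proj = np.array([[1000.0, 0, 456], [0, 1000.0, 570], [0, 0, 1]])
P_cam = projection_matrix(K_cam, np.eye(3), np.zeros(3))
P_proj = projection_matrix(K_proj, np.eye(3), np.array([-0.2, 0.0, 0.0]))

truth = np.array([0.05, 0.02, 1.5])
u, v = project(P_cam, truth)
u_proj, _ = project(P_proj, truth)
recovered = point_from_three_coords(P_cam, P_proj, u, v, u_proj)
print(recovered)
```

The system is solvable as long as the plane defined by the projector column does not contain the camera's viewing ray, which is the usual triangulation requirement of a nonzero baseline.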

This analysis can be adapted to dual-view TIA–BLIP. First, images from a selected calibrated camera are used to provide the coordinates of a point on a 3D object. Along with the system’s calibration parameters, an epipolar line is determined on the other camera. The horizontal coordinate in the images of this camera is recovered using search-based algorithms along this epipolar line—a procedure commonly referred to as stereo vision. Second, by substituting a calibrated projector for the secondary camera, structured light methods use the intensity values of the pixel across a sequence of images to recover information about a coordinate of the projector. By incorporating both aspects, 3D information can be extracted pixel by pixel based on interlaced image acquisition.
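The epipolar line used in the stereo-vision part of this procedure can be computed from the calibration parameters of the two cameras. The sketch below uses the textbook fundamental-matrix construction under a distortion-free pinhole model; all names and numerical values are hypothetical and are not taken from the system described here.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x such that skew(t) @ a == np.cross(t, a)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def fundamental(K1, R1, t1, K2, R2, t2):
    """Fundamental matrix mapping a pixel of camera 1 to its epipolar line in camera 2."""
    R = R2 @ R1.T    # relative rotation from camera 1 to camera 2
    t = t2 - R @ t1  # relative translation
    return np.linalg.inv(K2).T @ skew(t) @ R @ np.linalg.inv(K1)

def epipolar_line(F, u, v):
    """Line (a, b, c) with a*u' + b*v' + c = 0, scaled so |a*u' + b*v' + c| is a pixel distance."""
    a, b, c = F @ np.array([u, v, 1.0])
    return np.array([a, b, c]) / np.hypot(a, b)

def project(K, R, t, xyz):
    p = K @ (R @ xyz + t)
    return p[:2] / p[2]

# Hypothetical side-by-side cameras with a small relative rotation about the y axis.
K = np.array([[1200.0, 0, 640], [0, 1200.0, 512], [0, 0, 1]])
R1, t1 = np.eye(3), np.zeros(3)
c, s = np.cos(0.05), np.sin(0.05)
R2 = np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])
t2 = np.array([-0.1, 0.0, 0.0])

point = np.array([0.05, 0.02, 1.5])
u_m, v_m = project(K, R1, t1, point)  # pixel in the main camera
u_a, v_a = project(K, R2, t2, point)  # true match in the auxiliary camera

line = epipolar_line(fundamental(K, R1, t1, K, R2, t2), u_m, v_m)
distance_px = abs(line @ np.array([u_a, v_a, 1.0]))
print(distance_px)
```

The true match lies on the line to within numerical precision, so the search for the matching point reduces to a one-dimensional search along it.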

1. Data Acquisition

In data acquisition, four fringe patterns, whose phases are equally shifted by , illuminate a 3D object. The intensity value for the pixel in the th acquired image, , is expressed as

Equation (3) allows the analysis of two types of intensity-matching conditions for the order of pattern projection shown in Fig. 1(b). The coordinates of a selected pixel in the images of the main camera are denoted by . For a pixel in the images of the auxiliary camera that perfectly corresponds with , Eq. (3) allows us to write

2. Image Reconstruction

We developed a four-step algorithm to recover the 3D image of the object pixel by pixel. In brief, for a selected pixel of the main camera, the algorithm locates a matching point in the images of the auxiliary camera. From knowledge of the camera calibration, this point then enables the determination of estimated 3D coordinates as well as the ability to recover a wrapped phase. Using knowledge of the projector calibration, this phase value is used to calculate a horizontal coordinate on the projector’s plane. A final 3D point is then recovered using the coordinate-based method. A flowchart of this algorithm is provided in Fig. 2(a).
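To make the intensity-matching idea concrete, the sketch below assumes the standard four-step model I_k = I' + I''·cos(φ − kπ/2) for k = 0..3, with the main camera recording I_0 and I_1 and the auxiliary camera recording I_2 and I_3. Under that assumption I_0 + I_2 = I_1 + I_3, so a correct match satisfies I_0 − I_1 = I_3 − I_2. The phase step, the assignment of frames to cameras, and all numerical values are assumptions for illustration; they are not copied from Eqs. (3)–(5).

```python
import numpy as np

def fringe_intensity(mean, modulation, phase, k):
    """Assumed four-step model: I_k = mean + modulation * cos(phase - k*pi/2)."""
    return mean + modulation * np.cos(phase - k * np.pi / 2)

def matching_residual(i0_main, i1_main, i2_aux, i3_aux):
    """Zero when (I0 - I1) from the main camera equals (I3 - I2) from the auxiliary camera."""
    return (i0_main - i1_main) - (i3_aux - i2_aux)

def wrapped_phase(i0, i1, i2, i3):
    """Four-step wrapped phase in (-pi, pi]."""
    return np.arctan2(i1 - i3, i0 - i2)

# One main-camera pixel with a hypothetical phase of 40 degrees.
true_phase = np.deg2rad(40.0)
i0 = fringe_intensity(100.0, 40.0, true_phase, 0)
i1 = fringe_intensity(100.0, 40.0, true_phase, 1)

# Auxiliary-camera candidates sampled every 0.5 degrees over one fringe period.
candidate_phases = np.linspace(-np.pi, np.pi, 721)
i2 = fringe_intensity(100.0, 40.0, candidate_phases, 2)
i3 = fringe_intensity(100.0, 40.0, candidate_phases, 3)

residual = np.abs(matching_residual(i0, i1, i2, i3))
matches_deg = np.rad2deg(candidate_phases[residual < 1e-6])
print(matches_deg)  # candidates that satisfy the intensity-matching condition

# With the correct candidate, the interlaced frames give back the phase.
idx = np.argmin(np.abs(candidate_phases - true_phase))
phase_deg = np.rad2deg(wrapped_phase(i0, i1, i2[idx], i3[idx]))
```

Under this model, two phases per fringe period satisfy the condition (here 40° and −130°), which illustrates why intensity matching alone is not sufficient and additional constraints and penalty scores are needed to single out the correct candidate.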

Fig. 2. Coordinate-based 3D point determination algorithm. (a) Flowchart of the algorithm.

In the first step, and are calculated. Then, a threshold intensity, calculated from a selected background region, is used to eliminate pixels with low intensities. The thresholding results in a binary quality map [see Step I in Fig. 2(a)]. Subsequently, only pixels that fall within the quality map of the main camera are considered for 3D information recovery.

In the second step, the selected pixel determines an epipolar line containing the matching point within the auxiliary camera’s images. Then, the algorithm extracts the candidates that satisfy the intensity-matching condition [i.e., Eq. (5); illustrative data shown in Fig. 2(b)] in addition to three constraints [see Step II in Fig. 2(a)]. The subscript “” denotes the th candidate. As displayed in the illustrative data in Fig. 2(c), the first constraint requires candidates to fall within the quality map of the auxiliary camera. The second constraint requires that candidates occur within a segment of the epipolar line determined by a fixed transformation that approximates the location of the matching point. This approximation is provided by a 2D projective transformation (or homography) that determines the estimated corresponding point by [32]

The third constraint requires the selected point and candidates to have the same sign of their wrapped phases [Fig. 2(d)]. Estimates of the wrapped phases are obtained using the technique of Fourier transform profilometry [5]. In particular, by bandpass filtering the left side of Eq. (5), i.e., , the intensity of pixel in the filtered image is

In the third step, three criteria are used to calculate penalty scores for each candidate, as shown in Step III in Fig. 2(a). The scheme is shown in Fig. 2(e). The first and primary criterion compares the phase values of the candidates using two methods. First, the phase inferred from the intensities of candidates and the pixel is calculated by

To improve the robustness of the algorithm, two additional criteria are implemented using data available from the second step. is a normalized distance score favoring candidates located closer to the estimated matching point , which is calculated by

In the final step, the algorithm determines the final 3D coordinates [see Step IV in Fig. 2(a) and the scheme in Fig. 2(e)]. First, is unwrapped as , where is an integer making . Then, the coordinate on the projector’s plane, , is recovered with subpixel resolution as

3. RESULTS

3.1 A. Quantification of Depth Resolution

To quantify the depth resolution of TIA–BLIP with different exposure times, we imaged two stacked planar surfaces offset by (Fig. 3). Reconstructed results at four representative exposure times (denoted as ) are shown in Fig. 3(a). One area on each surface [marked as white solid boxes in Fig. 3(a)] was selected in the reconstructed image. The depth information on the axis was calculated by averaging the depth values along the axis. The difference in depths between these two surfaces is denoted by . In addition, the noise is defined as the averaged value of the standard deviations in depth from both surfaces. The depth resolution is defined as when equals twice the system’s noise level. As shown in the four plots in Fig. 3(a), the reconstruction results deteriorate with shorter exposure time, manifested by increased noise levels and more points incapable of retrieving 3D information. As a result, the depth resolution degrades from 0.06 mm at to 0.45 mm at [Fig. 3(b)]. At , TIA–BLIP fails in 3D measurements. The region of unsuccessful reconstruction prevails across most of the planar surfaces. The noise dominates the calculated depth difference, which is attributed to the low signal-to-noise ratio in the captured images.
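The quantities defined in this paragraph can be computed as in the sketch below. The data are synthetic: the two planar regions, their 5 mm offset, and the 0.03 mm noise level are hypothetical stand-ins for the measured depth maps, and the averaging axis is chosen arbitrarily.

```python
import numpy as np

def depth_statistics(region_a, region_b):
    """Return (depth difference, noise) for two planar regions of a depth map in mm."""
    # Average along one image axis to obtain a depth profile for each surface.
    profile_a = region_a.mean(axis=0)
    profile_b = region_b.mean(axis=0)
    depth_difference = abs(profile_b.mean() - profile_a.mean())
    # Noise: average of the depth standard deviations of the two surfaces.
    noise = 0.5 * (region_a.std() + region_b.std())
    return depth_difference, noise

rng = np.random.default_rng(0)
sigma_mm = 0.03  # hypothetical measurement noise
region_a = 0.0 + sigma_mm * rng.standard_normal((50, 50))
region_b = 5.0 + sigma_mm * rng.standard_normal((50, 50))

delta_z, noise = depth_statistics(region_a, region_b)
depth_resolution = 2.0 * noise  # smallest depth step equal to twice the noise level
resolvable = delta_z >= depth_resolution
print(f"delta_z = {delta_z:.3f} mm, noise = {noise:.3f} mm, "
      f"resolution = {depth_resolution:.3f} mm")
```

With this definition, a higher noise level at shorter exposure times translates directly into a coarser depth resolution, consistent with the trend reported in Fig. 3(b).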

Fig. 3. Quantification of TIA–BLIP’s depth resolution. (a) 3D images of the planar surfaces (top image) and measured depth difference (bottom plot) at four exposure times. The white boxes represent the selected regions for analysis. (b) Relation between measured depth differences and their corresponding exposure times.

3.2 B. Imaging of Static 3D Objects

To examine the feasibility of TIA–BLIP, we imaged various static 3D objects. First, two sets of 3D distributed letter toys that composed the words “LACI” and “INRS” were imaged. Shown in Fig. 4(a), the two perspective views of the reconstructed results reveal the 3D position of each letter toy. The detailed surface structures are illustrated by the selected depth profiles [see the white dashed lines and the magenta dashed boxes in Fig. 4(a)]. We also conducted a proof-of-concept experiment on three cube toys with fine structures (with a depth of ) on the surfaces. Depicted in Fig. 4(b), the detailed structural information of these cube toys is recovered by TIA–BLIP.

Fig. 4. TIA–BLIP of static 3D objects. (a) Reconstructed results of letter toys. Two perspective views are shown in the top row. Selected depth profiles (marked by the white dashed lines in the top images) and close-up views are shown in the bottom row. (b) Two perspective views of the reconstruction results of three toy cubes.

3.3 C. Imaging of Dynamic 3D Objects

To verify high-speed 3D surface profilometry, we used TIA–BLIP to image two dynamic scenes: a moving hand and three bouncing balls. The fringe patterns were projected at 4 kHz. The exposure times of both cameras were . Under these experimental conditions, TIA–BLIP had a 3D imaging speed of 1 thousand frames per second (kfps), an FOV of 180 mm × 130 mm (corresponding to ) in captured images, and a depth resolution of 0.24 mm. Figure 5(a) shows the reconstructed 3D images of the moving hand at five time points from 0 ms to 60 ms with a time interval of 15 ms (see the full evolution in

![TIA–BLIP of dynamic objects. (a) Reconstructed 3D images of a moving hand at five time points. (b) Movement traces of four fingertips [marked in the first panel in (a)]. (c) Front view of the reconstructed 3D image of the bouncing balls at five different time points. (d) Evolution of 3D positions of the three balls [marked in the third panel in (c)].](/richHtml/prj/2020/8/11/11001808/img_005.jpg)

Fig. 5. TIA–BLIP of dynamic objects. (a) Reconstructed 3D images of a moving hand at five time points. (b) Movement traces of four fingertips [marked in the first panel in (a)]. (c) Front view of the reconstructed 3D image of the bouncing balls at five different time points. (d) Evolution of 3D positions of the three balls [marked in the third panel in (c)].

In the second experiment, three white balls, each of which was marked by a different letter on its surface, bounced in an inclined transparent container. Figure 5(c) shows five representative reconstructed images from 8 ms to 28 ms with a time interval of 5 ms. The changes of the letter “C” on and the letter “L” on [marked in the third panel of Fig. 5(c)] clearly show the rotation of the two balls (see the full evolution in

Under the same experimental settings and pattern sequence choice, TIA–BLIP surpasses the existing PSFPP techniques in pixel counts and hence the imaging FOV. At the 1 kfps 3D imaging speed, the systems of standard single-camera PSFPP [14] and multiview PSFPP [19] would restrict their imaging FOV to and , respectively [33]. In contrast, TIA, with a frame size of pixels, increases the FOV by 3.87 and 2.07 times, respectively.

3.4 D. Imaging of Sound-Induced Vibration on Glass

To highlight the broad utility of TIA–BLIP, we imaged sound-induced vibration on glass. In this experiment [Fig. 6(a)], a glass cup was fixed on a table. The glass’s surface was painted white. A function generator drove a speaker to produce single-frequency sound signals (from 450 Hz to 550 Hz with a step of 10 Hz) through a sound channel placed close to the cup’s wall. To image the vibration dynamics, fringe patterns were projected at 4.8 kHz. The cameras had an exposure time of . This configuration enabled a 3D imaging speed of 1.2 kfps, an FOV of 146 mm × 130 mm (corresponding to ) in captured images, and a depth resolution of 0.31 mm. Figure 6(b) shows four representative 3D images of the instantaneous shapes of the glass cup driven by the 500 Hz sound signal (the full sequence is shown in

Fig. 6. TIA–BLIP of sound-induced vibration on glass. (a) Schematic of the experimental setup. The field of view is marked by the red dashed box. (b) Four reconstructed 3D images of the cup driven by a 500-Hz sound signal. (c) Evolution of the depth change of five points marked in the first panel of (b) with the fitted result. (d) Evolution of the averaged depth change with the fitted results under the driving frequencies of 490 Hz, 500 Hz, and 510 Hz. Error bar: standard deviation of

We further analyzed time histories of averaged depth displacements under different sound frequencies. Figure 6(d) shows the results at the driving frequencies of 490 Hz, 500 Hz, and 510 Hz. The results were fitted by sinusoidal functions with frequencies of 490.0 Hz, 499.4 Hz, and 508.6 Hz, respectively. These results show that the rigid glass cup vibrated in compliance with the driving frequency. Moreover, the amplitudes of the fitted results were used to determine the relationship between the depth displacement and the sound frequency [Fig. 6(e)]. We fitted this result by the Lorentz function, which determined the resonant frequency of this glass cup to be 499.0 Hz.
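A resonance fit of this kind can be sketched as follows. The exact form of the Lorentz function and the fitting routine are not stated in the text, so the sketch assumes A(f) = A0 / (1 + ((f − f0)/γ)²) and uses a linearized least-squares fit: 1/A is quadratic in f, so a second-order polynomial fit yields f0, γ, and A0. The amplitudes are synthetic; only the frequency grid (450 Hz to 550 Hz in 10 Hz steps) comes from the experiment description.

```python
import numpy as np

def lorentzian(f, amplitude, f0, gamma):
    """Assumed Lorentz line shape with peak `amplitude` at `f0` and half width `gamma`."""
    return amplitude / (1.0 + ((f - f0) / gamma) ** 2)

def fit_lorentzian(freqs, amplitudes):
    """Fit by noting that 1/A(f) is a parabola in f; returns (amplitude, f0, gamma)."""
    center = freqs.mean()  # center the frequencies for numerical conditioning
    x = freqs - center
    c2, c1, c0 = np.polyfit(x, 1.0 / amplitudes, 2)
    x0 = -c1 / (2.0 * c2)                   # vertex of the parabola
    inv_peak = c0 - c1 ** 2 / (4.0 * c2)    # value of 1/A at the vertex
    return 1.0 / inv_peak, center + x0, np.sqrt(inv_peak / c2)

freqs = np.arange(450.0, 551.0, 10.0)  # driving frequencies in Hz
amplitudes = lorentzian(freqs, 0.5, 499.0, 12.0)  # synthetic, noise-free data

peak, f0, gamma = fit_lorentzian(freqs, amplitudes)
print(f"resonant frequency = {f0:.1f} Hz, half width = {gamma:.1f} Hz")
```

The linearization weights small amplitudes more heavily than a direct nonlinear fit would, so for noisy measurements a nonlinear least-squares routine seeded with these estimates is likely the better choice.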

It is worth noting that this phenomenon would be difficult to capture with previous methods given the same experimental settings and pattern sequence choice. With a frame size of , the maximum frame rate of the cameras used is 2.4 kfps, which translates to a 3D imaging speed of 480 fps for single-camera-based PSFPP and 600 fps for multiview PSFPP. Neither provides sufficient imaging speed to visualize the glass vibration at the resonance frequency. In contrast, TIA improves the 3D imaging speed to 1.2 kfps, which is fully capable of sampling the glass vibration dynamics in the tested frequency range of 450–550 Hz.
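The speed comparison above reduces to simple arithmetic, sketched below. The number of frames each camera must record per 3D image is inferred from the quoted rates (five for single-camera PSFPP, four for multiview PSFPP, two for TIA) and is therefore an assumption, as is the use of the Nyquist criterion to decide whether a vibration frequency can be sampled.

```python
def imaging_rate_3d(camera_fps, frames_per_camera):
    """3D imaging rate when each camera needs `frames_per_camera` frames per 3D image."""
    return camera_fps / frames_per_camera

def can_sample(rate_3d, vibration_hz):
    """Nyquist criterion: the 3D rate must exceed twice the vibration frequency."""
    return rate_3d > 2.0 * vibration_hz

CAMERA_FPS = 2400.0  # maximum camera frame rate quoted in the text

rates = {
    "single-camera PSFPP": imaging_rate_3d(CAMERA_FPS, 5),  # assumed 5 frames per 3D image
    "multiview PSFPP": imaging_rate_3d(CAMERA_FPS, 4),      # assumed 4 frames per camera
    "TIA": imaging_rate_3d(CAMERA_FPS, 2),                  # half of the four patterns
}

for name, rate in rates.items():
    print(f"{name}: {rate:.0f} fps, samples 550 Hz: {can_sample(rate, 550.0)}")
```

Only the TIA rate exceeds twice the highest driving frequency of 550 Hz, which matches the statement that the other two schemes cannot resolve the vibration.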

3.5 E. Imaging of Glass Breakage

To further apply TIA–BLIP to recording nonrepeatable 3D dynamics, we imaged the process of glass breaking by a hammer (the full sequence is shown in

Fig. 7. TIA–BLIP of glass breakage. (a) Six reconstructed 3D images showing a glass cup broken by a hammer. (b) Evolution of 3D velocities of four selected fragments marked in the fourth and fifth panels in (a). (c) Evolution of the corresponding 3D accelerations of the four selected fragments.
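Velocities and accelerations such as those plotted in Fig. 7(b) and 7(c) can be estimated from tracked 3D positions by finite differences. The sketch below is one plausible approach, not necessarily the one used for the figure; the trajectory is synthetic and the 1 kfps frame rate is a hypothetical value.

```python
import numpy as np

def kinematics(positions_mm, frame_rate_hz):
    """Finite-difference velocity (mm/s) and acceleration (mm/s^2) of an (N, 3) trajectory."""
    dt = 1.0 / frame_rate_hz
    velocity = np.gradient(positions_mm, dt, axis=0, edge_order=2)
    acceleration = np.gradient(velocity, dt, axis=0, edge_order=2)
    return velocity, acceleration

# Synthetic fragment: constant 200 mm/s along x, free fall along z.
frame_rate_hz = 1000.0  # hypothetical 3D imaging speed
t = np.arange(20) / frame_rate_hz
positions_mm = np.column_stack([200.0 * t, np.zeros_like(t), -0.5 * 9800.0 * t ** 2])

velocity, acceleration = kinematics(positions_mm, frame_rate_hz)
speed = np.linalg.norm(velocity, axis=1)
print(speed[:3], acceleration[0])
```

Differentiation amplifies measurement noise, so with real reconstructions the trajectories would typically be smoothed or fitted before differencing.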

4. DISCUSSION AND CONCLUSIONS

We have developed TIA–BLIP with a kfps-level 3D imaging speed over an FOV of up to 180 mm × 130 mm (corresponding to ) in captured images. This technique implements TIA in multiview 3D PSFPP systems, which allows each camera to capture half of the sequence of the phase-shifting fringes. Leveraging the characteristics indicated in the intensity-matching condition [i.e., Eq. (5)], the newly developed algorithm applies constraints in geometry and phase to find the matching pair of points in the main and auxiliary cameras and guides phase unwrapping to extract the depth information. TIA–BLIP has empowered the 3D visualization of glass vibration induced by sound and the glass breakage by a hammer.

TIA–BLIP possesses many advantages. First, TIA eliminates the redundant capture of fringe patterns in data acquisition. The roles of the main camera and the auxiliary camera are interchangeable. Despite being demonstrated only with high-speed cameras, TIA–BLIP is a universal imaging paradigm easily adaptable to other multiview PSFPP systems. Second, TIA reduces the workload for each camera employed in the multiview systems. The freed capacity is used to enhance the technical specifications in PSFPP. In particular, at a certain frame rate, more pixels on the sensors of the deployed cameras can be used, which increases the imaging FOV. Alternatively, if the FOV is fixed, TIA supports these cameras to have higher frame rates, which thus increases the 3D imaging speed. Both advantages shed light on implementing TIA–BLIP with an array of cameras to simultaneously accomplish high accuracy and high-speed 3D imaging over a larger FOV. Third, the two cameras deployed in the current TIA–BLIP system are placed side by side. Compared with the existing dual-view PSFPP systems that mostly place the cameras at different sides of the projector, the arrangement in TIA–BLIP circumvents the intensity difference induced by the directional scattering light from the 3D object and reduces the shadow effect by occlusion. Both merits support robust pixel matching in the image reconstruction algorithm to recover 3D information on non-Lambertian surfaces.

Future work will be carried out in the following aspects. First, we plan to further improve TIA–BLIP’s imaging speed and FOV in three ways: by distributing the workload across an array of cameras, by implementing a faster DMD, and by using a more powerful laser. Moreover, we will implement depth-range estimation and online feedback to reduce the time in candidate discovery. Furthermore, parallel computing will be used to increase the speed of image reconstruction toward real-time operation [34]. Finally, to robustly image 3D objects with different sizes and with incoherent light sources, we will generate fringe patterns with adaptive periods by using a slit or a pinhole array as the spatial filter [35]. Automated size calculation [36] will also be integrated into the image processing software to facilitate the determination of the proper fringe period.

Besides technical improvements, we will continue to explore new applications of TIA–BLIP. For example, it could be integrated into structured illumination microscopy [37] and frequency-resolved multidimensional imaging [38]. TIA–BLIP could also be applied to the dynamic characterization of glass interacting with external forces in nonrepeatable safety test analysis [39–41]. As another example, TIA–BLIP could trace and recognize hand gestures in 3D space to provide information for human–computer interaction [42]. Furthermore, in robotics, TIA–BLIP could provide dual-view 3D vision for object tracking and reaction guidance [43]. Finally, TIA–BLIP can function as an imaging accelerometer for vibration monitoring in rotating machinery [44] and for behavior quantification in biological science [45].

5 Acknowledgment

Acknowledgment. The authors thank Xianglei Liu for experimental assistance.

[6]

[8] J. Geng. Structured-light 3D surface imaging: a tutorial. Adv. Opt. Photon., 2011, 3: 128-160.

[30]

[32]

[33]

[41]

[44] R. B. Randall. State of the art in monitoring rotating machinery-part 1. Sound Vibr., 2004, 38: 14-21.

Cheng Jiang, Patrick Kilcullen, Yingming Lai, Tsuneyuki Ozaki, Jinyang Liang. High-speed dual-view band-limited illumination profilometry using temporally interlaced acquisition[J]. Photonics Research, 2020, 8(11): 11001808.