Variable Weight Cost Aggregation Algorithm for Stereo Matching Based on Horizontal Tree Structure

1 Introduction

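Stereo matching recovers the three-dimensional depth of objects by finding pixel correspondences between two or more images of the same scene taken from different viewpoints. It is one of the long-standing and still unsolved problems in computer vision [1]. Scharstein et al. [2] divide stereo matching algorithms into two classes, global and local. Current global algorithms usually take local color and structure information into account, build a global energy cost function for the image, and assign a disparity to each pixel through optimization methods such as belief propagation [3], graph cuts [4], and dynamic programming [5]. Local algorithms obtain each pixel's disparity through cost aggregation over a window or a tree structure, for example an adaptive window [6]. Although they are less accurate than global algorithms, they have low computational complexity, run efficiently, and are easy to implement. A local algorithm consists of four main steps: matching cost computation, cost aggregation, disparity computation, and disparity refinement.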

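Cost aggregation is the most important step in a local algorithm. Its underlying assumption is that disparity is smooth within a local image patch, so it can be viewed as filtering the matching cost. The simplest and fastest local filter is the box filter, but it blurs disparity at image edges, an effect known as foreground fattening. Edge-preserving bilateral filtering [7] and guided filtering [8] were later introduced into cost aggregation and yield disparity maps comparable to those of global methods. Local methods, however, require the size of the support window to be fixed in advance: a large window over-smooths edge regions, while a small window causes mismatches in weakly textured regions. To remove this dependence on a predefined window size in the aggregation stage, non-local methods based on recursion [9], horizontal trees [10-11], minimum spanning trees [12], and segment trees [13-14] have been proposed in succession. For any pixel, these methods take the whole image as the support window, which avoids the support window being too small in textureless regions. Compared with local methods, non-local methods considerably improve both running speed and disparity accuracy in textureless regions. Most current non-local methods, however, use only color information to judge whether neighboring pixels share the same disparity. They ignore the cases, common in real scenes, where pixels have the same color but different disparities, or different colors but the same disparity. This leads to inaccurate disparity estimates in background regions and along boundaries between regions of the same color.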
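As a minimal illustration of cost aggregation viewed as filtering, the sketch below box-filters a toy cost volume along one scanline and then picks the lowest-cost disparity per pixel. The function names `box_aggregate` and `winner_take_all` and the toy costs are assumptions made for this example, not part of the paper.

```python
def box_aggregate(cost_row, radius):
    """Average the matching cost over a (2 * radius + 1)-pixel window."""
    n = len(cost_row)
    out = []
    for x in range(n):
        lo, hi = max(0, x - radius), min(n, x + radius + 1)
        window = cost_row[lo:hi]
        out.append(sum(window) / len(window))
    return out


def winner_take_all(cost_volume):
    """Pick, for every pixel x, the disparity d with the lowest cost_volume[d][x]."""
    width = len(cost_volume[0])
    return [
        min(range(len(cost_volume)), key=lambda d: cost_volume[d][x])
        for x in range(width)
    ]


# Toy cost volume (hypothetical values): raw_cost[d][x] for disparities 0 and 1.
raw_cost = [
    [1.0, 1.0, 8.0, 1.0, 5.0, 5.0],  # d = 0
    [5.0, 5.0, 2.0, 5.0, 1.0, 1.0],  # d = 1
]
aggregated = [box_aggregate(row, radius=1) for row in raw_cost]
disparity_raw = winner_take_all(raw_cost)    # [0, 0, 1, 0, 1, 1]
disparity_agg = winner_take_all(aggregated)  # [0, 0, 0, 1, 1, 1]
```

In this toy case the isolated outlier at x = 2 is removed, but the pixel at x = 3, next to the disparity edge, flips to the neighboring disparity. This is a small instance of the boundary blurring that motivates edge-aware weights.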

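Disparity refinement is the last step of a stereo matching algorithm, and its accuracy determines the final accuracy of stereo matching. Weighted median filtering [15-16] is a widely used refinement method: it builds a weighted histogram of the disparities in a neighborhood and takes the disparity at the median as the disparity of the current pixel, which effectively suppresses erroneous disparities. Yang [12] proposed a non-local refinement method based on the minimum spanning tree: a left-right consistency check divides all pixels into stable and unstable points, a new matching cost volume is built from the stable points, and a second cost aggregation yields the final disparity. Compared with weighted median filtering, this method is faster and more accurate, but it discards pixels that fail the left-right consistency check even though their disparity estimates are correct, so those pixels contribute nothing to the new matching cost volume.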
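The weighted median idea can be sketched in a few lines. This is a plain, non-optimized version for a single neighborhood, not the constant-time filter of Ref. [15]; the name `weighted_median` and the sample weights are assumptions for illustration.

```python
def weighted_median(values, weights):
    """Return the value at which the cumulative weight first reaches half the total."""
    pairs = sorted(zip(values, weights))
    half = sum(weights) / 2.0
    acc = 0.0
    for value, weight in pairs:
        acc += weight
        if acc >= half:
            return value
    return pairs[-1][0]


# Neighborhood disparities with one outlier (42) that has a low support weight.
neighbor_disp = [10, 10, 42, 11, 10]
neighbor_weight = [1.0, 0.8, 0.1, 0.9, 1.0]
refined = weighted_median(neighbor_disp, neighbor_weight)  # 10
```

Because the outlier carries little weight, it cannot move the median, which is why this filter suppresses isolated wrong disparities.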

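To address the fact that a color boundary in an image is not necessarily a disparity boundary, this paper proposes a variable weight cost aggregation algorithm for stereo matching based on a horizontal tree structure. The algorithm improves on conventional non-local methods, which compute the weights between neighboring pixels from color information alone, by introducing the initial disparity obtained after cost aggregation. A second cost aggregation then gives good matching results in boundary regions, with higher accuracy than the result obtained without the initial disparity. For the disparity refinement step, an improved construction of the matching cost volume is proposed that raises disparity accuracy without additional computation.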

2 Algorithm Description

2.1 Matching Cost Computation

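The matching cost measures the similarity between corresponding pixels, at different disparities, in two or more images of the same scene taken from different viewpoints. The matching cost is computed using

For each pixel in the image (

where

Using Eq. (2) to compute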

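2.2 Cost Aggregation

Because the matching cost of a single pixel is not very discriminative and is sensitive to noise, cost aggregation uses the information of neighboring pixels, under the assumption of local disparity smoothness, to make disparities more distinguishable. Cost aggregation can be expressed as

where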

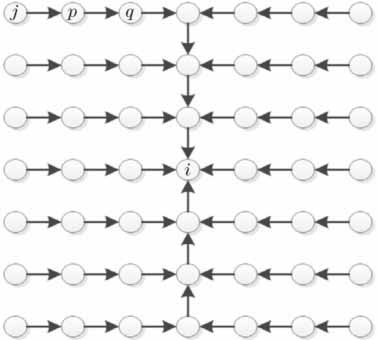

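To compute the support weight between each pair of pixels, the weight-propagation cost aggregation algorithm based on a horizontal tree structure [10] is adopted. First, based on the guidance image

where

where R, G, and B are the three channels of a pixel;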

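Most cost aggregation methods only filter the matching cost at each disparity independently and do not consider that slanted planes violate the assumption of similar disparity within a local image region. The method proposed in Ref. [11] is therefore adopted: a regularization term is introduced into cost aggregation and, based on the tree structure built above, the matching cost is aggregated along

where

where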
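The weight propagation of Section 2.2 can be illustrated with a two-pass recursive aggregation along a single scanline. This is only a sketch under stated assumptions: the edge weight is taken as exp(-ΔI/σ) with ΔI the largest absolute RGB channel difference between neighbors, the names `edge_weights`, `aggregate_scanline`, and `sigma` are hypothetical, and neither the vertical propagation of the full horizontal tree nor the regularization term of Ref. [11] is included.

```python
import math


def edge_weights(row_rgb, sigma):
    """weights[i] links pixel i and pixel i + 1 (assumed form: exp(-max channel diff / sigma))."""
    return [
        math.exp(-max(abs(a - b) for a, b in zip(row_rgb[x], row_rgb[x - 1])) / sigma)
        for x in range(1, len(row_rgb))
    ]


def aggregate_scanline(cost, weights):
    """Aggregate the cost of one disparity level along one row in two recursive passes."""
    n = len(cost)
    left = cost[:]                      # left-to-right pass
    for x in range(1, n):
        left[x] += weights[x - 1] * left[x - 1]
    right = cost[:]                     # right-to-left pass
    for x in range(n - 2, -1, -1):
        right[x] += weights[x] * right[x + 1]
    # Each pixel's own cost was counted in both passes, so subtract it once.
    return [left[x] + right[x] - cost[x] for x in range(n)]


row = [(10, 10, 10), (10, 10, 10), (200, 10, 10)]  # a strong color edge before the last pixel
w = edge_weights(row, sigma=10.0)
agg = aggregate_scanline([1.0, 1.0, 1.0], [1.0, 0.0])  # [2.0, 2.0, 1.0]
```

With a weight of zero across the edge, the last pixel receives no support from the other two, while the first two pixels fully support each other. Every pixel is still reached in linear time regardless of how far the support extends.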

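2.3 Cost Re-aggregation

Selecting the support region for pixel weights from color information alone gives inaccurate disparity estimates at image boundaries, and the initial disparity map still contains many mismatches. The initial disparity is therefore introduced to rebuild the horizontal tree structure and improve matching accuracy. Unlike the cost aggregation in Section 2.2, the support weight between two adjacent nodes in the rebuilt horizontal tree for cost re-aggregation is defined as

where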

Fig. 2. Support weights for selected regions (the red box marks the central pixel; the brightness of each neighboring pixel represents its support weight for the central pixel). (a) Local image patch; (b) support weight map without cost re-aggregation; (c) support weight map with cost re-aggregation

large, and computing the support weights from color information alone gives, as shown in

As shown in

Fig. 3. Disparity maps before and after cost re-aggregation. (a) Reindeer left image; (b) Reindeer right image; (c) left disparity map without cost re-aggregation; (d) left disparity map with cost re-aggregation
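The effect of letting the initial disparity influence the support weight can be shown with a toy weight function. The paper's actual weight definition is not reproduced in this text, so the blended form below, its name `variable_weight`, and the parameters `sigma_c`, `sigma_d`, and `alpha` are purely hypothetical and serve only to illustrate the idea of Section 2.3.

```python
import math


def color_only_weight(color_diff, sigma_c=10.0):
    """Conventional weight that depends on the color difference alone."""
    return math.exp(-color_diff / sigma_c)


def variable_weight(color_diff, disp_diff, sigma_c=10.0, sigma_d=1.0, alpha=0.5):
    """Hypothetical weight that blends color difference with initial-disparity difference."""
    return math.exp(-(alpha * color_diff / sigma_c + (1.0 - alpha) * disp_diff / sigma_d))


# Same color, different initial disparity: support should drop.
same_color = (color_only_weight(0.0), variable_weight(0.0, 4.0))
# Different color, same initial disparity: support should rise.
same_disp = (color_only_weight(30.0), variable_weight(30.0, 0.0))
```

Under this assumed form, two neighbors with identical color but a disparity gap of 4 lose most of their mutual support, while two neighbors with different colors but equal initial disparity support each other more than a color-only weight would allow.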

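2.4 Disparity Refinement

The initial disparity obtained by the above method contains many mismatches in occluded regions (regions that cannot be observed in both the left and right images simultaneously) and needs refinement. First, mismatched outliers are detected by a left-right consistency check, with the criterion

where

where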

Fig. 4. Principle of disparity refinement. (a) Dolls left image; (b) Dolls right image; (c) left-right consistency check; (d) initial left disparity map without disparity refinement

![Disparity maps with different disparity refinement methods. (a) Disparity refinement method in Ref. [11]; (b) improved disparity refinement method](/richHtml/gxxb/2018/38/1/0115002/img_5.jpg)

Fig. 5. Disparity maps with different disparity refinement methods. (a) Disparity refinement method in Ref. [11]; (b) improved disparity refinement method
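The left-right consistency check used at the start of Section 2.4 can be sketched for one scanline as follows. The sketch uses the common convention that a left pixel x with disparity d corresponds to right pixel x - d; the function name `left_right_check` and the tolerance parameter `tol` are assumptions, and the paper's exact criterion is not reproduced in this text.

```python
def left_right_check(disp_left, disp_right, tol=0):
    """Mark a left pixel as stable if the right view agrees on its disparity."""
    stable = []
    for x, d in enumerate(disp_left):
        xr = x - d  # matching column in the right image
        ok = 0 <= xr < len(disp_right) and abs(d - disp_right[xr]) <= tol
        stable.append(ok)
    return stable


disp_left = [0, 1, 1, 2, 2]
disp_right = [1, 1, 2, 2, 0]
stable = left_right_check(disp_left, disp_right)
# stable == [False, True, True, False, True]
```

Pixels marked `False` are the unstable points, typically occlusions or mismatches, whose disparities are re-estimated during refinement.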

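3 Experimental Results and Analysis

To verify the effectiveness of the algorithm, images provided by the Middlebury benchmark are used for testing. The computer has a Pentium E6700 CPU clocked at 3.20 GHz and 2 GB of memory. The parameters used by the algorithm in the experiments are shown in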

Table 1. Parameters of the proposed stereo matching algorithm

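3.1 Performance of the Cost Aggregation Algorithm

To verify the performance of the proposed cost aggregation algorithm,

The proposed algorithm is compared with recent state-of-the-art local stereo matching algorithms, including minimum spanning tree (MST) [12], guided filtering (GF) [8], cross-scale stereo matching [17] [which includes cross-scale minimum spanning tree (CS-MST) and cross-scale segment tree (CS-ST)], and the cost aggregation stereo matching algorithm based on horizontal-tree smoothness regularization (LSECVR) [11]. The evaluation criterion is the matching accuracy of the initial disparity map after cost aggregation in non-occluded regions, with an error threshold of 1. To show the comparison more clearly, mismatched pixels are marked in red. The results are shown in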

![Disparity maps obtained by different cost aggregation algorithms (mismatched pixels are marked in red). (a) Ground-truth disparity map; (b) minimum spanning tree; (c) cross-scale minimum spanning tree; (d) guided filtering; (e) cross-scale segment tree; (f) cost aggregation algorithm in Ref. [11]; (g) improved cost aggregation algorithm](/richHtml/gxxb/2018/38/1/0115002/img_6.jpg)

Fig. 6. Disparity maps obtained by different cost aggregation algorithms (mismatched pixels are marked in red). (a) Ground-truth disparity map; (b) minimum spanning tree; (c) cross-scale minimum spanning tree; (d) guided filtering; (e) cross-scale segment tree; (f) cost aggregation algorithm in Ref. [11]; (g) improved cost aggregation algorithm
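The evaluation criterion described above, the percentage of mismatched pixels in non-occluded regions with an error threshold of 1, can be computed as in the following sketch. The function name `bad_pixel_rate`, the flattened list inputs, and the sample values are assumptions for illustration and are not taken from the Middlebury evaluation code.

```python
def bad_pixel_rate(disp, truth, nonocc_mask, threshold=1.0):
    """Percentage of evaluated pixels whose disparity error exceeds the threshold."""
    total = 0
    bad = 0
    for d, t, visible in zip(disp, truth, nonocc_mask):
        if not visible:      # skip occluded pixels
            continue
        total += 1
        if abs(d - t) > threshold:
            bad += 1
    return 100.0 * bad / total if total else 0.0


disp = [10, 12, 15, 20, 7]
truth = [10, 10, 15, 18, 9]
nonocc = [True, True, True, False, True]
error_percent = bad_pixel_rate(disp, truth, nonocc)  # 50.0
```

Of the four non-occluded pixels in this toy example, two differ from the ground truth by more than one disparity level, giving an error of 50%.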

Table 2. Matching error of different stereo matching methods in non-occluded regions without disparity refinement (unit: %)

ranking order. From

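3.2 Performance of the Disparity Refinement Algorithm

To verify the performance of the proposed disparity refinement algorithm, it is compared with the refinement method of Ref. [11]. Matching cost computation and cost aggregation are the same as in this paper, so that differences in these two steps do not affect the results. Tests are run on 31 image pairs from the Middlebury dataset. The results are shown in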

Table 3. Matching error of different stereo matching methods over the whole image with disparity refinement (unit: %)

![Experimental results on the Middlebury benchmark images. (a) Left reference images; (b) right reference images; (c) left ground-truth disparity maps; (d) disparity refinement method in Ref. [11]; (e) improved disparity refinement method](/richHtml/gxxb/2018/38/1/0115002/img_7.jpg)

Fig. 7. Experimental results on the Middlebury benchmark images. (a) Left reference images; (b) right reference images; (c) left ground-truth disparity maps; (d) disparity refinement method in Ref. [11]; (e) improved disparity refinement method

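4 Conclusion

To address mismatches of the initial disparity at color boundaries in the image, a variable weight cost aggregation algorithm for stereo matching based on a horizontal tree structure is proposed. The disparity map obtained from the initial cost aggregation is introduced into the construction of the horizontal weight tree, which effectively alleviates mismatches in regions with the same color but different depths and in regions with different colors but the same depth. In the disparity refinement stage, a disparity-dependent weight coefficient is introduced for the matching cost volume, which effectively reduces the mismatch rate in regions where the left and right disparities are inconsistent. The proposed algorithm achieves higher matching accuracy in non-occluded regions than other filter-based methods, whereas its accuracy in occluded regions still falls short of some global algorithms. Future work will focus on improving matching accuracy in occluded regions.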

[1] Bleyer M, Breiteneder C. Stereo matching: state-of-the-art and research challenges[M]. Advanced topics in computer vision. London: Springer, 2013: 143-179.

[5] Bleyer M, Gelautz M. Simple but effective tree structures for dynamic programming-based stereo matching[C]∥International Conference on Computer Vision Theory and Applications, Funchal, Portugal, 2008: 415-422.

[6] Zhu Shiping, Li Zheng. Stereo matching algorithm based on improved gradient and adaptive window[J]. Acta Optica Sinica, 2015, 35(1): 0110003.

[9] Cigla C. Recursive edge-aware filters for stereo matching[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2015: 27-34.

[12] Yang Q. A non-local cost aggregation method for stereo matching[C]∥IEEE Conference on Computer Vision and Pattern Recognition, 2012: 1402-1409.

[13] Mei X, Sun X, Dong W M, et al. Segment-tree based cost aggregation for stereo matching[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2013: 313-320.

[14] Yao P, Zhang H, Xue Y, et al. Segment-tree based cost aggregation for stereo matching with enhanced segmentation advantage[C]∥IEEE International Conference on Acoustics, Speech and Signal Processing, 2017: 2027-2031.

[15] Ma Z Y, He K M, Wei Y C, et al. Constant time weighted median filtering for stereo matching and beyond[C]∥Proceedings of the IEEE International Conference on Computer Vision, 2013: 49-56.

[16] Sun X, Mei X, Jiao S H, et al. Stereo matching with reliable disparity propagation[C]∥IEEE International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission, 2011: 132-139.

[17] Zhang K, Fang Y, Min D, et al. Cross-scale cost aggregation for stereo matching[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014: 1590-1597.


Jianjian Peng, Ruilin Bai. Variable Weight Cost Aggregation Algorithm for Stereo Matching Based on Horizontal Tree Structure[J]. Acta Optica Sinica, 2018, 38(1): 0115002.