In situ optical backpropagation training of diffractive optical neural networks

1. INTRODUCTION

Artificial neural networks (ANNs) have achieved significant success in various machine learning tasks [1], ranging from computer science applications (e.g., image classification [2], speech recognition [3], game playing [4]) to scientific research (e.g., medical diagnostics [5], intelligent imaging [6], behavioral neuroscience [7]). The explosive growth of machine learning is due primarily to recent advances in neural network architectures and hardware computing platforms, which enable us to train larger-scale and more complicated models [8,9]. Considerable effort has been devoted to constructing application-specific ANN architectures with semiconductor electronics [10,11], whose performance is inherently limited by the fundamental tradeoff between energy efficiency and computing power in electronic computing [12]. As the scale of electronic transistors approaches its physical limit, it is necessary to investigate and develop next-generation computing modalities for the post-Moore's law era [13,14]. Optical computing, which uses photons instead of electrons as the information carrier, promises high energy efficiency, low crosstalk, light-speed processing, and massive parallelism. It may overcome problems inherent in electronics and is considered a disruptive technology for modern computing [15,16].

Recent works on optical neural networks (ONNs) have made substantial progress in performing large-scale complex computing and achieving high optical integrability by using state-of-the-art intelligent design approaches and fabrication techniques [17–

Proper training of the ANN with algorithms such as error backpropagation [41] is the most critical aspect of building a reliable model and ensuring accurate network inference. Current ONN architectures are typically trained in silico on an electronic computer to obtain their designs for physical implementation. By modeling the light–matter interaction with computer-aided intelligent design, the network parameters are learned and the structure to be deployed on photonic devices is determined. However, because of the high computational complexity of network training, such in silico approaches fail to exploit the speed, efficiency, and massive parallelism of optical computing, resulting in long training times and limited scalability. For example, training a five-layer diffractive ONN with 0.2 million neurons as a digit classifier takes approximately 8 h on a high-end modern desktop computer [22]. Furthermore, error sources in practical implementations cause the physical system to deviate from the in silico trained model and degrade inference accuracy. In situ training, in contrast, can overcome these limitations by physically implementing the training process directly inside the optical system. Recent works have demonstrated in situ backpropagation for training the optical interference neural network [42] and physical recurrent neural networks [43,44]. Nevertheless, these approaches either require a strict lossless assumption for calculating the time-reversed adjoint field or work only for real-valued networks that model the amplitude of the field; they therefore cannot be applied to the diffractive ONN, which is inherently complex valued and subject to diffraction loss. Another line of work based on volumetric holograms [45,46] requires an undesirable light beam for hologram recording and a training batch size of 1, which dramatically restricts network scalability and computational complexity.
In this work, we propose an approach for in situ training of large-scale diffractive ONNs for complex inference tasks that removes the lossless assumption by modeling and measuring the forward and backward propagations of the diffractive optical field to calculate the gradient.

The proposed optical error backpropagation for in situ training of the diffractive ONN is based on the principles of light reciprocity and phase conjugation, which allow the network residual errors to be optically backpropagated by backward propagating the error optical field. We demonstrate that the gradient of the network at individual diffractive layers can be calculated successively, and in a highly parallel manner, from measurements of the forward and backward propagated optical fields. We design, and evaluate by simulation, a reprogrammable system built from off-the-shelf photonic equipment for implementing the proposed in situ optical training, in which phase-shifting digital holography is used for optical field measurement and the error optical field is generated by a complex field generation module. Unlike in silico training, the proposed optical learning architecture programs the multilayer SLMs to update the network's diffractive modulation coefficients iteratively during training, so it can adapt to system imperfections, accelerate training, and improve the training energy efficiency of the core computing modules. In addition, diffractive ONNs implemented with multilayer SLMs can be easily reconfigured to perform different inference tasks at the speed of light. Numerical simulations of the proposed reconfigurable diffractive ONN system demonstrate the high accuracy of our in situ optical training method for different applications, including light-speed object classification, optical matrix-vector multiplication, and all-optical imaging through scattering media.

2. OPTICAL ERROR BACKPROPAGATION

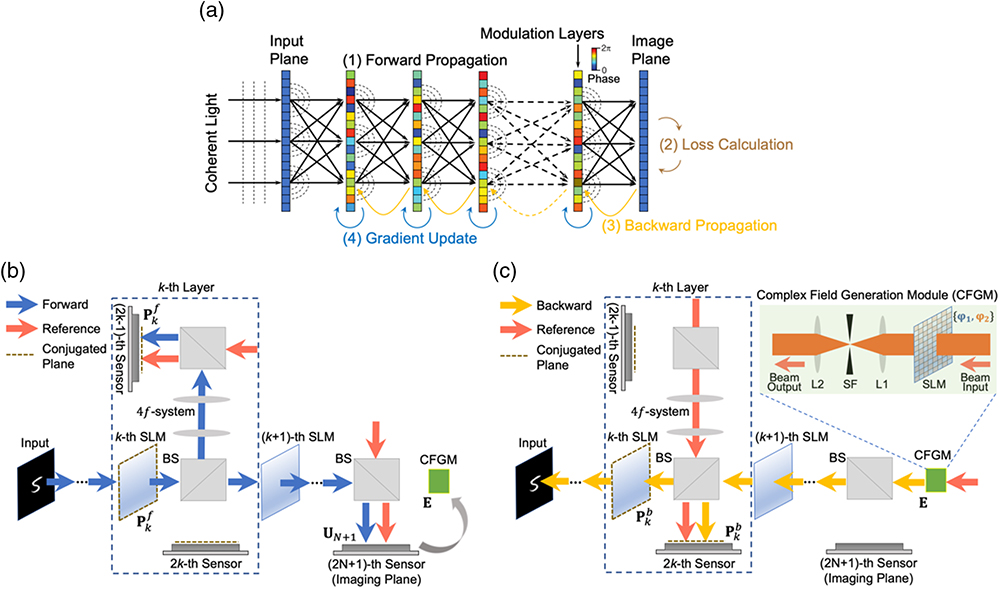

The diffractive ONN framework proposed in Ref. [22] consists of multiple cascaded diffractive modulation layers, as shown in Fig.

Fig. 1. Optical training of diffractive ONN. (a) The diffractive ONN architecture is physically implemented by cascading spatial light modulators (SLMs), which can be programmed to tune the diffractive coefficients of the network toward a specific task. This programmability makes in situ optical training of diffractive ONNs with error backpropagation algorithms possible. Each training iteration for updating the phase modulation coefficients of the diffractive layers includes four steps: forward propagation, error calculation, backward propagation, and gradient update. (b) The forward propagated optical field is modulated by the phase coefficients of the multilayer SLMs and measured by image sensors with phase-shifted reference beams at the output image plane as well as at the individual layers. The image sensor is placed at a plane conjugate to the diffractive layer, relayed by a 1:1 beam splitter (BS) and a

In this section, we derive the optical error backpropagation model for the linear diffractive ONN; its extension to the nonlinear diffractive ONN can be found in Section

As shown in Fig.
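Because the derivation in this section did not survive extraction, the sketch below shows, under our own assumptions, how a layer gradient can be assembled pixel by pixel from a forward field and a backward-propagated error field. It assumes thin phase-only layers, angular-spectrum free-space propagation with periodic boundaries, and a squared-error loss on the complex output field; the grid parameters and the names `propagate`, `adjoint`, and `gradients` are hypothetical, and this is not a reproduction of the paper's equations. With a_k the field just after layer k and g_k the error field carried back to that plane, the gradient is dL/dphi_k = -2 Im{conj(g_k) * a_k}, which the script checks against a central finite difference.

```python
import numpy as np

# Hypothetical simulation grid (not the paper's parameters): N x N pixels of pitch DX,
# wavelength WL, and layer spacing Z. Periodic (FFT) boundaries are assumed.
N, DX, WL, Z = 32, 8e-6, 698e-9, 5e-3


def propagate(u, z=Z):
    """Angular-spectrum free-space propagation over distance z (evanescent waves dropped)."""
    f = np.fft.fftfreq(N, d=DX)
    fx, fy = np.meshgrid(f, f, indexing="ij")
    arg = 1.0 / WL**2 - fx**2 - fy**2
    h = np.exp(2j * np.pi * z * np.sqrt(np.maximum(arg, 0.0))) * (arg > 0)
    return np.fft.ifft2(np.fft.fft2(u) * h)


def adjoint(u):
    """Hermitian adjoint of propagate(): propagation over -Z (conjugate transfer function)."""
    return propagate(u, -Z)


def forward(phases, u_in):
    """Forward pass; returns the output field and the modulated field a_k at every layer."""
    a, fields = u_in, []
    for phi in phases:
        a = np.exp(1j * phi) * propagate(a)  # propagate to layer k, then phase-modulate
        fields.append(a)
    return propagate(a), fields


def loss(phases, u_in, target):
    """Assumed loss: squared error between output and target complex fields."""
    out, _ = forward(phases, u_in)
    return np.sum(np.abs(out - target) ** 2)


def gradients(phases, u_in, target):
    """dL/dphi_k = -2 Im{conj(g_k) * a_k}, with g_k the error field carried back to layer k."""
    out, fields = forward(phases, u_in)
    g = adjoint(out - target)  # error field dL/d conj(out), carried back to the last layer
    grads = [None] * len(phases)
    for k in reversed(range(len(phases))):
        grads[k] = -2.0 * np.imag(np.conj(g) * fields[k])  # pixel-wise, all pixels at once
        g = adjoint(np.exp(-1j * phases[k]) * g)  # undo layer k's modulation, step back
    return grads


# Check one gradient entry against a central finite difference.
rng = np.random.default_rng(0)
phases = [rng.uniform(0, 2 * np.pi, (N, N)) for _ in range(3)]
u_in = rng.normal(size=(N, N)).astype(complex)
target = rng.normal(size=(N, N)) + 1j * rng.normal(size=(N, N))
grads = gradients(phases, u_in, target)

k, i, j, eps = 1, 5, 7, 1e-5
plus = [p.copy() for p in phases]
minus = [p.copy() for p in phases]
plus[k][i, j] += eps
minus[k][i, j] -= eps
fd = (loss(plus, u_in, target) - loss(minus, u_in, target)) / (2 * eps)
print(f"adjoint: {grads[k][i, j]:.6f}  finite difference: {fd:.6f}")
```

In this idealized model, the same g_k (up to complex conjugation) is what one would obtain by launching the phase-conjugated error field backward through the layers, since the free-space step is reciprocal and a thin phase layer acts identically in both directions; this is one way to read the reciprocity and phase-conjugation principle cited in the Introduction.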

3. EXPERIMENTAL SYSTEM DESIGN AND CONFIGURATION

We propose an optical system design composed of off-the-shelf photonic equipment to implement the optical error backpropagation for in situ training of diffractive ONN, as shown in Figs.

A. Measuring the Network Optical Field

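We adopted four-step phase-shifting digital holography [47] to measure the optical field at individual layers. Assume $U^k = A^k e^{j\varphi^k}$ represents the forward propagated optical field or the backward propagated optical field at the $k$-th layer, where $A^k$ refers to its amplitude and $\varphi^k$ refers to its phase. The network optical field was interfered with a four-step phase-shifted reference beam $R_i = A_R e^{j\theta_i}$, i.e., with wavefront phase values $\theta_i \in \{0, \pi/2, \pi, 3\pi/2\}$ ($i = 1, \dots, 4$), where $A_R$ is the amplitude with a constant value. The corresponding interference intensity distributions $I_i^k = |U^k + R_i|^2$ were sequentially measured with an image sensor, from which the amplitude and phase of the optical field at the $k$-th layer can be accurately calculated as

$$A^k = c\sqrt{\left(I_1^k - I_3^k\right)^2 + \left(I_2^k - I_4^k\right)^2}, \qquad \varphi^k = \arg\left[\left(I_1^k - I_3^k\right) + j\left(I_2^k - I_4^k\right)\right],$$

where $c$ is a constant value ($c = 1/(4A_R)$ under this convention).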
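A minimal numerical check of four-step phase-shifting recovery, assuming an ideal noise-free sensor, a real reference amplitude A_R, and reference phase steps 0, pi/2, pi, 3pi/2 (the sign convention is our assumption). It relies on I1 - I3 = 4 A_R Re{U} and I2 - I4 = 4 A_R Im{U}; the helper names are hypothetical.

```python
import numpy as np

THETAS = (0.0, np.pi / 2, np.pi, 3 * np.pi / 2)  # reference phase steps theta_1..theta_4


def interferograms(field, ref_amp=1.0):
    """Ideal, noise-free intensities I_i = |U + A_R exp(j theta_i)|^2 recorded by the sensor."""
    return [np.abs(field + ref_amp * np.exp(1j * t)) ** 2 for t in THETAS]


def reconstruct(intensities, ref_amp=1.0):
    """Four-step phase-shifting recovery of the amplitude and phase of U."""
    i1, i2, i3, i4 = intensities
    c = 1.0 / (4.0 * ref_amp)  # constant scale factor fixed by the reference amplitude
    u = c * ((i1 - i3) + 1j * (i2 - i4))  # I1 - I3 = 4 A_R Re U, I2 - I4 = 4 A_R Im U
    return np.abs(u), np.angle(u)


rng = np.random.default_rng(0)
field = rng.normal(size=(16, 16)) + 1j * rng.normal(size=(16, 16))
amp, phase = reconstruct(interferograms(field, ref_amp=2.0), ref_amp=2.0)
```

If the reference amplitude assumed in `reconstruct` differs from the true one, the recovered amplitude is off by a constant factor while the phase is unaffected, which is the kind of constant scale factor the update step has to tolerate.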

B. Generating the Error Optical Field

The error optical field was generated with a complex field generation module (CFGM) that acts as the source of a backward propagated optical field. In this paper, we implemented it with a phase-only field generator SLM and a low-pass filtering system [48], as shown in Fig.
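The encoding used together with the low-pass filter of Ref. [48] is not described in this excerpt, so the sketch below substitutes double-phase encoding, one common way to produce a complex field from a phase-only SLM, purely as a stand-in. It assumes the target field is normalized so that |U| <= 1, a checkerboard interleaving of the two phases, and an ideal circular low-pass filter in place of a 4f relay with an iris; all names are hypothetical. The checkerboard moves the unwanted term to the corners of the Fourier plane, where the filter removes it.

```python
import numpy as np


def double_phase_encode(target):
    """Phase-only SLM pattern for a complex field with |target| <= 1 (double-phase encoding):
    target = (exp(j*theta1) + exp(j*theta2)) / 2, with theta1/theta2 on a checkerboard."""
    amp = np.clip(np.abs(target), 0.0, 1.0)
    phi = np.angle(target)
    theta1 = phi + np.arccos(amp)
    theta2 = phi - np.arccos(amp)
    yy, xx = np.indices(target.shape)
    return np.where((yy + xx) % 2 == 0, theta1, theta2)


def low_pass(field, cutoff=0.25):
    """Ideal circular low-pass filter (cutoff in cycles/pixel, square field assumed),
    standing in for a 4f relay with an iris that blocks the checkerboard carrier."""
    f = np.fft.fftfreq(field.shape[0])
    fx, fy = np.meshgrid(f, f, indexing="ij")
    return np.fft.ifft2(np.fft.fft2(field) * (np.hypot(fx, fy) < cutoff))


# Smooth test field: Gaussian amplitude (peak 0.8) with a slowly varying phase.
n = 64
yy, xx = np.indices((n, n))
amp = 0.8 * np.exp(-((xx - n / 2) ** 2 + (yy - n / 2) ** 2) / (2 * 6.0**2))
target = amp * np.exp(2j * np.sin(2 * np.pi * xx / n))
slm_phase = double_phase_encode(target)
generated = low_pass(np.exp(1j * slm_phase))
rel_err = np.linalg.norm(generated - target) / np.linalg.norm(target)
print(f"relative error after low-pass filtering: {rel_err:.2e}")
```

Normalizing an arbitrary error field to |U| <= 1 before encoding is one place where a constant scale factor on the generated error field naturally appears.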

With the proposed system design to measure the forward propagated input optical field and the backward propagated error optical field for in situ training of the network, the gradient at each diffractive layer is calculated successively, and the phase coefficients of the SLMs are updated iteratively by adding a phase increment given by the calculated gradient scaled by a constant network parameter that determines the learning rate of the training. Because both the measurement of the network optical field and the generation of the error optical field introduce constant scale factors, we tune the learning rate during training so that the phase coefficients are updated with an appropriate step size. Unlike the brute-force in situ training approach [20], which computes the gradient of ONNs by sequentially perturbing the coefficient of each individual neuron, the optical error backpropagation approach proposed in this paper tunes all network coefficients in parallel. This allows large-scale network coefficients to be trained effectively and significantly enhances the scalability of the diffractive ONN. Furthermore, since our framework directly measures the optical field of the network at individual layers, it avoids interfering the forward and backward optical fields with each other and eliminates the lossless assumption used in Ref. [42]. This is important for the optical training of diffractive ONNs because free-space light propagation inherently causes diffraction loss at the network periphery.
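The text states only that the learning rate is tuned to absorb the unknown constant scale factors. As one hypothetical way to do this, the sketch below sets the learning rate at every step so that the largest phase increment equals a fixed `max_step`, which makes the update independent of any positive scale factor on the measured gradient. The rule and the parameter values are our assumptions, not the authors' schedule.

```python
import numpy as np


def update_phases(phase, measured_grad, max_step=0.1):
    """One gradient-descent step on SLM phases (radians).

    The measured gradient carries an unknown positive scale factor (field measurement
    and error-field generation), so the learning rate is set per step such that the
    largest phase increment equals max_step; the result is then scale-independent."""
    peak = np.max(np.abs(measured_grad))
    if peak == 0.0:
        return phase.copy()
    lr = max_step / peak  # effective learning rate for this iteration
    return np.mod(phase - lr * measured_grad, 2 * np.pi)  # SLM phases wrap at 2*pi


rng = np.random.default_rng(0)
phase = rng.uniform(0, 2 * np.pi, (8, 8))
grad = rng.normal(size=(8, 8))
step_a = update_phases(phase, grad)
step_b = update_phases(phase, 37.5 * grad)  # same gradient, different unknown scale
```

Note that all pixels of a layer are updated from one gradient map, in contrast to perturbation-based training, which needs a separate measurement per neuron.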

4. NUMERICAL SIMULATIONS AND APPLICATIONS

In this section, we numerically validate the effectiveness of the proposed optical error backpropagation and demonstrate the success of in situ optical training of simulated diffractive ONNs for different applications, including light-speed object classification, optical matrix-vector multiplication, and all-optical imaging through scattering media.

A. Light-Speed Object Classification

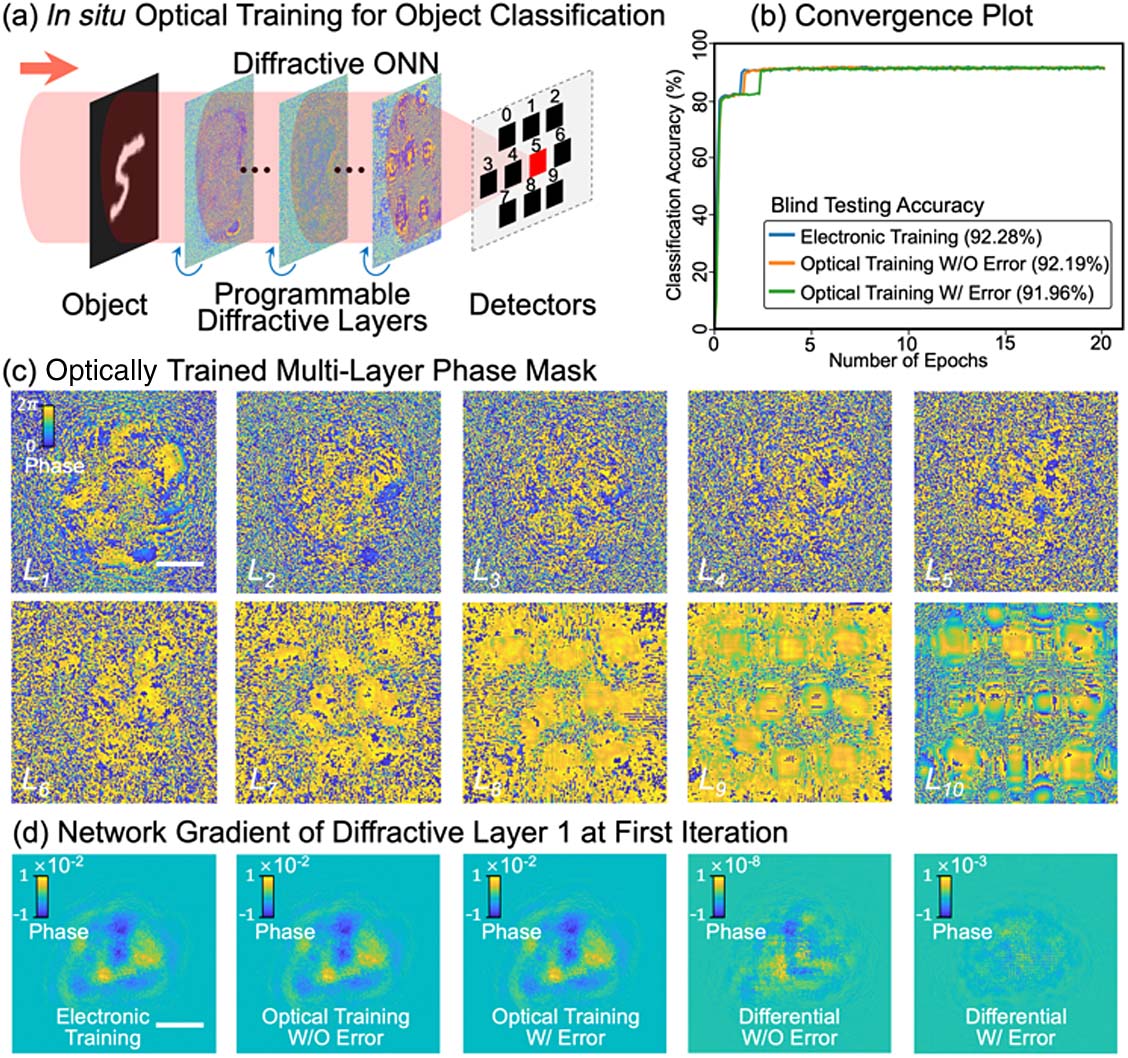

Object classification is a critical task in computer vision and one of the most successful applications of ANNs. The conventional object classification paradigm typically requires capturing and storing large-scale scene information as an image with an optoelectronic sensor and then processing it with artificial intelligence algorithms on an electronic computer. This separation of storage and computing significantly limits processing speed. Our all-optical machine learning framework based on diffractive ONNs performs light-speed computing directly on the object's optical wavefront, so the detectors need to measure only the classification result, e.g., 10 measurements for the 10 classes of the MNIST dataset, as shown in Fig.
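To connect the detector readout with the error optical field, the sketch below scores each class by the optical energy falling on its detector region and forms the error field dL/d conj(U) = (dL/dI) * U, which follows from I = |U|^2. The excerpt does not state the loss used in the paper; we assume a softmax cross-entropy over detector energies with a hypothetical temperature `temp`, and a hypothetical 2 x 5 grid of square detectors. A finite-difference check confirms that dL/dRe(U) = 2 Re(error field).

```python
import numpy as np

NUM_CLASSES = 10


def detector_masks(n, size=4):
    """Hypothetical 2 x 5 grid of square detector regions on an n x n output plane."""
    masks = np.zeros((NUM_CLASSES, n, n), dtype=bool)
    step = n // 5
    for c in range(NUM_CLASSES):
        row, col = divmod(c, 5)
        y0 = row * (n // 2) + (n // 2 - size) // 2
        x0 = col * step + (step - size) // 2
        masks[c, y0:y0 + size, x0:x0 + size] = True
    return masks


def class_loss_and_error_field(u_out, masks, label, temp=1.0):
    """Assumed softmax cross-entropy on detector energies, the error optical field
    dL/d conj(U) to be launched backward, and the predicted class."""
    intensity = np.abs(u_out) ** 2
    scores = temp * np.array([intensity[m].sum() for m in masks])
    p = np.exp(scores - scores.max())
    p /= p.sum()
    loss = -np.log(p[label])
    dl_ds = temp * (p - np.eye(len(masks))[label])  # dL/d(detector energy)
    dl_di = np.tensordot(dl_ds, masks.astype(float), axes=1)  # dL/dI, zero off-detector
    return loss, dl_di * u_out, int(np.argmax(scores))


rng = np.random.default_rng(1)
n = 40
masks = detector_masks(n)
u = 0.3 * (rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n)))
loss, err, pred = class_loss_and_error_field(u, masks, label=3)

# Finite-difference check at a pixel inside detector 3.
y, x = np.argwhere(masks[3])[0]
eps = 1e-6
up, um = u.copy(), u.copy()
up[y, x] += eps
um[y, x] -= eps
fd = (class_loss_and_error_field(up, masks, 3)[0]
      - class_loss_and_error_field(um, masks, 3)[0]) / (2 * eps)
```

In this model the error field is nonzero only on the detector regions, so the backward pass starts from a sparse field on the output plane.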

Fig. 2. In situ optical training of the diffractive ONN for object classification on the MNIST dataset. (a) By dynamically adjusting the network coefficients in situ with programmable diffractive layers, the diffractive ONN is optically trained with the MNIST dataset to classify handwritten digits. (b) Numerical simulations of a 10-layer diffractive ONN show blind-testing classification accuracies of 92.19% and 91.96% for the proposed in situ optical training approach without and with the CFGM error, respectively, comparable to the electronic training approach (classification accuracy of 92.28%). (c) After the optical training (with CFGM error), phase modulation patterns on 10 different diffractive layers (

The effectiveness of the proposed approach was validated by comparing the performance between in situ optical training and in silico electronic training [22] of a 10-layer diffractive ONN (see Section

The convergence plots of in silico electronic and in situ optical training of 10-layer diffractive ONN by using the MNIST training dataset and evaluating on the validation dataset are shown in Fig.

B. Optical Matrix-Vector Multiplication

Matrix-vector multiplication is one of the fundamental operations in artificial neural networks and the most time- and energy-consuming component on electronic computing platforms, owing to the limited clock rate and the large amount of data movement. The intrinsic parallelism of optical computing allows large-scale matrix multiplication to be performed at the speed of light with high energy efficiency, without a system clock or data movement. Previous works [20,49] on optical matrix-vector multiplication have limited degrees of freedom for constructing the matrix operator and require solving an optimization problem on an electronic computer to derive the design before deploying it with photonic equipment. Our in situ optical training approach eliminates the need for electronic optimization and offers many more degrees of freedom to realize the desired matrix operator, which both improves optimization efficiency and enhances the scalability of the operation.
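To see why a linear diffractive network acts as a matrix-vector multiplier once the vectors are encoded on the input and output planes, the sketch below writes each vector entry as a uniform block of complex amplitude, reads each output entry as the mean field over a detector block, and shows that any linear field transfer matrix H then realizes an effective matrix W. The block layout, the coherent (complex-field) readout, the random stand-in for H, and the definition of relative error as ||y - y_true|| / ||y_true|| are all our assumptions.

```python
import numpy as np

# Hypothetical layout: 16-element vectors on a 4 x 4 grid of 4 x 4-pixel blocks
# in a 32 x 32 input (and output) plane.
N_PLANE, GRID, BLOCK = 32, 4, 4


def block_slices(i):
    """Pixel slices of the block carrying vector entry i."""
    cell = N_PLANE // GRID
    r, c = divmod(i, GRID)
    y0 = r * cell + (cell - BLOCK) // 2
    x0 = c * cell + (cell - BLOCK) // 2
    return slice(y0, y0 + BLOCK), slice(x0, x0 + BLOCK)


def encode(vec):
    """Write each vector entry as a uniform complex amplitude over its block."""
    plane = np.zeros((N_PLANE, N_PLANE), dtype=complex)
    for i, v in enumerate(vec):
        plane[block_slices(i)] = v
    return plane.ravel()


def decode(plane):
    """Read each output entry as the mean complex field over its detector block."""
    plane = plane.reshape(N_PLANE, N_PLANE)
    return np.array([plane[block_slices(i)].mean() for i in range(GRID * GRID)])


def effective_matrix(transfer):
    """Matrix W realized by a linear optical system under this encoding/decoding."""
    m = GRID * GRID
    return np.stack([decode(transfer @ encode(np.eye(m)[j])) for j in range(m)], axis=1)


def relative_error(y, y_true):
    return np.linalg.norm(y - y_true) / np.linalg.norm(y_true)


rng = np.random.default_rng(2)
P = N_PLANE**2
# Stand-in for a trained network's field transfer matrix (random here, not trained).
H = (rng.normal(size=(P, P)) + 1j * rng.normal(size=(P, P))) / N_PLANE
W = effective_matrix(H)
x = rng.normal(size=GRID * GRID)
y = decode(H @ encode(x))  # what the detectors would read for input vector x
```

Training then amounts to adjusting the layer phases until W is close to the target matrix, for example until `relative_error(W @ x, M @ x)` is small over test vectors for a target matrix M.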

The computational architecture of in situ optical training of diffractive ONN for matrix-vector multiplication is shown in Fig.

![Fig. 3](/richHtml/prj/2020/8/6/06000940/img_003.jpg)

Fig. 3. In situ optical training of the diffractive ONN as an optical matrix-vector multiplier. (a) By encoding the input and output vectors to the input and output planes of the network, respectively, the diffractive ONN can be optically trained as a matrix-vector multiplier to perform an arbitrary matrix operation. (b) A four-layer diffractive ONN is trained as a 16×16 matrix operator [shown in the last column of (c)], the phase modulation patterns (L1,L2,L3,L4) of which are shown and can be reconfigured to achieve different matrices by programming the SLM modulations. (c) With an exemplar input vector on the input plane of the trained network (first column), the network outputs the matrix-vector multiplication result (second column), which achieves comparable results with respect to the ground truth (third column). (d) The relative error between the network output vector and ground truth vector is 1.15%, showing the high accuracy of our optical matrix-vector architecture. (e) By increasing the number of modulation layers, the relative error is decreased, and matrix multiplier accuracy can be further improved. Scale bar: 1 mm.

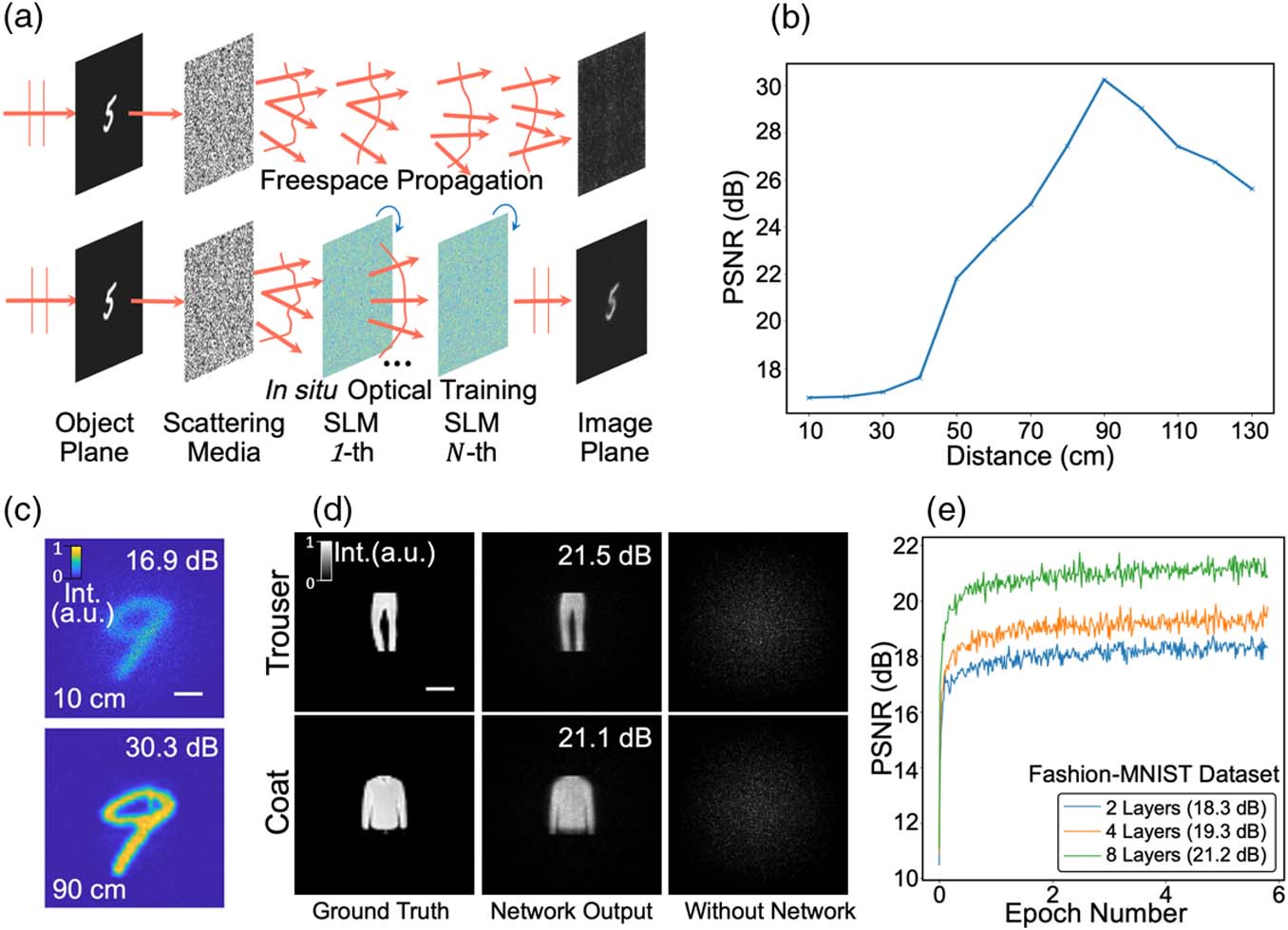

C. All-Optical Imaging Through Scattering Media

Imaging through scattering media has long been a difficult challenge with important applications in many fields [50–

The numerical simulation results of using diffractive ONN for imaging through translucent scattering media are demonstrated in Fig.

Fig. 4. Instantaneous imaging through scattering media with in situ optical training of the diffractive ONN. (a) The wavefront of the object is distorted by the scattering media and generates a speckle pattern on the detector under free-space propagation (top row). The diffractive ONN is optically trained in situ to take the distorted optical field as input and perform instantaneous de-scattering for object reconstruction (bottom row). (b) The MNIST dataset is used to train a two-layer diffractive ONN. The performance of the trained model is evaluated by calculating the peak signal-to-noise ratio (PSNR) of the de-scattering results on the testing dataset, which increases as the layer distance is increased within a reasonable range. (c) The network de-scattering results for the handwritten digit “9” from the MNIST testing dataset show PSNRs of 16.9 dB and 30.3 dB at layer distances of 10 cm and 90 cm, respectively. (d) An eight-layer diffractive ONN trained with the Fashion-MNIST dataset successfully reconstructs the objects “Trouser” and “Coat” (images from the testing dataset) from their distorted optical wavefronts. (e) Convergence plots of the two-, four-, and eight-layer diffractive ONNs trained with the Fashion-MNIST dataset, which achieve PSNRs of 18.3 dB, 19.3 dB, and 21.2 dB on the testing dataset, respectively. Scale bar: 1 mm.
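The de-scattering results are scored by PSNR. The caption does not state the intensity normalization, so the sketch below assumes images scaled to [0, 1] (peak = 1) and uses the standard definition 10 log10(peak^2 / MSE).

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB, 10*log10(peak^2 / MSE), for images scaled to [0, peak]."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    return np.inf if mse == 0 else 10.0 * np.log10(peak**2 / mse)


# A uniform error of 0.1 on a [0, 1] image gives MSE = 0.01, i.e. about 20 dB.
rng = np.random.default_rng(0)
obj = rng.uniform(0, 1, (28, 28))
print(f"{psnr(obj, obj + 0.1):.2f} dB")
```

Under the unit-peak assumption, the 16.9 dB and 30.3 dB quoted in Fig. 4(c) would correspond to MSEs of roughly 2.0 × 10^-2 and 9.3 × 10^-4.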

5. DISCUSSION

A. Optical Training Speed and Energy Efficiency

The optical error backpropagation architecture proposed in this paper accelerates training and improves the energy efficiency of the core computing modules compared with electronic training. Without considering the power consumption of peripheral drivers, the theoretical calculation of the training speed and energy efficiency for the proposed optical training of diffractive ONN can be found in Section

To calculate the optical energy efficiency, we set the power of the CNI MRL-FN-698 laser source (working wavelength 698 nm) to 1 mW, within its available power range. This still provides 0.12 μW of light power after 13 diffractive layers, accounting for the 50% transmission rate of the BS, which corresponds to hundreds of photons per pixel per microsecond for the Andor Zyla 5.5 sCMOS sensor and is sufficient for measurements with adequate SNR in in situ computing. According to the formulation in Eq. (
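A quick check of the power budget quoted above, assuming one 50% beam-splitter pass per layer (13 passes in total) and no other losses. The per-pixel photon figure depends on the number of illuminated sensor pixels, which this excerpt does not state, so the sketch stops at the total photon rate.

```python
H_PLANCK = 6.62607015e-34  # Planck constant, J*s (exact SI value)
C_LIGHT = 299_792_458.0  # speed of light in vacuum, m/s (exact SI value)


def power_after_splitters(p0_w, passes=13, transmission=0.5):
    """Optical power left after `passes` beam-splitter passes (no other losses modeled)."""
    return p0_w * transmission**passes


def photon_rate(power_w, wavelength_m=698e-9):
    """Photons per second carried by a beam of the given power at the given wavelength."""
    return power_w / (H_PLANCK * C_LIGHT / wavelength_m)


p_out = power_after_splitters(1e-3)  # 1 mW source
photons_per_us = photon_rate(p_out) * 1e-6
print(f"{p_out * 1e6:.3f} uW, {photons_per_us:.3g} photons per microsecond in total")
```

Since 1 mW × 0.5^13 ≈ 0.122 μW, the 0.12 μW figure in the text is consistent with exactly one 50% pass per layer.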

Table 1. Computational Performance of the Proposed Optical Training Architecture

B. System Calibration Under Misalignment Error

In silico electronic training of diffractive ONNs faces a major challenge in the physical implementation of the trained model, since different error sources in practice deteriorate the model. For example, as the number of layers increases, the alignment complexity of the diffractive layers increases significantly, which restricts network scalability. To address this issue, we propose the in situ optical training architecture, which physically implements the optical error backpropagation directly inside the optical system; this enables the network to adapt to system imperfections and avoids the alignment between successive layers. Nevertheless, at each layer, the gradient calculation in optical error backpropagation requires measurements of the forward and backward propagated optical fields, and misalignment between the forward and backward measurements leads to errors in the calculated gradient and deteriorates the trained model. For example, numerical evaluation shows that a misalignment of 8 μm in the measurements at each layer decreases the classification accuracy of in situ optical training with CFGM error from 91.96% to 89.45%. Unlike in silico electronic training, the alignment needs to be performed only within each layer, and the alignment complexity is independent of the number of network layers.

Furthermore, such misalignment can be calibrated out by optically calculating the gradient of each layer to estimate the amount of misalignment, as demonstrated in Section
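The gradient-based calibration referred to above is described in a section not included here. As a generic alternative for estimating a lateral offset between the forward and backward measurement channels, the sketch below uses FFT phase correlation, assuming (hypothetically) that the same calibration pattern can be recorded in both channels and that the offset is an integer number of pixels with periodic wrap-around. This is a standard image-registration technique swapped in for illustration, not the authors' procedure.

```python
import numpy as np


def estimate_shift(a, b):
    """Integer (dy, dx) such that a ≈ np.roll(b, (dy, dx), axis=(0, 1)), via phase correlation."""
    cross = np.fft.fft2(a) * np.conj(np.fft.fft2(b))
    cross /= np.maximum(np.abs(cross), 1e-12)  # keep phase only; guard against zeros
    corr = np.abs(np.fft.ifft2(cross))
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Map wrapped peak indices to signed shifts.
    return tuple(int(p) - n if p > n // 2 else int(p) for p, n in zip(peak, corr.shape))


# Hypothetical calibration: the same reference pattern recorded in the forward and the
# backward measurement channels, the latter displaced by a few pixels.
rng = np.random.default_rng(0)
pattern = rng.normal(size=(64, 64)) + 1j * rng.normal(size=(64, 64))
backward_view = np.roll(pattern, (3, -5), axis=(0, 1))
shift = estimate_shift(backward_view, pattern)
corrected = np.roll(backward_view, tuple(-s for s in shift), axis=(0, 1))
print(shift)
```

Once estimated, the offset can be undone on every subsequent backward measurement before forming the pixel-wise gradient; sub-pixel offsets would need interpolation, which this sketch does not handle.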

6. CONCLUSION

In conclusion, we have demonstrated that the diffractive ONN can be trained in situ at high speed and with high energy efficiency using the proposed optical error backpropagation architecture. Our approach can adapt to system imperfections and achieve highly accurate gradient calculation, which offers the prospect of reconfigurable and robust implementations of large-scale diffractive ONNs. Numerical evaluations with the simulated experimental system, configured with multilayer programmable SLMs, on three different applications (light-speed object classification, optical matrix-vector multiplication, and all-optical imaging through scattering media) demonstrate the effectiveness of the proposed approach. The architecture can be easily extended to nonlinear diffractive ONNs by measuring the optical field at nonlinear layers and calculating additional nonlinear gradients (details in Section

Limitations of the proposed in situ optical training system include the sequential read-in mode and the relatively high cost of existing SLMs. These could be alleviated with integrated photonics: with the emergence of programmable on-chip optoelectronic devices, e.g., tunable metasurface SLMs [53], the proposed architecture could potentially be implemented at the chip scale to achieve an in-memory optical machine learning platform with high-density integration and greater cost-effectiveness. Given the ubiquitous use of analog devices and the growing importance of in situ learning architectures in modern neuromorphic computing [54], we believe the proposed optical error backpropagation approach for in situ training of ONNs provides essential support in neuromorphic photonics for building next-generation high-performance large-scale brain-inspired photonic computers.

[1] Y. LeCun, Y. Bengio, G. Hinton. Deep learning. Nature, 2015, 521: 436-444.

[14]

[16] D. R. Solli, B. Jalali. Analog optical computing. Nat. Photonics, 2015, 9: 704-706.

[36] Y. Zuo, B. Li, Y. Zhao, Y. Jiang, Y.-C. Chen, P. Chen, G.-B. Jo, J. Liu, S. Du. All optical neural network with nonlinear activation functions. Optica, 2019, 6: 1132-1137.

[45] K. Wagner, D. Psaltis. Multilayer optical learning networks. Appl. Opt., 1987, 26: 5061-5076.

[47] I. Yamaguchi, T. Zhang. Phase-shifting digital holography. Opt. Lett., 1997, 22: 1268-1270.

Tiankuang Zhou, Lu Fang, Tao Yan, Jiamin Wu, Yipeng Li, Jingtao Fan, Huaqiang Wu, Xing Lin, Qionghai Dai. In situ optical backpropagation training of diffractive optical neural networks[J]. Photonics Research, 2020, 8(6): 06000940.