Acta Photonica Sinica, 2024, 53(1): 0111002, published online: 2024-02-01

BEV Space 3D Object Detection Algorithm Based on Fusion of Infrared Camera and LiDAR

Summary

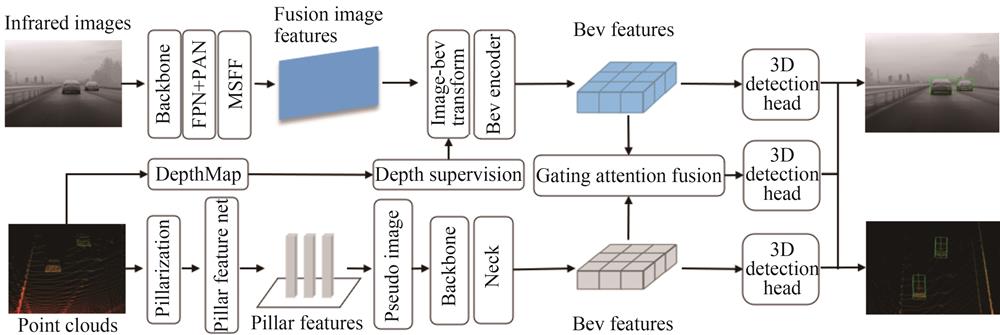

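Combining the advantages of MEMS LiDAR and an infrared camera, this work designs a simple, lightweight, easily extensible, and easily deployable separable fusion perception system for 3D object detection. The LiDAR and the infrared camera are set up as independent branches that can work either independently or jointly, which improves the deployability of the model. The model uses bird's-eye-view (BEV) space as a unified representation of the two modalities: the camera branch maps 2D image space, and the LiDAR branch maps 3D space, into BEV space, and the fusion branch fuses the features from the two branches with a gated attention fusion mechanism. Tests in real-world scenarios verify the effectiveness of the algorithm.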
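
The gated attention fusion mechanism is named but its formula is not given here. The sketch below assumes one common form of gated fusion: a per-cell gate `g = sigmoid(W [F_cam; F_lidar] + b)` computed by a 1x1 convolution over the concatenated BEV features, with the fused map `g * F_cam + (1 - g) * F_lidar`. It also mirrors the separable design by passing one branch's BEV features straight through when the other sensor is unavailable. The function name `gated_fusion`, the parameters `w_gate`/`b_gate`, and the (C, H, W) layout are hypothetical illustrations, not the authors' implementation.

```python
import numpy as np
from typing import Optional


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def gated_fusion(cam_bev: Optional[np.ndarray],
                 lidar_bev: Optional[np.ndarray],
                 w_gate: np.ndarray,
                 b_gate: np.ndarray) -> np.ndarray:
    """Hypothetical gated fusion of two (C, H, W) BEV feature maps.

    w_gate: (C, 2C) weights of an assumed 1x1 convolution; b_gate: (C,) bias.
    """
    # Separable design: with one sensor missing, pass the other branch through.
    if cam_bev is None and lidar_bev is None:
        raise ValueError("at least one branch must provide BEV features")
    if cam_bev is None:
        return lidar_bev
    if lidar_bev is None:
        return cam_bev
    if cam_bev.shape != lidar_bev.shape:
        raise ValueError("both BEV maps must share the same (C, H, W) grid")
    stacked = np.concatenate([cam_bev, lidar_bev], axis=0)  # (2C, H, W)
    # A 1x1 convolution is a per-cell matrix multiply over the channel axis.
    logits = np.einsum("oc,chw->ohw", w_gate, stacked) + b_gate[:, None, None]
    gate = sigmoid(logits)  # (C, H, W), each value in (0, 1)
    return gate * cam_bev + (1.0 - gate) * lidar_bev


rng = np.random.default_rng(0)
n_ch, height, width = 4, 8, 8
cam = rng.standard_normal((n_ch, height, width))
lidar = rng.standard_normal((n_ch, height, width))
w = 0.1 * rng.standard_normal((n_ch, 2 * n_ch))
b = np.zeros(n_ch)

fused = gated_fusion(cam, lidar, w, b)
print(fused.shape)                               # (4, 8, 8)
print(gated_fusion(None, lidar, w, b) is lidar)  # True (LiDAR-only fallback)
```

Because the gate is a weight in (0, 1) for every channel and BEV cell, each fused value is a convex combination of the two branch values; a learned gate of this kind can lean on the LiDAR features where the infrared image is uninformative, and vice versa.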

Abstract

In recent years, with the rapid development of AI, autonomous driving has been booming around the world. It is regarded as an inevitable trend in the future development of the automotive industry and will fundamentally change the way people travel. Perception is the key link in autonomous driving and the guarantee of driving intelligence and safety. Accurate, real-time 3D object detection is the core function that allows an autonomous vehicle to perceive and understand its complex surroundings, and it is the basis of decision and control processes such as path planning, motion prediction, and emergency obstacle avoidance. A 3D object detector must predict not only the category of each target but also its size, distance, position, orientation, and other 3D attributes. Because road traffic in China is very complex, high-level autonomous driving requires multiple sensors working together, and a multi-sensor fusion perception scheme can improve a vehicle's ability to interact with the real world. However, current advanced multi-sensor fusion detection models are overly complex and scale poorly: if any one sensor fails, the whole system stops working, which limits their deployment in high-level autonomous driving applications. In addition, visible-light cameras perform poorly at night, in rain, snow, and fog, and under backlighting, which reduces driving safety. To address these problems, this paper exploits the advantages of MEMS LiDAR and infrared cameras to design a simple, lightweight, easily extensible, and easily deployable separable fusion perception system for 3D object detection. The LiDAR and the infrared camera are set up as separate branches that can work either independently or together, decoupling the interdependence between the two sensors.
If one sensor fails, the other is unaffected, which improves the deployability of the model. The model uses Bird's Eye View (BEV) space as a unified representation of the two modalities. BEV space simplifies complex 3D space into a 2D plane with a unified coordinate system, which makes cross-camera fusion, multi-view camera merging, and multi-modal fusion easier to achieve. The camera branch maps 2D image space, and the LiDAR branch maps 3D point-cloud space, into this common BEV space, resolving the differences between the two sensors in data representation and spatial coordinate system. The camera branch uses YOLOv5 as its feature extraction network, because YOLOv5 is widely used in engineering practice and is easy to deploy. Since accurate depth estimation is the key to transforming image features into BEV features, this paper improves the camera branch by introducing a point-cloud Depth Supervision (DSV) module and a Camera Parameter Prior (CPP) module that enhance its depth estimation. A GPU-accelerated kernel is designed for the image-to-BEV view transformation to increase detection speed. Although the camera branch alone has limited performance, combining it with the LiDAR branch in the fusion branch significantly outperforms the single-modality branches. The LiDAR branch can use any state-of-the-art (SOTA) point-cloud detection model. The fusion branch uses a Gating Attention Fusion (GAF) mechanism to fuse the BEV features from the different branches and then performs 3D object detection. If one sensor fails, the camera branch or the LiDAR branch can complete the 3D object detection task on its own. The model has been successfully deployed on an embedded AI computing platform, the MIIVII APEX AD10. Experimental results show that the proposed model is effective, easy to extend, and easy to deploy.
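
The abstract does not say how the DSV module builds its depth targets. A common way to supervise image depth with a point cloud is to project the LiDAR points into the image plane and use their depths as sparse per-pixel labels. The sketch below does this under a pinhole camera model, assuming a 4x4 LiDAR-to-camera extrinsic `T_cam_from_lidar` and a 3x3 intrinsic matrix `K` for the infrared camera; the function name `lidar_depth_targets` and the toy calibration and points are hypothetical.

```python
import numpy as np


def lidar_depth_targets(points_lidar: np.ndarray,
                        T_cam_from_lidar: np.ndarray,
                        K: np.ndarray,
                        height: int,
                        width: int) -> np.ndarray:
    """Project (N, 3) LiDAR points into the image as an (H, W) sparse depth map.

    Pixels without a LiDAR return are 0. Assumes a pinhole camera with
    intrinsics K (3x3) and a LiDAR-to-camera extrinsic T_cam_from_lidar (4x4).
    """
    homo = np.hstack([points_lidar, np.ones((len(points_lidar), 1))])  # (N, 4)
    pts_cam = (T_cam_from_lidar @ homo.T).T[:, :3]  # camera frame, z forward
    pts_cam = pts_cam[pts_cam[:, 2] > 1e-3]  # drop points behind the camera
    uvw = (K @ pts_cam.T).T  # homogeneous pixel coordinates
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)
    depth = pts_cam[:, 2]
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, depth = u[inside], v[inside], depth[inside]
    depth_map = np.full((height, width), np.inf)
    # Several points can land on one pixel; keep the nearest (unbuffered min).
    np.minimum.at(depth_map, (v, u), depth)
    depth_map[np.isinf(depth_map)] = 0.0
    return depth_map


# Toy example: LiDAR frame coincides with the camera frame (z forward).
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 24.0], [0.0, 0.0, 1.0]])
pts = np.array([[0.0, 0.0, 10.0], [0.0, 0.0, 5.0], [1.0, 0.0, 10.0],
                [0.0, 0.0, -5.0], [100.0, 0.0, 10.0]])
dm = lidar_depth_targets(pts, np.eye(4), K, height=48, width=64)
print(dm[24, 32], dm[24, 42], np.count_nonzero(dm))  # 5.0 10.0 2
```

In the toy run, the point behind the camera and the point that projects outside the image are discarded, and the two points hitting pixel (24, 32) resolve to the nearer depth. In a depth-supervised BEV pipeline, such sparse targets would typically be discretized into the depth bins the camera branch predicts, with empty pixels (0 here) excluded from the loss.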

Wuyue WANG, Zhaofei XU, Chunyan QU, Ying LIN, Yufeng CHEN, Jian LIAO. BEV Space 3D Object Detection Algorithm Based on Fusion of Infrared Camera and LiDAR[J]. ACTA PHOTONICA SINICA, 2024, 53(1): 0111002.