Autonomous aeroamphibious invisibility cloak with stochastic-evolution learning

1 Introduction

The ability to render an object invisible would offer humans high survival value, allowing them to disguise themselves and to protect a broad range of instruments, such as spacecraft circuits, electronic shielding, and radomes.1 In physics, an invisibility cloak aims to suppress the electromagnetic (EM) scattering of a hidden object or to reconstruct its scattering characteristics so that they imitate those of the pure background. Over the centuries, substantial efforts have been devoted to this long-standing dream, including the early adoption of EM absorption2 and camouflage clothing.3 Two decades ago, the seminal works on transformation optics and metamaterials opened the modern chapter of invisibility cloaks.4 Based on the form invariance of Maxwell’s equations, transformation optics guides the flow of light around the hidden object by means of a bulky metamaterial shell.5 Despite its theoretical elegance, its practical implementation is hampered by the extreme metamaterial parameters it requires, which are both anisotropic and inhomogeneous.6 Concurrently, other invisibility schemes, such as ultrathin metasurface cloaks7 and zero-refractive-index invisible channels,8 have been proposed, each with strengths and weaknesses suited to specific application scenarios.9

However, state-of-the-art methods and existing invisibility cloaks share a common limitation: they work only for a single, predefined EM illumination and a stationary background, because active metasurfaces offer a restricted set of reflection/transmission states in which amplitude and phase are coupled.15 A cloak that instead adapts itself to changing illumination and surroundings would usher in a new era of intelligent cloaks that cater to the dynamic, nondeterministic landscapes of real-world applications. Achieving this goal, however, raises multifaceted challenges spanning fundamental physical principles, intelligent algorithms, and all-in-one system integration. First, most invisibility cloaks are idealized to work in a single, homogeneous background, which is rarely the case in practice. Switching between scenarios such as desert, sea, and air brings distinct scattering characteristics that degrade a well-defined cloaking effect. Although active metasurfaces provide a dynamic range of reflection/transmission responses, they mainly regulate the phase, while the amplitude stays unchanged or is entangled with the phase.16 Second, unearthing the elusive relationship among the metasurface cloak, the EM illumination, and the surrounding environment is pivotal for intelligent cloaks. For a user-defined cloaking effect, brute-force search combined with lengthy case-by-case full-wave simulations is suboptimal, because it inevitably degrades the working efficiency of the invisibility cloak.17

Here we present a probabilistic-inference-based autonomous aeroamphibious cloak capable of adapting to kaleidoscopic environments and neutralizing external stimuli. The idea is built on an unmanned drone platform adorned with ultrathin reconfigurable metasurfaces operating at microwave frequencies. We impart spatiotemporal modulation to the metasurfaces to synthesize a large number of equivalent reflection states that cover nearly the entire complex reflection plane, which lays the physical foundation for integrating exotic functionalities and versatile cloaking modalities.23,24 To automate the invisible drone, we introduce a generation-elimination network, termed stochastic-evolution learning, that swiftly outputs the control commands for the spatiotemporal metasurfaces. The network consists of a conditional variational autoencoder (CVAE) that automatically generates a constellation of output candidates and a forward neural network that eliminates the inferior ones. Its built-in stochastic sampling effectively addresses the complex, nonunique (one-to-many) correspondence in inverse design (the accuracy reaches 97.8%). In the experiment, an all-in-one invisible drone flies freely across a conical detection region with near-zero backscattering, appearing as if nothing were there. Against an amphibious background, we benchmark the invisible drone by disguising it either as the pure background or as arbitrary user-defined illusive patterns (the similarity is up to 95%). Our work heralds a new genus of sea–land–air intelligent cloaks and helps carry a myriad of hitherto inaccessible concepts toward off-the-shelf applications.25

2 Methods

2.1 Training of the Generation-Elimination Network

In the example of Fig. 3, 100,000 samples of metasurface spectra are collected. The data are shuffled; 80% are randomly selected as the training set, and the remaining 20% are used for validation and testing. The generation-elimination network is trained using Python 3.7.11 and the TensorFlow framework, version 2.7.0 (Google Inc.), on a server (GeForce RTX 4090 GPU and AMD Ryzen Threadripper PRO 5975WX 32-core CPU with 128 GB RAM, running Windows). Different optimization strategies, e.g., varying the dimension of the latent space, are explored in Supplementary Notes 5 and 6 in the Supplementary Material.
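The shuffle-and-split step can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the authors' pipeline: the function name `split_dataset`, the fixed seed, and the use of `random.Random` are assumptions, and because the text does not say how the held-out 20% is divided between validation and testing, the sketch returns it as one block.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(samples: Sequence[T], train_frac: float = 0.8,
                  seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle the samples and split them into a training part and a held-out part.

    The held-out part corresponds to the data "used for validation and testing";
    how it is subdivided further is not specified, so it is returned as one list.
    """
    rng = random.Random(seed)            # fixed seed so the split is reproducible
    order = list(range(len(samples)))
    rng.shuffle(order)                   # random (blind) selection of the training set
    n_train = round(train_frac * len(samples))
    train = [samples[i] for i in order[:n_train]]
    held_out = [samples[i] for i in order[n_train:]]
    return train, held_out


if __name__ == "__main__":
    data = list(range(100_000))          # stand-in for the 100,000 collected samples
    train, held_out = split_dataset(data)
    print(len(train), len(held_out))     # 80000 20000
```

Shuffling indices rather than the samples themselves keeps the input untouched and makes it easy to store the split for later reuse.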

2.2 Architecture of the Intelligent Invisible Drone

For the intelligent invisible drone, we use the Jetson Xavier NX as the core processing platform. First, the Jetson reads the detected information from the three onboard sensors (camera, EM detector, and gyroscope). This information, together with the on-demand cloaking pictures, is input into the pretrained generation-elimination network, which outputs the temporal sequences that meet the invisibility requirement. The temporal sequences are then conveyed to the metasurface inclusions over an RS485 bus. An STM32F103 chip serves as the controller of the metasurface system, and the signal lines of the metasurface board are connected to the I/O pins of the STM32. Following the temporal sequence, the STM32 outputs 3.3 or 0 V in real time.
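The last stage of this chain, which turns each column's temporal sequence into diode bias levels, can be sketched as follows. This is a hypothetical illustration. The following are all assumptions: which state index corresponds to which (on, off) diode pair, the convention that 3.3 V switches a diode on, and the per-time-slot frame layout. The actual RS485 message format and STM32 firmware are not described in the text.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical assignment of the four meta-atom states to the two PIN diodes.
# The paper lists the states (on, on), (on, off), (off, on), (off, off);
# the index order used here is an assumption.
DIODE_STATES: Dict[int, Tuple[bool, bool]] = {
    0: (True, True),
    1: (True, False),
    2: (False, True),
    3: (False, False),
}
V_ON, V_OFF = 3.3, 0.0  # output levels from the text (assumed: 3.3 V turns a diode on)


def column_voltages(sequence: Sequence[int]) -> List[Tuple[float, float]]:
    """Map one column's temporal sequence (state index per time slot) to diode bias pairs."""
    return [tuple(V_ON if on else V_OFF for on in DIODE_STATES[s]) for s in sequence]


def slot_frames(sequences: Sequence[Sequence[int]]) -> List[List[Tuple[float, float]]]:
    """Transpose per-column sequences into per-time-slot frames.

    Frame t holds the (diode 1, diode 2) voltages of every column during slot t,
    i.e., what the controller would drive on its I/O pins for one gate duration.
    """
    if len({len(s) for s in sequences}) != 1:
        raise ValueError("all columns must share the same sequence length L")
    per_column = [column_voltages(s) for s in sequences]
    return [list(frame) for frame in zip(*per_column)]


if __name__ == "__main__":
    frames = slot_frames([[0, 3], [1, 2]])  # two columns, L = 2 (toy example)
    print(frames[0])  # [(3.3, 3.3), (3.3, 0.0)]
```

Grouping the output by time slot mirrors how a microcontroller would update all of its pins once per gate duration.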

3 Results

3.1 System Architecture of the Autonomous Aeroamphibious Cloak

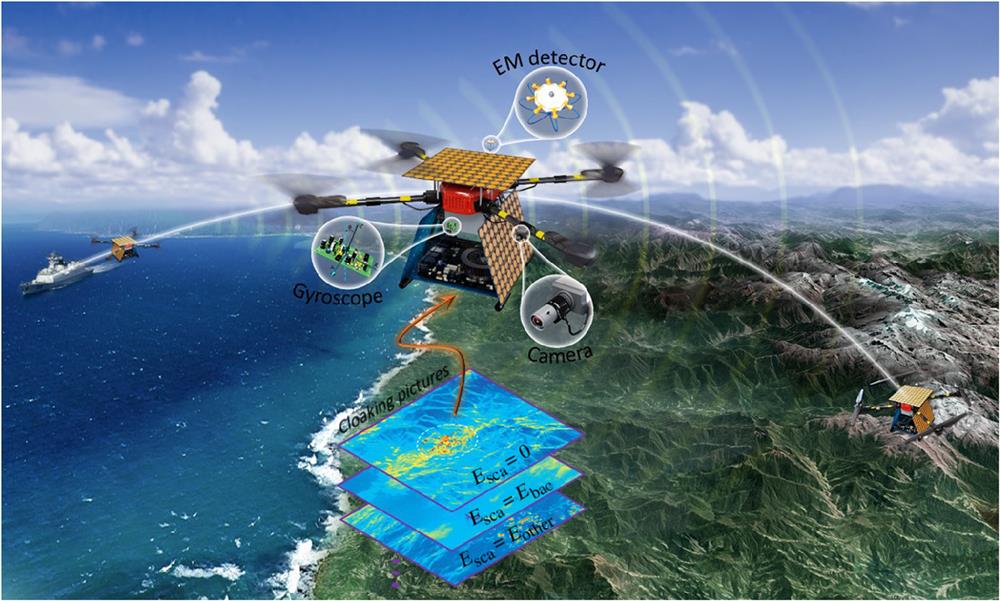

Ultimately, an invisibility cloak should operate in cluttered, dynamic, and unseen environments and neutralize various means of detection. This remains out of reach for almost all existing metamaterial-based invisibility cloaks, which are too idealized to generalize to practical implementations and are thus restricted to fixed environments and morphologies. In this regard, Fig. 1 illustrates our proposed autonomous aeroamphibious cloak, equipped with perception, decision, and action modules. An autonomous invisible drone takes off from sea level, hovers in the sky, and lands in the mountains, remaining invisible throughout. Hostile radars either cannot detect it or identify it as a different object. This vision resembles natural animals such as the chameleon and the octopus, which automatically modify their body colors or textures to match their habitat. We decorate the unmanned drone with ultrathin reconfigurable metasurfaces to dynamically tune the local reflection spectra. When the reflection states are switched periodically in time, reconfigurable metasurfaces transmute into time-varying, or spatiotemporal, metasurfaces. The space–time duality in Maxwell’s equations implies that applying time modulation to the reflection response extends the ability to sculpt EM waves to both the space and frequency domains. This enables many exotic effects, such as the breakdown of Lorentz reciprocity and Doppler-like frequency shifts.30

Fig. 1. Schematic of the autonomous aeroamphibious invisibility cloak. The invisible drone integrates perception, decision, and action modules that allow it to self-adapt to kaleidoscopic environments and offset external detection without human intervention. The perception module mainly includes a custom-built EM detector for capturing incoming waves, a gyroscope for sensing attitude, acceleration, and angular velocity, and a camera for observing the surrounding environment. The detected information, together with user-defined cloaking pictures, is input into a pretrained deep-learning model that instructs the drone to act on a millisecond time scale. According to the output, the reconfigurable spatiotemporal metasurface veneers globally manipulate the scattered wave by directly controlling the temporal sequence of each meta-atom. As a consequence, when shuttling freely among sea, land, and air, the drone can remain invisible at all times or disguise itself with other illusive scattering appearances. Such an aeroamphibious cloak is a milestone in bringing conventional proof-of-concept metamaterial-based invisibility cloaks out of the laboratory.

Under the hood of the invisible drone, a perception module is indispensable for sensing the surrounding environment and the drone's own position and state in real time; it mainly comprises three onboard detectors. A home-made intelligent EM detector perceives the incident angle, frequency, and polarization of incoming waves;33 see Supplementary Note 9 in the Supplementary Material. A gyroscope senses the attitude, acceleration, and angular velocity of the drone itself, and a camera captures the surrounding environment. All of this information is fed into a pretrained deep-learning model (decision module), which orchestrates the spatiotemporal metasurface inclusions (action module) for a fast reaction. Any customized scattering appearance can be realized directly by the invisible drone without human intervention, for example, camouflaging the drone as a rabbit in the mountains.34 Although several works have embedded active components and responsive materials into metasurfaces to realize tunable invisibility cloaks,35 they still require external assistance for each specific task. Very recently, we proposed the concept of an intelligent cloak, yet its proof-of-concept experimental demonstration only works against high-reflection backgrounds.15 A large gap thus remains before an intelligent cloak can be transferred to real-world applications.

3.2 Design and Working Mechanism of Spatiotemporal Metasurfaces

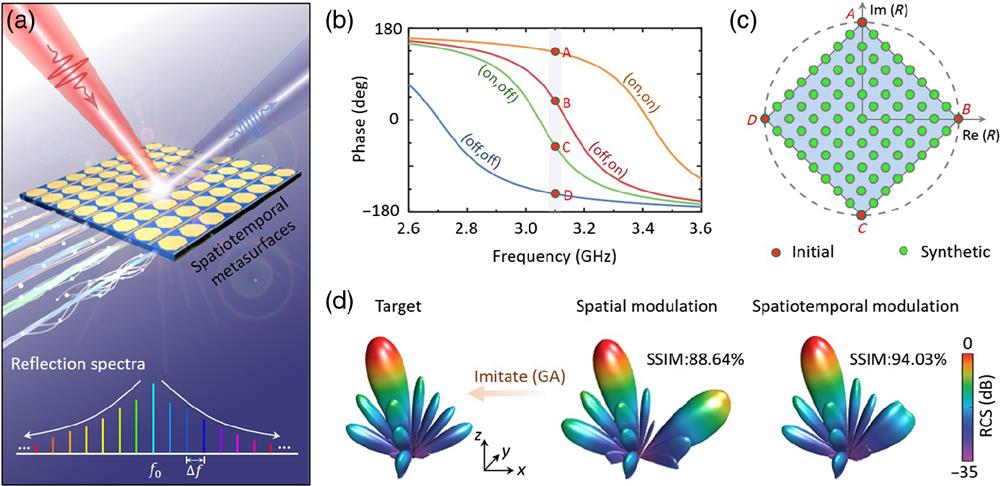

At microwave frequencies, we consider spatiotemporal metasurfaces whose meta-atom consists of an asymmetric hexagonal metallic patch and two metallic strips mounted on a dielectric substrate backed by a ground plane [Fig. 2(a)]. By applying different direct-current bias voltages to the two soldered positive–intrinsic–negative (PIN) diodes, the reflection response of the meta-atom can be dynamically switched among four discrete states, i.e., (on, on), (on, off), (off, on), and (off, off). We optimize the geometry of the meta-atom to attain a phase interval of 90° among the four states while keeping the amplitude as high as possible, so as to guarantee a high manipulation efficiency. The period of the meta-atom is ultimately chosen as 40 mm; see Supplementary Note 1 in the Supplementary Material for details of the meta-atom. As shown in Fig. 2(b), the phases of the four states are uniformly distributed at 3.1 GHz (the center frequency $f_c$, corresponding to a working wavelength $\lambda_c \approx 96.7$ mm), and the amplitude is higher than 0.95.
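As a quick sanity check on these numbers, the working wavelength and the ideal four-state phase grid follow from simple arithmetic. The sketch assumes free-space propagation (c ≈ 2.998 × 10^8 m/s) and idealizes the four states as unit-amplitude phasors spaced by 90°. The measured states have amplitudes above 0.95 and only approximately uniform phases.

```python
import cmath
import math

C0 = 299_792_458.0   # speed of light in vacuum (m/s)
F_C = 3.1e9          # center frequency from Fig. 2(b) (Hz)
PERIOD_MM = 40.0     # meta-atom period (mm)

wavelength_mm = C0 / F_C * 1e3          # free-space working wavelength
period_in_wavelengths = PERIOD_MM / wavelength_mm


def ideal_states(n_states: int = 4, amplitude: float = 1.0):
    """Reflection phasors of equal amplitude with uniform phase spacing of 360/n degrees."""
    return [amplitude * cmath.exp(1j * 2 * math.pi * k / n_states) for k in range(n_states)]


if __name__ == "__main__":
    print(f"wavelength = {wavelength_mm:.1f} mm")                # wavelength = 96.7 mm
    print(f"period = {period_in_wavelengths:.2f} wavelengths")  # period = 0.41 wavelengths
    print([round(math.degrees(cmath.phase(s))) % 360 for s in ideal_states()])  # [0, 90, 180, 270]
```

A period of roughly 0.41 wavelength is subwavelength, which is what allows the array to be treated as a metasurface.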

Fig. 2. Design and working mechanism of spatiotemporal metasurfaces. (a) The spatiotemporal metasurfaces are composed of an array of reconfigurable meta-atoms operating at microwave frequencies, each of which incorporates two PIN diodes. The specific geometries of the metasurfaces are given in Supplementary Note 1 in the Supplementary Material. By feeding periodic time-varying voltage sequences, the spatiotemporal metasurfaces generate a series of reflected harmonic waves with customized scattering patterns and power distributions. (b) Reflection response of the metasurfaces for different bias voltages across the loaded diodes. Two diodes yield four reflection states. At 3.1 GHz, the reflected phases have a uniform interval, while the reflected amplitude remains high. (c) Synthetic reflection states at the center frequency. By controlling the time-varying sequence (period of 8) of the meta-atom, a constellation of equivalent reflection states is synthesized to occupy the complex plane. Here we underscore that one time-varying series induces only one equivalent state, whereas one equivalent state can be induced by more than one time-varying series. (d) Comparison between spatial and spatiotemporal metasurfaces. For a given three-dimensional scattering pattern, spatiotemporal modulation offers more degrees of freedom to mimic the ground truth, reaching an SSIM of 94.03%, in contrast to 88.64% with spatial-only modulation (using only the four initial reflection states). Here a conventional GA is adopted to optimize the profiles of the metasurfaces. SSIM, structural similarity; GA, genetic algorithm.

Under illumination by a plane wave at frequency $f_c$, spatiotemporal metasurfaces can generate a multitude of harmonic waves when periodic time-varying series are imposed. According to Fourier theory, a periodic square-wave series can be decomposed into a sum of orthogonal sinusoidal components with different angular frequencies.24 The length $L$ of the square-wave series determines the frequencies of the harmonic waves, and its specific sequence determines the reflection response; see Supplementary Note 2 in the Supplementary Material. The modulation period is $T_0 = L\tau$, and the $m$th harmonic frequency is $f_c + mF_0$ with $F_0 = 1/T_0$, where $\tau$ is the time duration of the basic gate function and $m \in \mathbb{Z}$. If $L$ becomes 1, the spatiotemporal metasurfaces degenerate into basic spatial gradient metasurfaces. In this study, we consider $L = 8$; on the basis of the four discrete reflection states, this synthesizes 81 equivalent states at the frequency $f_c$, as shown in Fig. 2(c). These equivalent states are virtual working states derived from the Fourier coefficients of the square-wave series, a concept introduced to facilitate the subsequent inverse design. The equivalent states occupy almost the entire complex plane, offering more degrees of freedom. By carefully designing the square-wave series, the reflected waves at the center and harmonic frequencies can be manipulated synergistically. To benchmark the advantage of spatiotemporal metasurfaces, we carry out a numerical experiment on far-field customization. As shown in Fig. 2(d), we randomly set up a far-field target and mimic it with spatial-only and spatiotemporal metasurfaces using a genetic algorithm. The structural similarity (SSIM) improves from 88.64% to 94.03%, indicating that spatiotemporal metasurfaces have a stronger ability to manipulate EM waves.
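The link between a square-wave sequence and its equivalent states can be made concrete with the Fourier-series expression commonly used for space-time-coding metasurfaces (cf. Ref. 24). Consider a period of $L$ gates of duration $\tau$ with reflection coefficients $\Gamma_k$, and use the $e^{+i2\pi mF_0 t}$ convention with $\mathrm{sinc}\,x = \sin x / x$. The $m$th coefficient is then $a_m = \frac{1}{L}\,\mathrm{sinc}(\pi m/L)\sum_{k=1}^{L}\Gamma_k e^{-i\pi m(2k-1)/L}$. The center-frequency (equivalent) state is therefore simply $a_0 = \frac{1}{L}\sum_k \Gamma_k$. The sketch below assumes ideal unit-amplitude states at 0°, 90°, 180°, and 270°. Under that idealization, enumerating all $4^8$ sequences for $L = 8$ reproduces the 81 equivalent states, and it also shows that many different sequences map to the same state.

```python
import cmath
import math
from collections import Counter
from itertools import product
from typing import Sequence

# Idealized reflection coefficients of the four basic states (assumption:
# unit amplitude, phases 0, 90, 180, 270 degrees).
STATES = (1 + 0j, 1j, -1 + 0j, -1j)


def harmonic_coefficient(sequence: Sequence[int], m: int, states=STATES) -> complex:
    """Fourier coefficient a_m of the periodic reflection built from L gate functions."""
    L = len(sequence)
    if m == 0:
        return sum(states[s] for s in sequence) / L  # equivalent state at f_c
    x = math.pi * m / L
    sinc = math.sin(x) / x
    phasors = sum(states[s] * cmath.exp(-1j * math.pi * m * (2 * k - 1) / L)
                  for k, s in enumerate(sequence, start=1))
    return sinc * phasors / L


def equivalent_state_multiplicity(L: int = 8) -> Counter:
    """Count how many of the 4**L sequences produce each center-frequency state.

    With the idealized STATES, L * a_0 = (n0 - n2) + 1j * (n1 - n3), where n_i is
    how often state i occurs, so exact integer keys avoid floating-point rounding.
    """
    counts = Counter()
    for seq in product(range(4), repeat=L):
        counts[(seq.count(0) - seq.count(2), seq.count(1) - seq.count(3))] += 1
    return counts


if __name__ == "__main__":
    multiplicity = equivalent_state_multiplicity(8)
    print(len(multiplicity))                 # 81 distinct equivalent states
    print(multiplicity[(0, 0)] > 1)          # True: many sequences give zero reflection
    print(abs(harmonic_coefficient([0, 2, 0, 2, 1, 3, 1, 3], 0)))  # 0.0 (origin of the plane)
```

The count of 81 is consistent with the lattice picture: $L a_0$ takes every Gaussian-integer value with $|\mathrm{Re}| + |\mathrm{Im}| \le 8$ and even coordinate sum. The multiplicity table is exactly the one-to-many mapping mentioned in the Fig. 2(c) caption.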

3.3 Stochastic-Evolution Learning

We then discuss how to unlock the intricate relationship between the spatiotemporal metasurfaces covering the unmanned drone and the generated scattering field. For this purpose, brute-force search and heuristic optimization methods call for a vast number of numerical simulations and inevitably encounter many failed cases that are simply discarded, wasting substantial computing resources. The resulting spatiotemporal metasurfaces may well be satisfactory, but these methods are innately limited by their trial-and-error nature and slow convergence. Moreover, in practice, complex radio equipment is required to monitor the far field, making such approaches hard to generalize into a convenient strategy. Recently, deep learning has emerged as a way to expedite on-demand photonic design and mitigate the shortcomings of conventional methods.17

Despite these exciting achievements, applying them to our work is challenging for the following reasons. First, the nonuniqueness (one-to-many) issue is ubiquitous in inverse design: different metasurface distributions may generate the same or highly similar far fields. An orthodox neural network struggles to converge on such data because the training set contains conflicting samples. Although tandem networks can alleviate the nonuniqueness issue by relaxing the convergence requirement, they do not fundamentally solve it, and conflicting gradients remain.36 Second, almost all related works rest on the default premise that the input is given in complete form, which is not always the case in practice. If the missing part is then artificially repaired, the output becomes largely contingent on the particular, stochastic repaired version. Third, in most cases we prefer multiple viable solutions rather than a single one. This makes the model more agile and gives it a way to recover if an output seems wrong or the user has another preference.
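The one-to-many issue is easy to reproduce even in a toy forward model. As an assumption standing in for the full scattering model, the sketch below uses a one-dimensional array factor with 10 columns spaced by 0.41 wavelength (roughly the 40 mm period at 3.1 GHz). Reversing a column distribution and conjugating its weights yields a different distribution whose far-field magnitude is identical at every angle, because $AF_b(u) = e^{i(N-1)u}\,\overline{AF_a(u)}$. A network trained on such data would see one label attached to two different inputs.

```python
import cmath
import math
from typing import List, Sequence


def array_factor_magnitude(weights: Sequence[complex], angles_deg: Sequence[float],
                           spacing_wl: float = 0.41) -> List[float]:
    """|AF(theta)| of a 1D array whose n-th column has complex reflection weight w_n.

    spacing_wl is the column spacing in wavelengths (toy model, not the paper's solver).
    """
    kd = 2 * math.pi * spacing_wl
    result = []
    for theta in angles_deg:
        u = kd * math.sin(math.radians(theta))
        result.append(abs(sum(w * cmath.exp(1j * n * u) for n, w in enumerate(weights))))
    return result


def mirrored_conjugate(weights: Sequence[complex]) -> List[complex]:
    """Reverse the column order and conjugate each weight."""
    return [complex(w).conjugate() for w in reversed(weights)]


if __name__ == "__main__":
    a = [1, 1j, -1, 1, -1j, 1j, 1, -1, 1j, 1]   # arbitrary 10-column layout of basic states
    b = mirrored_conjugate(a)
    angles = range(-90, 91, 5)
    gap = max(abs(p - q) for p, q in zip(array_factor_magnitude(a, angles),
                                          array_factor_magnitude(b, angles)))
    print(a != b, gap < 1e-9)   # True True: two inputs, one far field
```

A global phase factor on all weights is another trivial source of duplicates; a real metasurface model has many less obvious ones, which is why a purely deterministic inverse network tends to average conflicting targets.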

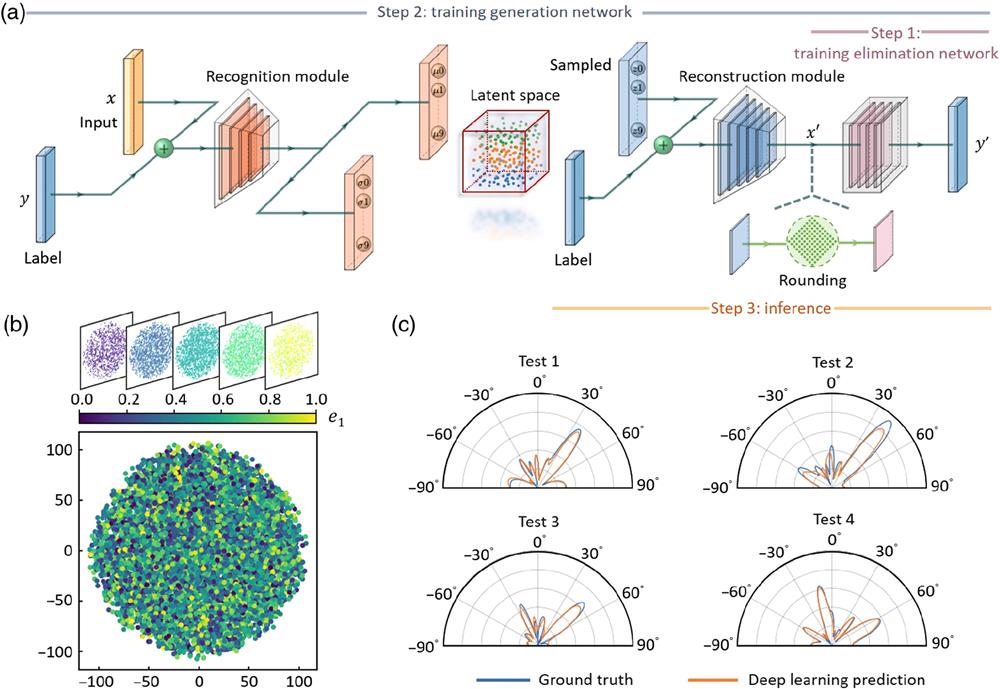

To empower the intelligent invisible drone, we propose stochastic-evolution learning (the generation-elimination network), which contains two cascaded networks, the generation network (CVAE) and the elimination network (a fully connected neural network), as depicted in Fig. 3(a). For clarity, we denote the spatiotemporal metasurface distribution as $x$ (the input) and the far-field pattern as $y$ (the label). In addition, a latent variable $z$ is introduced to build a probabilistic relationship between $x$ and $y$.37,38 The CVAE, composed of a recognition module, a latent space, and a reconstruction module, aims to learn the intractable conditional distribution $p(x \mid y)$ and produce a family of candidates rather than a single-valued mapping. For a given far-field pattern, the generation network acts as a producer that manufactures a constellation of metasurfaces, and the elimination network acts as an inspector that picks out the optimal one. Because this process resembles biological evolution in nature, we term it stochastic-evolution learning. In the training phase, we first train the elimination network (the forward mapping from $x$ to $y$), which is single-valued and free of the nonuniqueness issue. In the second step, we freeze the elimination network and attach it to the generation network. The input $x$ and the label $y$ are encoded by the recognition module into 10 sets of Gaussian variational parameters $(\mu_i, \sigma_i)$, which constitute the latent space. The key point is that the true posterior $p(z \mid x, y)$ of the latent variable is approximated by a multivariate Gaussian posterior $q_\phi(z \mid x, y)$, where $\phi$ denotes the variational parameters, and $q_\phi$ is trained to approach the prior $p(z)$. Latent Gaussian variables $z$ are then sampled from the latent space and decoded into the reconstructed output $\hat{x}$, which is finally mapped to $\hat{y}$ by the elimination network.
The spectral difference between $y$ and $\hat{y}$ is calculated as the prediction loss (Supplementary Note 5 in the Supplementary Material). As an example, we consider spatiotemporal metasurfaces with 10 columns. In the last step of the inference phase, a rounding operation forces each output onto one of the 81 equivalent states before the final elimination; see Fig. S7 in the Supplementary Material. After rounding, the best candidate is selected, reaching an accuracy of 97.68%. Figure 3(b) visualizes the latent space, using t-distributed stochastic neighbor embedding (t-SNE)39 to reduce its dimension from ten to two, and an autoencoder to compress the high-dimensional data into continuous one-dimensional data. Whatever $y$ is assigned, the extracted latent distribution is Gaussian and accords with the predefined standard Gaussian prior $\mathcal{N}(0, I)$, and each point can be decoded into a spatiotemporal metasurface distribution.
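The inference flow described above can be sketched without any deep-learning library: sample latent variables, decode them, round each column to the 81 equivalent states, and let the forward network eliminate the inferior candidates. This is a schematic under heavy assumptions. `toy_decoder` and `toy_forward` are hypothetical stand-ins for the trained reconstruction module and elimination network, and the far field is modeled with a 1D array factor. The latent dimension of 10 and the mean-squared prediction loss are illustrative choices, and the equivalent states are idealized (unit-amplitude basic states, L = 8).

```python
import cmath
import math
import random
from typing import Callable, List, Sequence, Tuple

# 81 idealized equivalent states for L = 8: a_0 = (p + 1j*q) / 8 with |p| + |q| <= 8, p + q even.
EQUIVALENT_STATES = [complex(p, q) / 8 for p in range(-8, 9) for q in range(-8, 9)
                     if abs(p) + abs(q) <= 8 and (p + q) % 2 == 0]
ANGLES = [math.radians(a) for a in range(-60, 61, 10)]


def toy_forward(x: Sequence[complex]) -> List[float]:
    """Hypothetical stand-in for the elimination network: 1D array-factor magnitude."""
    kd = 2 * math.pi * 0.41
    return [abs(sum(w * cmath.exp(1j * n * kd * math.sin(t)) for n, w in enumerate(x)))
            for t in ANGLES]


def toy_decoder(z: Sequence[float], y: Sequence[float]) -> List[complex]:
    """Hypothetical stand-in for the reconstruction module; a trained decoder would use y."""
    return [complex(z[i], z[(i + 1) % len(z)]) / 2 for i in range(len(z))]


def round_to_equivalent(x: Sequence[complex]) -> List[complex]:
    """Snap each column to the nearest of the 81 equivalent states (the rounding step)."""
    return [min(EQUIVALENT_STATES, key=lambda e: abs(e - v)) for v in x]


def generate_and_eliminate(y: Sequence[float],
                           decoder: Callable = toy_decoder,
                           forward: Callable = toy_forward,
                           n_candidates: int = 64, latent_dim: int = 10,
                           seed: int = 0) -> Tuple[List[complex], float]:
    """Generate candidates from the prior, then keep the one with the lowest prediction loss."""
    rng = random.Random(seed)
    best, best_loss = None, math.inf
    for _ in range(n_candidates):
        # Training uses z = mu + sigma * eps; at inference z is drawn from the prior N(0, I).
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        x_hat = round_to_equivalent(decoder(z, y))            # generation + rounding
        y_hat = forward(x_hat)                                # forward prediction
        loss = sum((p - q) ** 2 for p, q in zip(y_hat, y)) / len(y)
        if loss < best_loss:                                  # elimination: keep the best
            best, best_loss = x_hat, loss
    return best, best_loss


if __name__ == "__main__":
    target = toy_forward([1, 1j, -1, -1j] * 2 + [1, 1j])   # far field of a known layout
    x_best, loss = generate_and_eliminate(target)
    print(len(x_best), loss >= 0.0)   # 10 True
```

Because every candidate is scored by the same forward model, the loop can also return the top few candidates instead of one, which is how such a framework offers users more than one answer.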

Fig. 3. Architecture of the stochastic-evolution learning that drives the autonomous invisible drone. (a) The proposed network consists of two cascaded networks, namely, the generation network and the elimination network. The CVAE-based generation network, composed of a recognition module, a latent space, and a reconstruction module, produces diverse candidates, and the elimination network, a fully connected neural network, filters out all inferior candidates. The layer-level illustration of the network and the complete training process are given in Supplementary Note 5 in the Supplementary Material. The 10 sets of Gaussian variational parameters generated by the preceding layer of the recognition module constitute the latent space. (b) Latent space visualization. For each point in the extracted Gaussian distribution, the output metasurface distribution is retrieved by concatenating the sampled point (i.e., the latent variable) with the far-field pattern (i.e., the label information) and passing the result through the reconstruction module. (c) Test instances. The deep-learning predictions are filtered by the elimination network to retain the best one. A prominent advantage of this framework is that it effectively addresses the nonuniqueness issue in inverse design and provides users with more than one answer.
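For readers less familiar with CVAEs, the training objective can be written in the standard form below. This is a hedged summary rather than the authors' exact loss, which is given in Supplementary Note 5. It assumes two terms. The first is a Kullback–Leibler term that pulls $q_\phi(z \mid x, y)$ toward the prior. The second is the prediction loss through the frozen elimination network, written here as $h$ and scaled by a hypothetical weight $\beta$. The symbol $g_\theta$ denotes the reconstruction module. A reconstruction term on $\hat{x}$, as in the textbook CVAE, may also be included.

```latex
\mathcal{L}(\theta,\phi) = D_{\mathrm{KL}}\big(q_\phi(z \mid x, y)\,\|\,p(z)\big)
  + \beta\,\mathbb{E}_{\epsilon \sim \mathcal{N}(0, I)}\Big[\big\lVert y - h\big(g_\theta(\mu + \sigma \odot \epsilon,\; y)\big)\big\rVert_2^2\Big],
  \qquad p(z) = \mathcal{N}(0, I),

D_{\mathrm{KL}}\big(\mathcal{N}(\mu, \operatorname{diag}\sigma^2)\,\|\,\mathcal{N}(0, I)\big)
  = \frac{1}{2}\sum_{i=1}^{10}\big(\mu_i^2 + \sigma_i^2 - 1 - \ln \sigma_i^2\big).
```

The reparameterization $z = \mu + \sigma \odot \epsilon$ keeps the sampling step differentiable with respect to the 10 pairs $(\mu_i, \sigma_i)$. The closed-form KL term is what drives the latent distribution toward the standard Gaussian seen in Fig. 3(b).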

3.4 Experimental Measurement for the Flying Invisible Drone

Figure 4(b) shows a photograph of the intelligent invisible drone, which is adorned with four veneers of spatiotemporal metasurfaces. The top and bottom panels each have 10 columns, and each lateral panel has 8 columns. All meta-atoms in a column (10 meta-atoms for the top panel and 11 for the other panels) share the same bias voltage. The drone has a loading capacity of 15 kg. We integrate the pretrained deep-learning model into the Jetson Xavier NX to promptly send instructions to the spatiotemporal metasurfaces according to the detected information. The microwave EM detector is composed of a four-port wideband coplanar antenna distributed along a hexadecagonal array. For an incoming wave, the induced surface voltages are fed into a machine-learning model for simultaneous acquisition of frequency, direction of arrival, and polarization;33 see Supplementary Note 9 in the Supplementary Material. The design and results of the camera and gyroscope are detailed in Supplementary Notes 7 and 8 in the Supplementary Material, respectively. Note that a complete perception-decision-action cycle is finished on a millisecond time scale, with EM detection taking up most of the time.
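The panel layout above implies the following bookkeeping, worked out as a short script. The column and meta-atom counts follow directly from the text. Two quantities rest on assumptions about how the sequences might be encoded, not on the actual RS485 protocol: the number of control lines (one per PIN diode per column) and the 2-bit-per-slot payload for one full update with L = 8. The panel names are hypothetical labels.

```python
# (columns, meta-atoms per column) for each veneer, as described in the text.
PANELS = {
    "top": (10, 10),
    "bottom": (10, 11),
    "lateral_left": (8, 11),   # hypothetical label
    "lateral_right": (8, 11),  # hypothetical label
}
L = 8                  # time slots per modulation period
BITS_PER_STATE = 2     # four states -> 2 bits (assumed encoding)

columns = sum(c for c, _ in PANELS.values())
meta_atoms = sum(c * n for c, n in PANELS.values())
control_lines = 2 * columns                  # assumption: one bias line per diode per column
payload_bits = columns * L * BITS_PER_STATE  # one full set of temporal sequences

if __name__ == "__main__":
    print(columns, meta_atoms, control_lines, payload_bits // 8)  # 36 386 72 72
```

Under these assumptions a complete reconfiguration amounts to well under 100 bytes, small enough that it is unlikely to dominate the millisecond-scale cycle.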

![Experimental measurement of autonomous invisible drone flying in the sky](/richHtml/ap/2024/6/1/016001/img_004.png)

Fig. 4. Experimental measurement of the autonomous invisible drone flying in the sky. (a) Experimental setup of the intelligent invisible drone outside the laboratory. The invisible drone flies freely in the sky and passes through a conical detection region excited by a transmitting antenna, while three antennas detect the scattered waves in real time. The dotted curve shows the flight trajectory. VNA, vector network analyzer. (b) Photograph of the intelligent invisible drone. (c), (d) Simulation results when the cloaked/bare drone is illuminated by an obliquely incident wave. The bare drone produces a strong scattered field that exposes it to hostile radar, whereas the cloaked drone largely absorbs the incident wave. (e) Experimental time-varying electric field recorded by the three receivers. The signal remains almost stable and matches the background when the cloaked drone flies from left to right, in stark contrast to the erratic fluctuation in the uncloaked case (Video 1, mp4, 28.9 MB [URL: https://doi.org/10.1117/1.AP.6.1.016001.s1]; Video 2, mp4, 22.4 MB [URL: https://doi.org/10.1117/1.AP.6.1.016001.s2]).

We carry out the experiment at an outdoor test site. The airborne drone glides through the sky and passes through a conical detection region formed by a transmitting antenna, while multiple receiving antennas at random positions monitor the scattered wave in real time. The feasibility is assessed in both real-world flight and numerical simulation. In the simulation [Fig. 4(c)], the cloaked drone hardly produces scattered waves, because the spatiotemporal metasurfaces are tailored to operate at the origin of the complex plane in Fig. 2(c) (ideally, zero reflectivity). In stark contrast, the bare drone generates strong scattered waves that render it discernible [Fig. 4(d)]. In the experiment, the drone is programmed to take off from the lake, enter the conical region ( to 8 s), and land on the right. For the cloaked drone, the time-varying scattering signal sensed by the three receivers remains almost stable, as if nothing were flying through the conical region [Fig. 4(e)]. By contrast, the bare drone makes the detected signal fluctuate dramatically, distinct from that of the background; see the full dynamics in Videos 1 and 2. The measurement is conducted at a working frequency of around 3.1 GHz. One may worry that the spatiotemporal metasurfaces also produce other harmonic waves that could expose the drone. However, the amplitudes of these harmonics are very small, and they can in turn be engineered to produce a Doppler cloaking effect.31

3.5 Autonomous Invisible Drone against an Amphibious Background

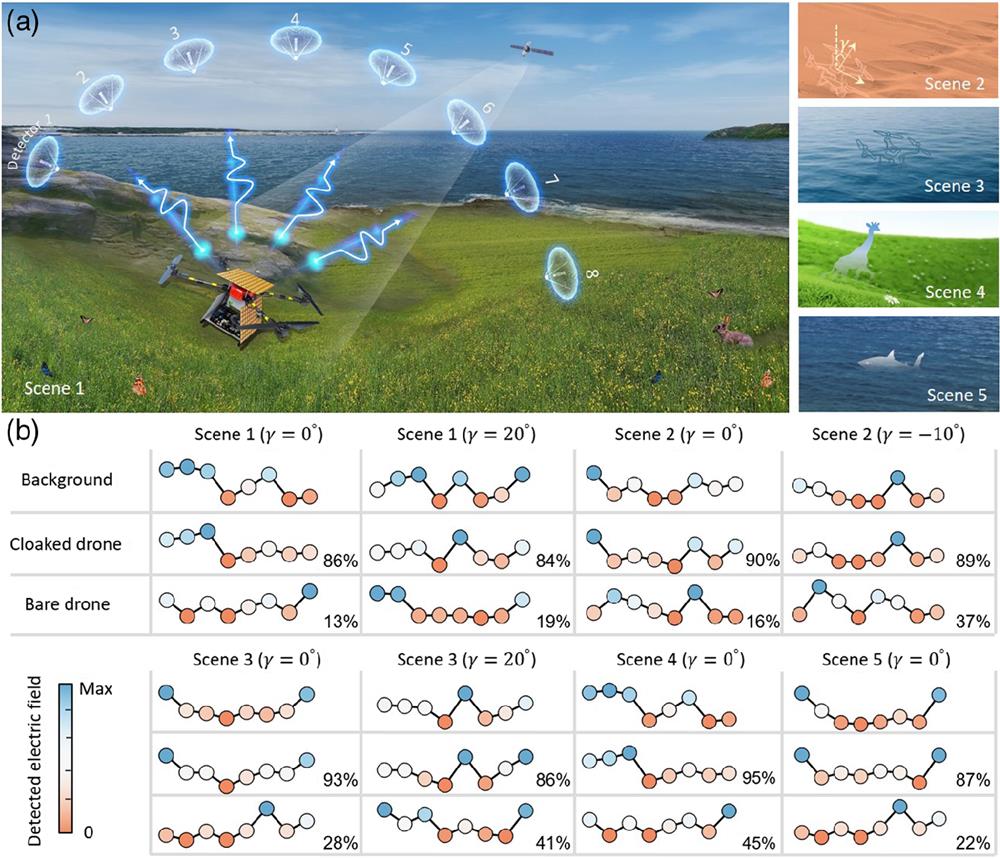

We further assess the performance of the intelligent invisible drone on kaleidoscopic terrains (e.g., grassland, sand, and sea). The goal is to conceal the drone in the background or camouflage it as other objects. Figure 5(a) schematically illustrates the experimental setup, in which eight receiving antennas are distributed along an arc to detect the scattered wave. The detailed operating procedure is given in Supplementary Note 12 in the Supplementary Material. On each terrain, the intelligent invisible drone perches with a random tilt attitude to test robustness. The experimental results are shown in Fig. 5(b), where the eight circles represent the values detected by the eight antennas. In scenes 1, 2, and 3, the electric field detected for the cloaked drone at each attitude is consistent with that of the background and distinct from that of the bare drone. To quantify this, we employ the Pearson correlation coefficient29 as the similarity between the background and the cloaked/bare drone. The invisible drone achieves an accuracy of over 84%, in striking contrast with the bare drone (about 40% or lower). In addition, other user-defined illusive scattering patterns can also be achieved with the intelligent invisible drone, for example, a giraffe on the grassland and a shark in the sea [scenes 4 and 5 in Fig. 5(a)]. The high accuracy in scenes 4 and 5 further highlights the versatility and scalability of our intelligent drone.
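The Pearson correlation used here is straightforward to compute from the eight antenna readings. The sketch below is a plain implementation of the textbook formula. The text does not state how the coefficient is converted into the quoted percentages, so no conversion is assumed, and the example inputs are toy values rather than measured data.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient r between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Toy readings for eight receivers (not measured data).
    background = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    print(pearson(background, [2 * v + 1 for v in background]))  # 1.0
    print(pearson(background, background[::-1]))                 # -1.0
```

Because r is insensitive to an overall scale and offset, it rewards matching the angular shape of the scattered field across the eight receivers rather than its absolute level.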

Fig. 5. Experimental demonstration of the autonomous invisible drone amid an amphibious background. (a) Schematic illustration of the intelligent invisible drone landed on grassland. Eight receiving antennas are randomly distributed along an arc to detect the surrounding scattered wave. The right insets show different scenes, including sand and sea.

4 Conclusion

To sum up, we have demonstrated how data science converges with spatiotemporal metasurfaces to realize a fully intelligent invisibility cloak that can self-adapt to kaleidoscopic environments and counter various means of detection. On the technical side, we have realized a self-driving invisible drone equipped with sensing, decision, and actuation components that remains invisible and customizes on-demand scattering behaviors against the paradigmatic backgrounds of sea, land, and air. The built-in generation-elimination network acts like a savvy commander that unlocks the intricate interaction between EM waves and metasurfaces, also blazing an “off-piste” path for real-time inverse design with one-to-many and many-to-many correspondences. Amid the growing family of invisibility cloaks, our experimental evidence highlights the key advances of an intelligent invisible drone that bypasses tiresome human intervention and sculpts the scattering field in space and time. The platform can be combined with wide-bandwidth40 and gain metasurfaces41 to usher in a surrealistic intelligent cloak.

The age of intelligent cloaks and intelligent metasurfaces is just dawning. Efforts toward this goal have spanned streamlining photonic design, capturing latent physics, and automating metadevices. Our work points the way toward a revamped paradigm of the intelligent cloak and may incite new classes of intelligent metadevices that adopt spatiotemporally controlled structures for advanced on-demand functionalities. In the long term, we envision that knowledge can be migrated among foggy scenarios by sharing common experience through transfer learning,42 and that new breakthroughs in deep learning will greatly improve robustness in data-scarce and model-agnostic cases.43 Finally, the outcomes could have ramifications for a broad range of applications, such as cross-wavelength imaging, energy harvesting, and wireless communication.44

Chao Qian received his PhD degree from Zhejiang University, Hangzhou, in 2020. He was a visiting PhD student at the California Institute of Technology from 2019 to 2020. In 2021, he joined the Zhejiang University/University of Illinois at Urbana-Champaign Institute as an assistant professor. His research interests are metamaterials/metasurfaces, electromagnetic scattering, inverse design, and deep learning. He has published more than 40 papers in high-profile journals, including Nature Photonics, Nature Communications, Science Advances, and Physical Review Letters. He is an associate editor of Progress In Electromagnetics Research (PIER) and a reviewer for more than 10 renowned academic journals. He was included in Stanford University's 2023 World's Top 2% Scientists list and in the Emerging Leaders 2024 list.

Hongsheng Chen (Fellow, IEEE) received his BSc and PhD degrees from Zhejiang University, Hangzhou, China, in 2000 and 2005, respectively. In 2005, he joined the College of Information Science and Electronic Engineering, Zhejiang University, and was promoted to full professor in 2011. He was a visiting scientist (2006 to 2008) and a visiting professor (2013 to 2014) with the Research Laboratory of Electronics, Massachusetts Institute of Technology, USA. In 2014, he received the distinguished Cheung-Kong Scholar award. He currently serves as Dean of the College of Information Science and Electronic Engineering, Zhejiang University. He received the National Science Fund for Distinguished Young Scholars of China in 2016 and the Natural Science Award (first class) from the Ministry of Education, China, in 2020. His current research interests are metamaterials, invisibility cloaking, transformation optics, and topological electromagnetics. He has coauthored more than 300 international refereed journal papers. His work has been highlighted by many scientific magazines and public media, including Nature, Scientific American, MIT Technology Review, and The Guardian. He serves as topical editor of the Journal of Optics, deputy editor-in-chief of PIER, and editorial board member of Nature’s Scientific Reports, Nanomaterials, and Electromagnetic Science. He served as general co-chair of the Photonics & Electromagnetics Research Symposium in 2022 and of the International Applied Computational Electromagnetics Symposium in 2023.

Biographies of the other authors are not available.

[7] M. Gharghi et al. A carpet cloak for visible light. Nano Lett., 2011, 11: 2825-2828.

[11] Z. Qin et al. Superscattering of water waves. Natl. Sci. Rev., 2022, 10: nwac255.

[24] L. Zhang et al. Space-time-coding digital metasurfaces. Nat. Commun., 2018, 9: 4334.

[39] L. van der Maaten, G. Hinton. Visualizing data using t-SNE. J. Mach. Learn. Res., 2008, 9: 2579-2605.


Chao Qian, Yuetian Jia, Zhedong Wang, Jieting Chen, Pujing Lin, Xiaoyue Zhu, Erping Li, Hongsheng Chen. Autonomous aeroamphibious invisibility cloak with stochastic-evolution learning[J]. Advanced Photonics, 2024, 6(1): 016001.