Dynamic 3D measurement error compensation technology based on phase-shifting and fringe projection

1 Introduction

Structured-light 3D measurement is non-contact, efficient, and low-cost, and is widely used in industrial measurement, mold manufacturing, medical imaging, cultural relic reconstruction, and other fields[1-4]. 3D measurement based on phase-shifting and fringe projection offers good measurement accuracy, high point density, and strong anti-interference ability, and is the most widely used technique for high-precision static measurement[5]. In dynamic 3D measurement, however, object motion breaks the ideal correspondence between object points, image points, and phases across the fringe images. Under this condition, the traditional phase formulas produce significant phase errors, which greatly reduce the accuracy of the 3D measurement.

For dynamic 3D measurement, most studies use single-frame structured light projection[6-8], but the inherent shortcomings of this technique in measurement accuracy and anti-interference ability cannot be ignored. Other studies use high-speed photography to shorten the time interval between fringe images and thereby suppress dynamic 3D measurement errors[9-12]; this approach is mainly suitable when the object moves slowly and the required measurement accuracy is modest. LIU Y J[13] and WEISE T[14] proposed dynamic 3D measurement error compensation based on phase-shifting and fringe projection, which restores the phase accurately by analyzing the equivalent phase-shifting errors. The procedure is generally as follows. First, the phase distribution of each image and the phase shifts between images are calculated with the Fourier-assisted phase-shifting method[15-16]. Second, the phase distribution of the moving object is calculated with the equal-step phase-shifting method or with the random step-size phase-shifting algorithm based on least squares[17-18]. The main problem with this approach is that the phase shifts between images calculated by the Fourier-assisted phase-shifting method are not sufficiently precise. In fact, its phase accuracy is essentially no better than that of single-frame structured light projection.

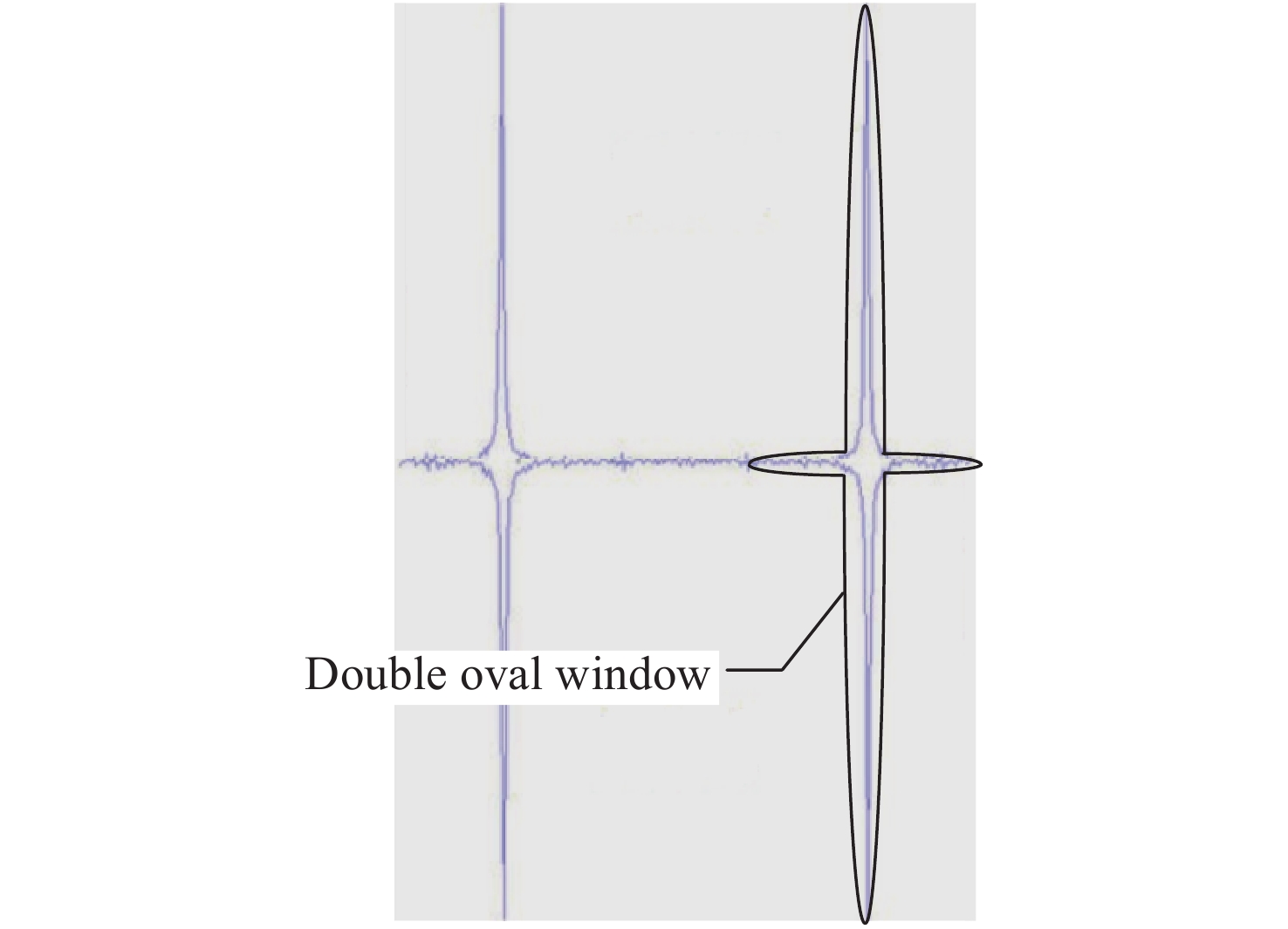

This paper proposes a new dynamic 3D measurement error compensation technology based on phase-shifting and fringe projection. The improved Fourier-assisted phase-shifting method and the advanced iterative algorithm based on least squares together improve the calculation accuracy of the phase shifts between images, and thereby the calculation accuracy of the phase of the moving object. The improved Fourier-assisted phase-shifting method introduces a double elliptical filter window, which fully retains the spectrum information while greatly reducing the influence of redundant noise data; this effectively suppresses the spread of errors from positions where the fringes change abruptly and improves the accuracy of the phase-shift analysis. The advanced iterative algorithm based on least squares[19] takes the equivalent phase shifts obtained by the improved Fourier-assisted phase-shifting method as initial values and uses the random step-size phase-shifting algorithm to iteratively calculate the equivalent phase shifts and the phase until the accuracy meets the requirements. Mature techniques from static 3D measurement, such as calibration[20-24] and phase unwrapping[25-26], are not discussed in detail in this paper.

2 Principle

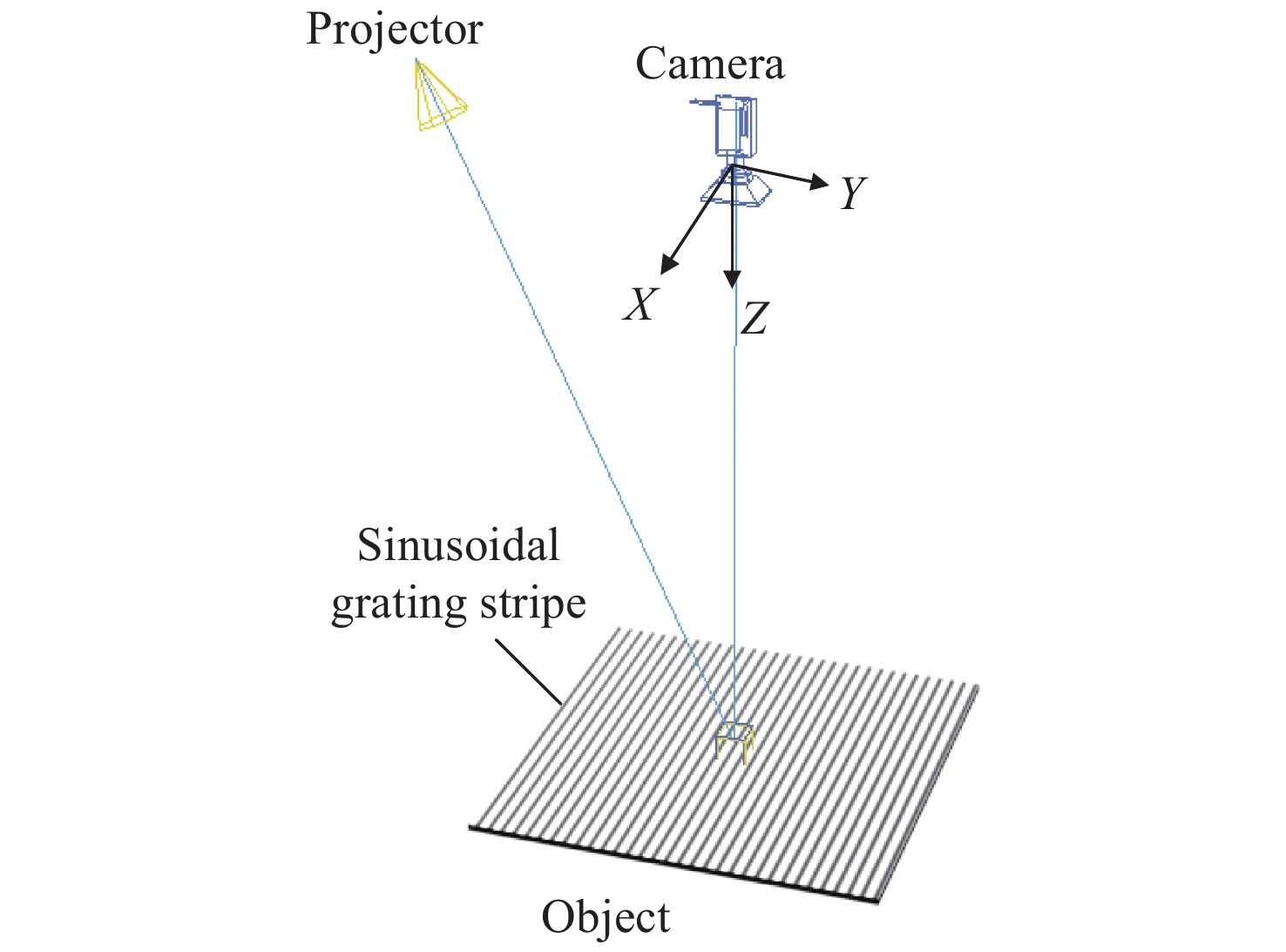

The 3D measurement system based on phase-shifting and fringe projection is shown in Fig. 1. The origin of dynamic 3D measurement errors is explained below, taking the three-step phase-shifting method as an example.

For a static object, a given object point has the same pixel coordinates in all fringe images, and its image gray values are:

In the formula, Id and Ie represent the background intensity and the modulation intensity, respectively, and

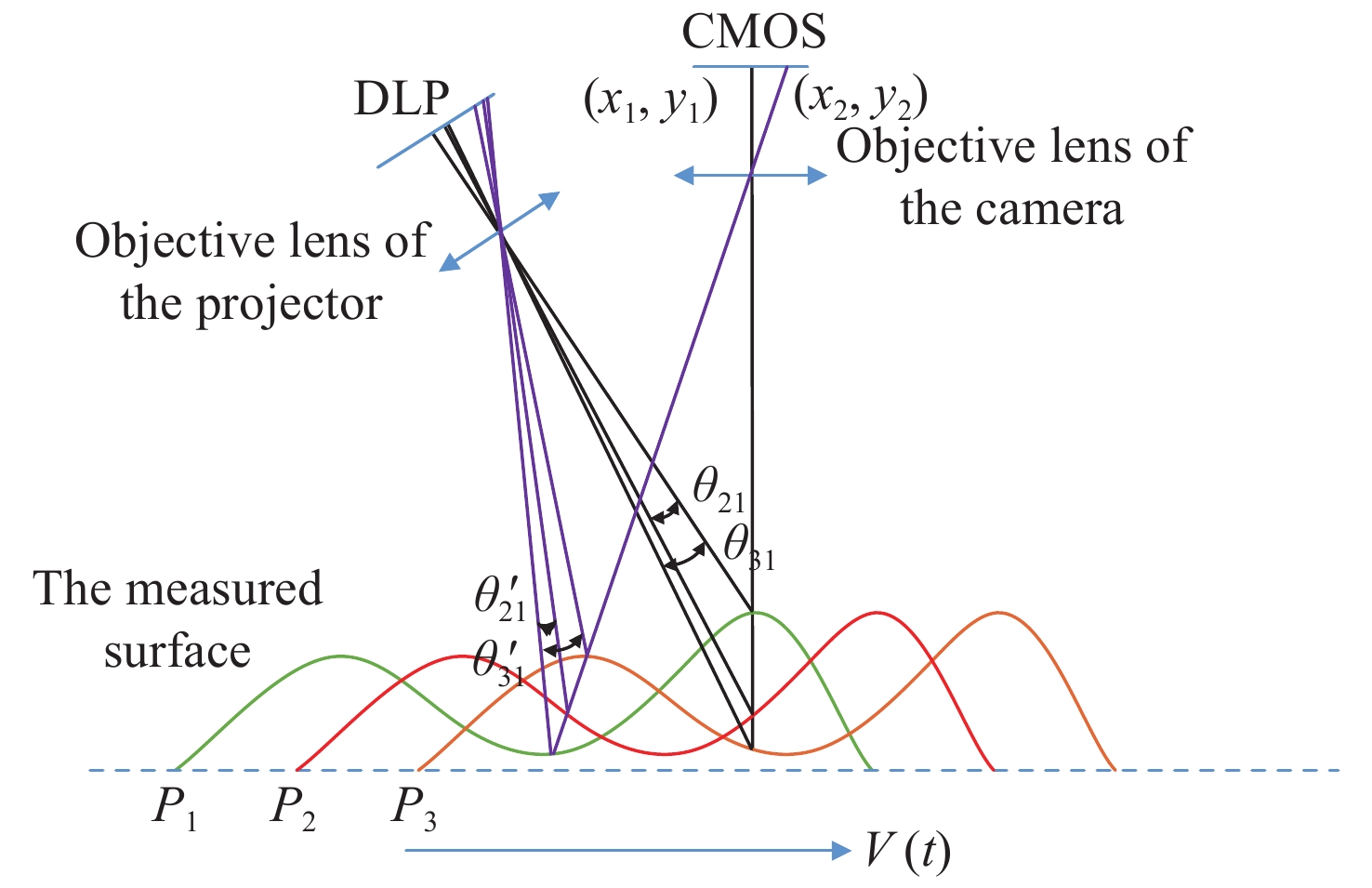
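Because the paper's intensity and phase formulas are not reproduced in this text, the static three-step case can be illustrated with a minimal sketch that assumes the common convention I_n = Id + Ie·cos(phi + delta_n) with delta_n = -2π/3, 0, +2π/3. This shift convention is an assumption. Under it, sqrt(3)·(I1 - I3) = 3·Ie·sin(phi) and 2·I2 - I1 - I3 = 3·Ie·cos(phi), so the wrapped phase follows from a single `arctan2`. The function name `three_step_phase` is hypothetical.

```python
import numpy as np

def three_step_phase(i1, i2, i3):
    """Wrapped phase from three fringe images.

    Assumes I_n = Id + Ie*cos(phi + delta_n) with delta_n = -2pi/3, 0, +2pi/3
    (a common convention, assumed here rather than taken from the paper).
    """
    # sqrt(3)*(I1 - I3) = 3*Ie*sin(phi);  2*I2 - I1 - I3 = 3*Ie*cos(phi)
    return np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)


# Synthetic static pixels: background Id, modulation Ie, known true phase phi
phi = np.linspace(-np.pi, np.pi, 9, endpoint=False)
i_d, i_e = 120.0, 80.0
shifts = (-2 * np.pi / 3, 0.0, 2 * np.pi / 3)
i1, i2, i3 = (i_d + i_e * np.cos(phi + d) for d in shifts)
recovered = three_step_phase(i1, i2, i3)
```

The background term cancels in both the numerator and the denominator, which is why the formula only needs the three gray values at a pixel.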

For a moving object, the origin of dynamic 3D measurement errors can be analyzed by reasoning in reverse, as shown in Fig. 2. If the object moves along the

In this case, calculating the phase at this position with formula (2) produces significant errors. Although these errors are caused by object motion, they are equivalent to phase-shifting errors of

If the equivalent phase shifts between images can be determined accurately, the dynamic 3D measurement error can be effectively suppressed and the dynamic 3D measurement accuracy improved.
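The equivalence between motion and phase-shifting error can be illustrated numerically. The sketch below assumes a hypothetical motion model in which, relative to the second frame, motion adds -eps to the first frame's shift and +eps to the third. The actual step therefore becomes alpha = 2π/3 + eps, while the standard three-step formula still assumes 2π/3. With this model the computed phase obeys tan(phi_hat) = sqrt(3)·cot(alpha/2)·tan(phi), so the error is periodic at twice the fringe phase and vanishes only when eps = 0. The names `three_step_phase` and `dynamic_phase_error` are illustrative.

```python
import numpy as np

def three_step_phase(i1, i2, i3):
    # Standard formula; exact only for steps of exactly 2*pi/3
    return np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)

def dynamic_phase_error(eps, i_d=120.0, i_e=80.0, n=720):
    """Max wrapped phase error caused by an equivalent shift error eps (rad).

    Hypothetical motion model: actual shifts are -2pi/3 - eps, 0, +2pi/3 + eps.
    """
    phi = np.linspace(-np.pi, np.pi, n, endpoint=False)
    shifts = (-2 * np.pi / 3 - eps, 0.0, 2 * np.pi / 3 + eps)
    i1, i2, i3 = (i_d + i_e * np.cos(phi + d) for d in shifts)
    err = np.angle(np.exp(1j * (three_step_phase(i1, i2, i3) - phi)))
    return float(np.max(np.abs(err)))

for eps in (0.0, 0.05, 0.2):
    print(f"eps = {eps:.2f} rad -> max phase error {dynamic_phase_error(eps):.4f} rad")
```

If the true shifts were known, a least-squares phase formula that uses them would remove this error, which is the motivation for estimating the equivalent phase shifts.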

3 Methods

3.1 The improved Fourier assisted phase-shifting method

The Fourier-assisted phase-shifting method is a global analysis method. At locations where the fringes change abruptly, the phases it calculates have large errors, and these errors spread to the surrounding area as gradually decaying oscillations. Adding a window function to the Fourier transform effectively limits this spread and improves the accuracy of the global phase calculation. The windowed Fourier transform is as follows:

In the formula,

in the formula,

The spectrum distribution in the window field centered on

in the formula,

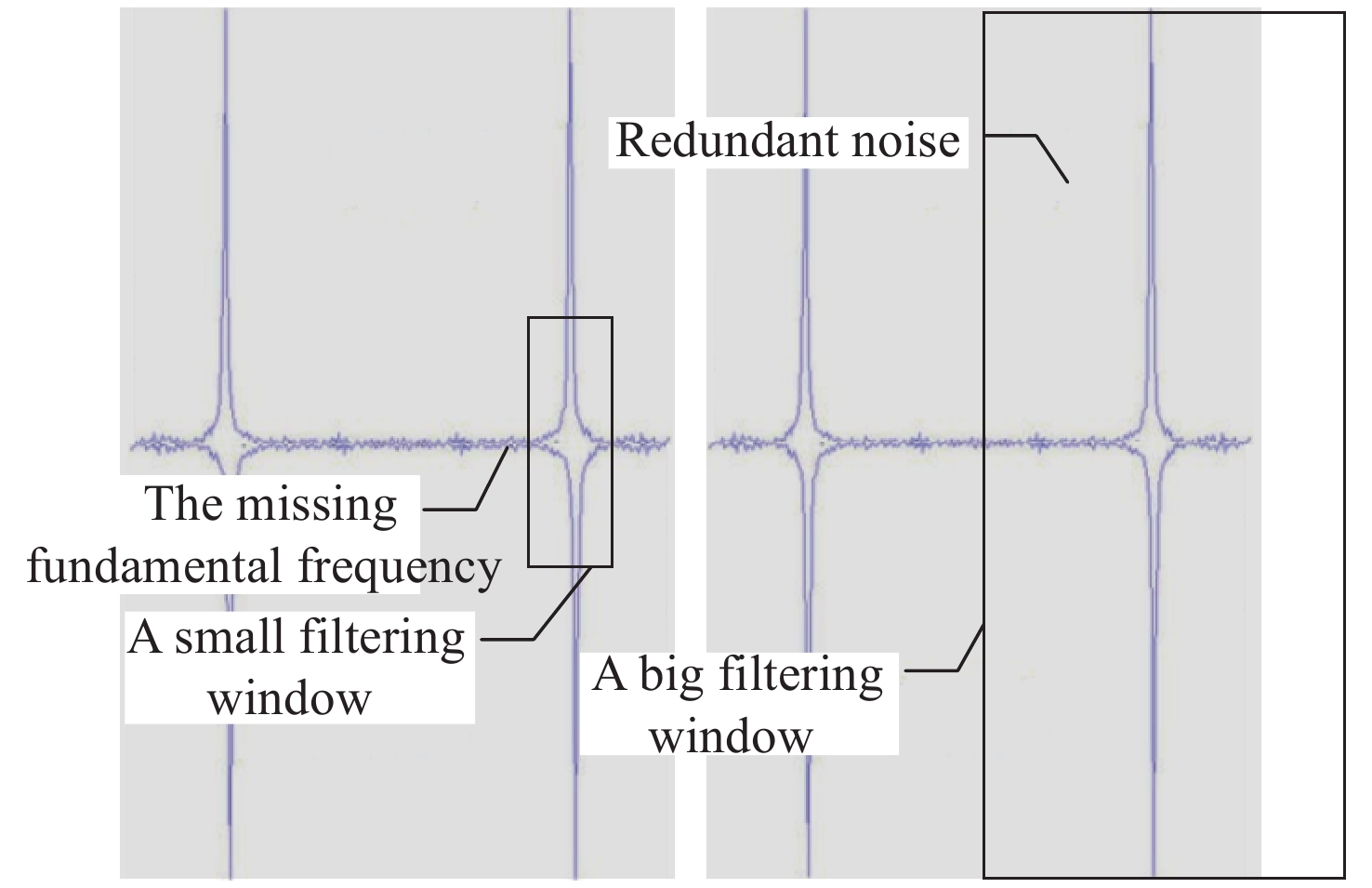

The traditional frequency-domain filter uses a rectangular window, as shown in Fig. 3. Such a filter cannot extract the entire first-order spectrum, and it retains a large amount of redundant noise in the extraction area, which degrades the accuracy of the spectrum analysis. A Gaussian window filter alleviates these problems to some extent, but its window shape is still not flexible enough. Existing research shows that the first-order spectrum usually has an elliptical distribution[27], so this paper proposes a double elliptical filter, as shown in Fig. 4.
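The double elliptical filter's expression is not recoverable from this text, so the sketch below only illustrates the underlying idea: a single, rotatable elliptical mask centred on the first-order lobe of a 2D spectrum, compared with the rectangular window it replaces. The function `elliptical_mask` and its parameters `center`, `semi_axes`, and `angle` are hypothetical, and how the paper combines two ellipses is not reproduced.

```python
import numpy as np

def elliptical_mask(shape, center, semi_axes, angle=0.0):
    """Boolean mask, True inside an ellipse on an fftshift-ed spectrum.

    shape: (rows, cols); center: (row, col) of the first-order peak;
    semi_axes: (a_row, a_col) in frequency bins; angle: rotation in radians.
    """
    rows, cols = np.indices(shape)
    dr, dc = rows - center[0], cols - center[1]
    # Rotate the offsets into the ellipse's own axes
    u = dc * np.cos(angle) + dr * np.sin(angle)
    v = -dc * np.sin(angle) + dr * np.cos(angle)
    return (u / semi_axes[1]) ** 2 + (v / semi_axes[0]) ** 2 <= 1.0


# Vertical fringes with 16 cycles across a 256 x 256 image
n = 256
x = np.arange(n)
image = 100.0 + 50.0 * np.cos(2 * np.pi * 16 * x / n)[None, :] * np.ones((n, 1))
spectrum = np.fft.fftshift(np.fft.fft2(image))
# After fftshift, zero frequency sits at (128, 128) and the +1st order at (128, 144)
mask = elliptical_mask(spectrum.shape, center=(128, 144), semi_axes=(10, 8))
first_order = spectrum * mask
```

The elliptical support keeps the lobe while excluding the zero-order term and the -1st order at (128, 112), which a loosely sized rectangle would be more likely to admit along with extra noise bins.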

The mathematical expression of the double elliptical filter is as follows:

In the formula,

After frequency-domain filtering, the remaining steps are the same as in the traditional Fourier-assisted phase-shifting method. An inverse Fourier transform of the extracted fundamental-frequency component yields its spatial distribution, and the phase at different positions is obtained by moving the Gaussian window. The phase difference between two fringe images at a position gives the phase shift at that position.
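The steps above can be sketched in 1D, assuming a known carrier frequency `f0` (cycles per pixel) and a Gaussian window of width `sigma` that is moved along a fringe row. For simplicity the sketch keeps a rectangular band around +f0 instead of the paper's double elliptical 2D filter, so it illustrates the procedure rather than reproducing the improved method. Function names and parameter values are hypothetical.

```python
import numpy as np

def windowed_first_order(row, x0, f0, sigma=32.0):
    """Complex first-order component of a 1D fringe row at pixel x0.

    Gaussian window centred at x0 -> FFT -> keep a band around +f0 -> IFFT.
    """
    x = np.arange(row.size)
    window = np.exp(-((x - x0) ** 2) / (2.0 * sigma ** 2))
    spectrum = np.fft.fft(row * window)
    freqs = np.fft.fftfreq(row.size)        # cycles per pixel
    band = np.abs(freqs - f0) < f0 / 2.0    # simple band, not the paper's filter
    return np.fft.ifft(spectrum * band)[x0]

def phase_shift_at(row1, row2, x0, f0, sigma=32.0):
    """Wrapped phase shift of row2 relative to row1 at pixel x0."""
    c1 = windowed_first_order(row1, x0, f0, sigma)
    c2 = windowed_first_order(row2, x0, f0, sigma)
    return float(np.angle(c2 * np.conj(c1)))


# Usage: two rows of the same surface, the second shifted by 1.3 rad
n, f0 = 512, 1.0 / 16.0
x = np.arange(n)
phi = 0.8 * np.sin(2 * np.pi * x / n)       # slowly varying object phase
row1 = 120.0 + 80.0 * np.cos(2 * np.pi * f0 * x + phi)
row2 = 120.0 + 80.0 * np.cos(2 * np.pi * f0 * x + phi + 1.3)
estimate = phase_shift_at(row1, row2, x0=256, f0=f0)
```

Taking the angle of `c2 * conj(c1)` rather than subtracting two wrapped phases keeps the result wrapped to (-π, π] without a separate unwrapping step.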

3.2 The advanced iterative algorithm based on least squares

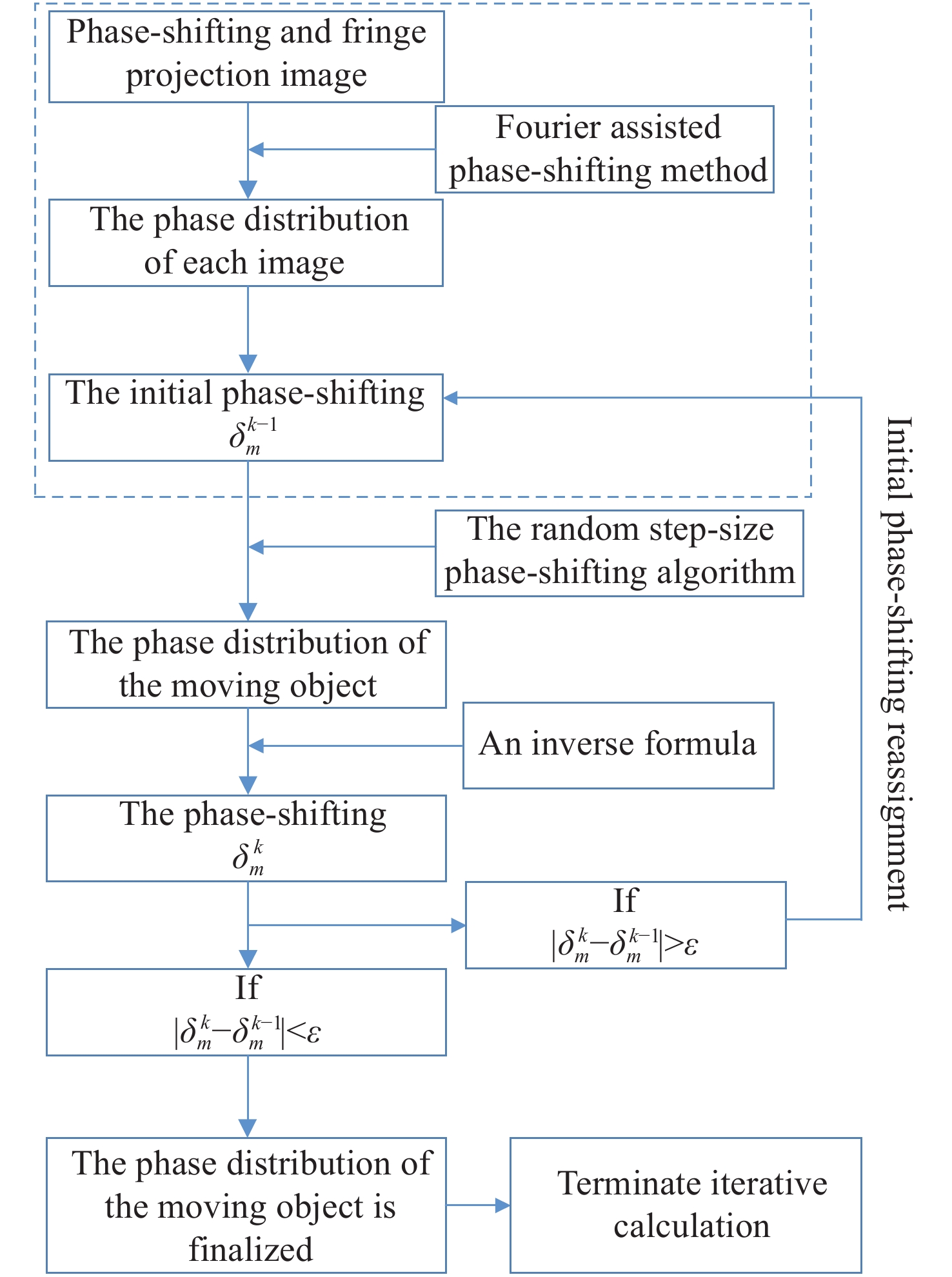

The process of the advanced iterative algorithm based on least squares is shown in Fig. 5.

In Fig. 5,

The initial phase shifts can be estimated with the improved Fourier-assisted phase-shifting method. In general, these phase shifts are random and unequal, so the phase must be calculated with the random step-size phase-shifting algorithm based on least squares. The formulas are as follows:

In the formulas, M is the number of projected fringe images,
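A minimal sketch of a least-squares phase calculation for known random step sizes, in the spirit of the generalized least-squares approach of ref. [18]. It assumes each pixel follows I_n = a + b·cos(delta_n) + c·sin(delta_n) with b = B·cos(phi) and c = -B·sin(phi), so phi = atan2(-c, b); at least three distinct shifts are needed. The function name `phase_from_shifts` and the (M, P) layout (M frames, P flattened pixels) are hypothetical choices.

```python
import numpy as np

def phase_from_shifts(frames, shifts):
    """Per-pixel least-squares phase for known, arbitrary phase shifts.

    frames: (M, P) array, one row per fringe image (pixels flattened).
    shifts: (M,) phase shifts delta_n in radians.
    Model: I_n = a + b*cos(delta_n) + c*sin(delta_n), b = B*cos(phi), c = -B*sin(phi).
    """
    shifts = np.asarray(shifts, dtype=float)
    design = np.column_stack([np.ones_like(shifts), np.cos(shifts), np.sin(shifts)])
    # One lstsq call solves every pixel at once: coeffs has shape (3, P)
    coeffs, *_ = np.linalg.lstsq(design, frames, rcond=None)
    _, b, c = coeffs
    return np.arctan2(-c, b)


# Usage: four frames with unequal (random-step) shifts
rng = np.random.default_rng(0)
phi_true = rng.uniform(-np.pi, np.pi, size=1000)
shifts = np.array([0.0, 1.9, 3.7, 5.2])
frames = 120.0 + 80.0 * np.cos(phi_true[None, :] + shifts[:, None])
phi = phase_from_shifts(frames, shifts)
```

Because the design matrix depends only on the shifts, it is shared by all pixels, which is why a single `lstsq` call with a 2D right-hand side suffices.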

In high-precision dynamic 3D measurement, the phase shifts calculated by the improved Fourier-assisted phase-shifting method are not always accurate enough. Therefore, this paper proposes the advanced iterative algorithm based on least squares, which calculates the phase shifts and the phase more accurately. Its basic idea is as follows. First, the phase shifts calculated by the improved Fourier-assisted phase-shifting method are taken as initial values, and the phase distribution is calculated, where

In the iterative process, a least-squares method is proposed for calculating the phase shifts from a known phase distribution. Its objective function is designed as follows:

in the formula,

To minimize
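The alternation described in this section can be sketched as follows. This is an illustration under stated assumptions, not the paper's objective function, which is not reproduced here. It assumes the background and modulation are uniform over the pixels used in the shift step and that each frame has a single equivalent shift. The per-pixel step fits the shifts as known, and the per-frame step fits I_nj = a_n + b_n·cos(phi_j) + c_n·sin(phi_j) over pixels with delta_n = atan2(-c_n, b_n). Shifts are referenced to the first frame. The names `fit_angle` and `advanced_iteration` and the tolerance are hypothetical.

```python
import numpy as np

def wrap(angle):
    """Wrap angles to the interval (-pi, pi]."""
    return np.angle(np.exp(1j * angle))

def fit_angle(angles, data):
    """Least-squares fit data = a + b*cos(angles) + c*sin(angles) along axis 0.

    Returns atan2(-c, b). With angles = shifts (M,) and data = frames (M, P) this
    is the per-pixel phase step; with angles = phase (P,) and data = frames.T
    (P, M) it is the per-frame shift step.
    """
    design = np.column_stack([np.ones_like(angles), np.cos(angles), np.sin(angles)])
    coeffs, *_ = np.linalg.lstsq(design, data, rcond=None)
    _, b, c = coeffs
    return np.arctan2(-c, b)

def advanced_iteration(frames, init_shifts, tol=1e-10, max_iter=200):
    """Alternate least-squares phase and shift estimates until the shifts settle.

    frames: (M, P) fringe intensities; init_shifts: (M,) initial shifts, e.g.
    from a Fourier-assisted estimate. Returns (phase (P,), shifts (M,)).
    """
    shifts = wrap(np.asarray(init_shifts, dtype=float))
    for _ in range(max_iter):
        phase = fit_angle(shifts, frames)               # shifts known -> phase
        new_shifts = fit_angle(phase, frames.T)         # phase known -> shifts
        new_shifts = wrap(new_shifts - new_shifts[0])   # reference to frame 0
        done = np.max(np.abs(wrap(new_shifts - shifts))) < tol
        shifts = new_shifts
        if done:
            break
    return fit_angle(shifts, frames), shifts


# Usage: synthetic random-step frames and a rough initial shift estimate
rng = np.random.default_rng(1)
phi_true = rng.uniform(-np.pi, np.pi, size=2000)
true_shifts = np.array([0.0, 1.9, 3.7, 5.2])
frames = 120.0 + 80.0 * np.cos(phi_true[None, :] + true_shifts[:, None])
init = true_shifts + np.array([0.0, 0.1, -0.1, 0.1])
phase, shifts = advanced_iteration(frames, init)
```

Referencing the shifts to the first frame removes the global offset that the two steps cannot determine on their own; the iteration converges to the solution with the same sign convention as the initial estimate.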

4 Experimental verification

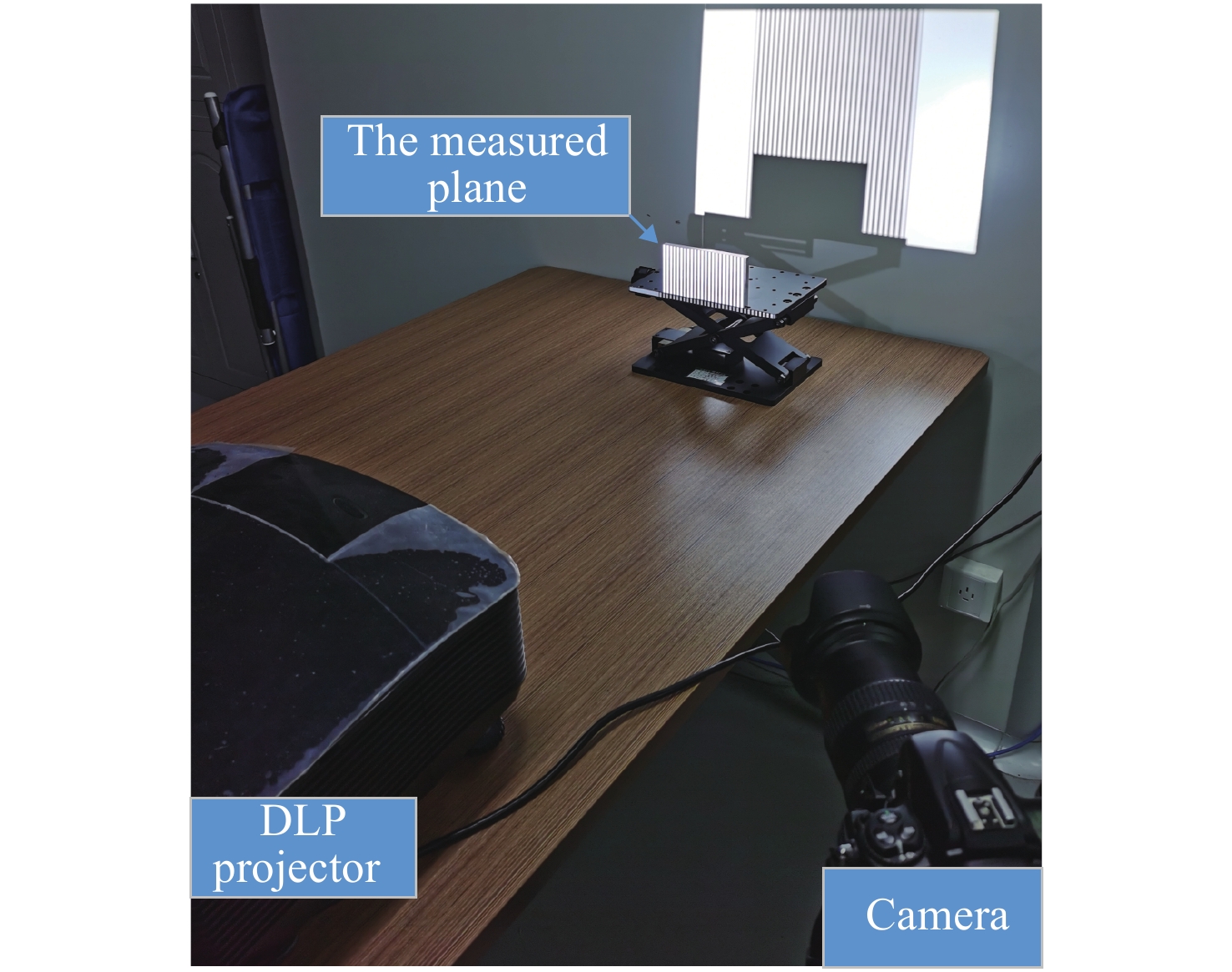

To verify the theory and method, a dynamic 3D measurement system based on phase-shifting and fringe projection was built. It consists of a camera, a projector, and the measured object. The camera is a Nikon D850 SLR with a 35 mm objective lens; in DX mode its CMOS sensor area is 24 mm × 16 mm and the image resolution is 2704 × 1800. The projector is a BenQ W7000 DLP projector with a resolution of 1920 × 1080. The projected pattern was encoded as a four-step phase-shifted fringe with a period of 32 projector pixels. The angle between the optical axes of the camera lens and the projection lens was about 15°. The distance between the camera's optical center and the measured object was about 1500 mm, and the object-space pixel resolution of the camera is about 0.35 mm.

The envisaged measurement target was an object moving at about 100 mm/s. The projector and the camera are connected by a synchronous trigger so that projection and imaging are synchronized. The exposure time of a single camera image can be set to 1/1000 s, and the interval between two adjacent projection-and-imaging cycles is set to 1 s. However, to reduce the cost of the experiment, we moved the measured object three times along the direction in which the fringe gray level varies, and projected onto and photographed the object in three static states, which equivalently replaces the measurement of a moving object. The main difference between this substitute and the real situation is that it cannot reproduce the image blur caused by object motion during a single exposure. Under the envisaged measurement conditions, however, the object moves only 0.1 mm during a single exposure, about 0.28 pixels in the image, so motion blur can be ignored.
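The motion-blur argument above is simple arithmetic; the sketch below reproduces it from the quoted speed, exposure time, and object-space pixel resolution (the variable names are illustrative).

```python
# Back-of-envelope check of the motion-blur figures quoted above
speed_mm_per_s = 100.0      # envisaged object speed
exposure_s = 1.0 / 1000.0   # single-image exposure time
pixel_mm = 0.35             # object-space pixel resolution

blur_mm = speed_mm_per_s * exposure_s   # distance moved during one exposure
blur_px = blur_mm / pixel_mm            # the same distance in camera pixels
print(f"{blur_mm:.3f} mm = {blur_px:.3f} px")
```

The result matches the quoted 0.1 mm and roughly 0.28 pixels to within rounding, well below one pixel.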

To verify the accuracy of the method, a precisely machined object is needed as the measurement benchmark. Because a flat plate is the easiest shape to machine accurately, a precision-ground aluminum plate measuring 150 mm × 100 mm × 10 mm with a surface flatness better than 0.01 mm was selected as the test object. To simulate the dynamic 3D measurement of a complex object, the plate was deliberately tilted with respect to the camera's optical axis, so that points on the plate lie at different depths in the camera coordinate system. In this configuration, measuring the plate differs little from measuring a complex object, and the plate's high machining accuracy makes it better suited for evaluating the accuracy of our method. The measurement system is shown in Fig. 6.

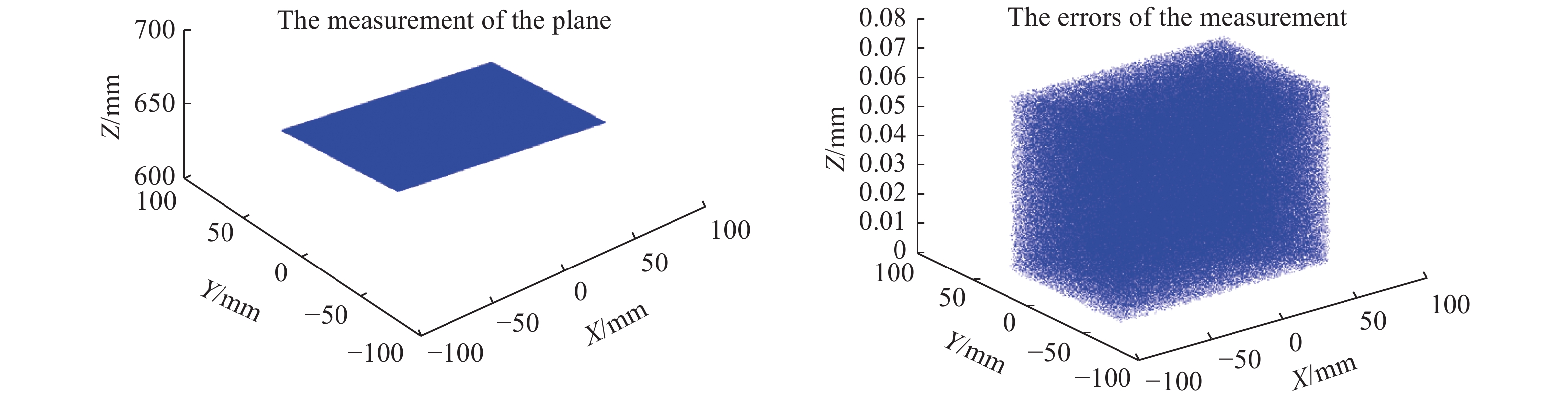

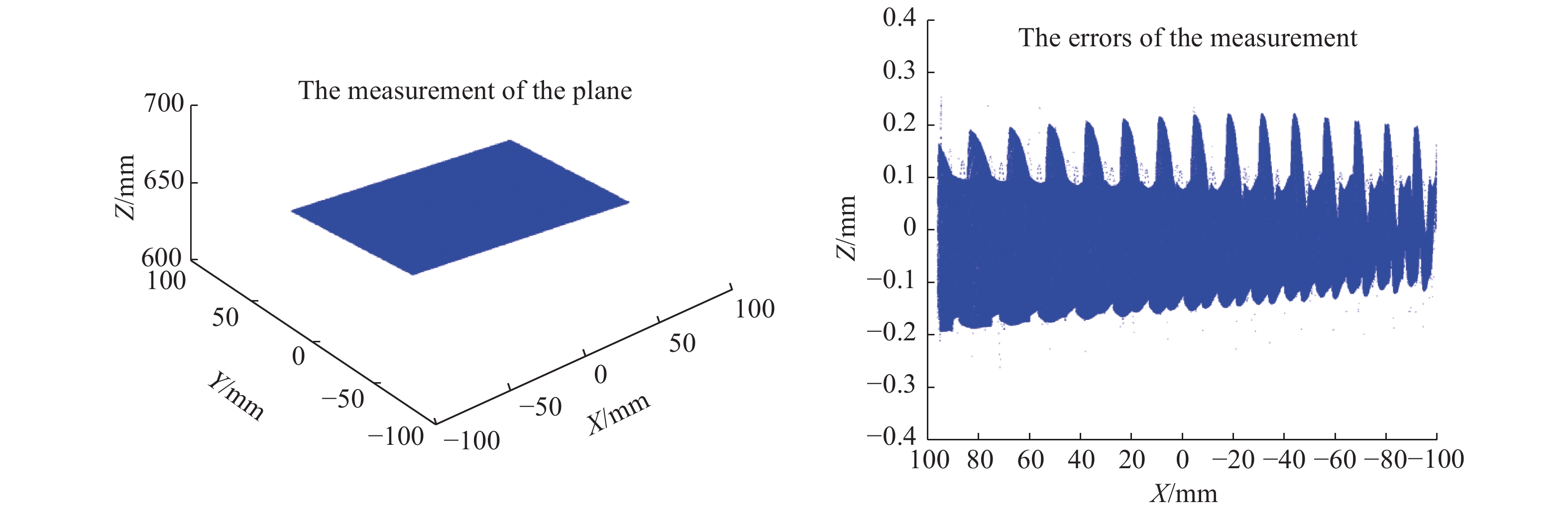

First, the 3D shape of the plate was measured in a static scene, with a mean square error of 0.034 mm. The result is shown in Fig. 7.

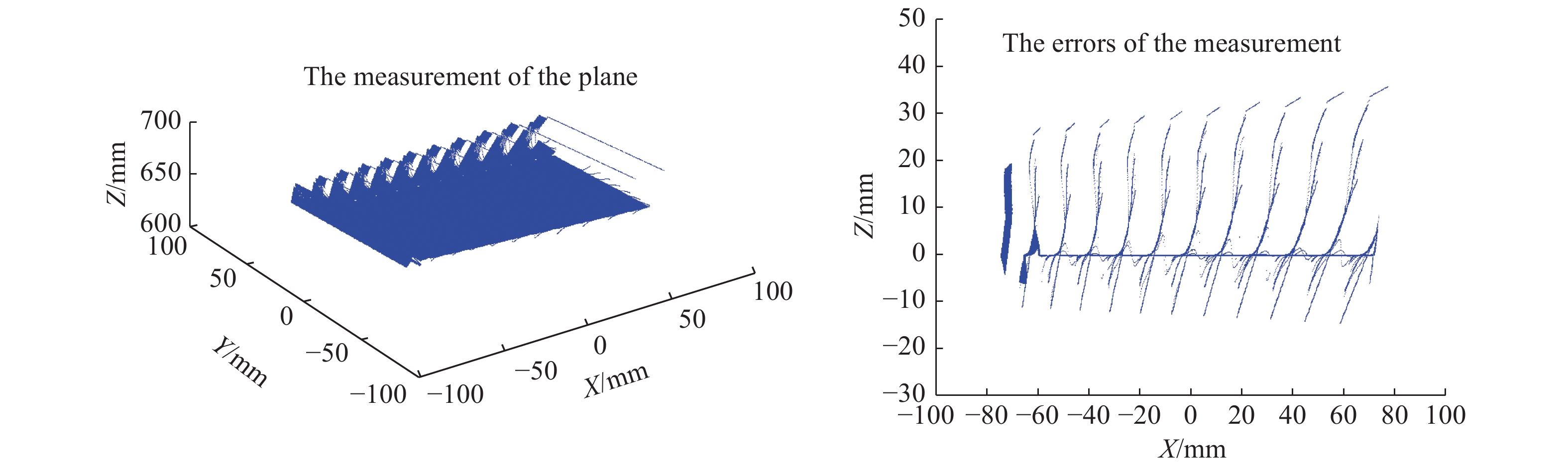

Then, the object was moved by 4 mm, 5 mm, and 6 mm in the direction in which the fringe gray level varies. The mean square error of the uncompensated dynamic 3D measurement was 5.241 mm, as shown in Fig. 8.

Finally, the dynamic 3D measurement error compensation technology proposed in this paper was used for the measurement. The mean square error of the compensated dynamic measurement was 0.132 mm, as shown in Fig. 9.

The experimental results show that the proposed error compensation technology greatly reduces the dynamic 3D measurement error, and the accuracy of the dynamic 3D measurement can be better than 0.15 mm.
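The text does not state how the error of the plate measurement is computed. One common way to evaluate a flat reference is to fit a plane to the measured point cloud and take the RMS of the orthogonal deviations; the sketch below does this by total least squares (SVD). This evaluation procedure, the function name `plane_fit_rms`, and the synthetic data are assumptions for illustration only.

```python
import numpy as np

def plane_fit_rms(points):
    """RMS orthogonal deviation of an (N, 3) point cloud from its best-fit plane.

    Total least squares: the plane normal is the right singular vector of the
    centred points with the smallest singular value.
    """
    centred = points - points.mean(axis=0)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    normal = vt[-1]                 # singular values are sorted descending
    distances = centred @ normal    # signed orthogonal distances to the plane
    return float(np.sqrt(np.mean(distances ** 2)))


# Usage: a synthetic plate tilted in depth, with a +/-0.1 mm checkerboard deviation
t = np.deg2rad(30.0)
u = np.array([np.cos(t), 0.0, np.sin(t)])        # in-plane axis tilted in depth
v = np.array([0.0, 1.0, 0.0])                    # second in-plane axis
n_hat = np.array([-np.sin(t), 0.0, np.cos(t)])   # unit normal of the plate
i, j = np.meshgrid(np.arange(100), np.arange(100), indexing="ij")
dev = 0.1 * (-1.0) ** (i + j)
pts = i[..., None] * u + j[..., None] * v + dev[..., None] * n_hat
pts = pts.reshape(-1, 3) + np.array([0.0, 0.0, 1500.0])   # about 1500 mm from camera
rms = plane_fit_rms(pts)
```

Measuring orthogonal rather than depth-direction residuals keeps the metric independent of how steeply the plate is tilted relative to the camera.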

5 Conclusion

The origin of dynamic 3D measurement errors is analyzed, and a dynamic 3D measurement error compensation method is proposed. The method combines the advanced iterative algorithm based on least squares with the improved Fourier-assisted phase-shifting method to calculate random step-size phase shifts and the phase with high precision. The experimental results show that the proposed error compensation technology reduces the dynamic 3D measurement error by more than one order of magnitude, and the accuracy of the dynamic 3D measurement can be better than 0.15 mm.

[1] MEZA J, CONTRERAS-ORTIZ S H, PEREZ L A R, et al. Three-dimensional multimodal medical imaging system based on freehand ultrasound and structured light[J]. Optical Engineering, 2021, 60(5): 054106.

[2] HE H H, YUAN J J, HE J Z, et al. Measurement of 3D shape of cable sealing layer based on structured light binocular vision[J]. Proceedings of SPIE, 2021, 11781: 117811L.

[8] YE W ZH, ZHONG X P, DENG Y L. 3D measurement using a binocular cameras-projector system with only one shot[C]. 2019 3rd International Conference on Electronic Information Technology and Computer Engineering (EITCE), IEEE, 2019.

[11] ZHANG S. High-speed 3D shape measurement with structured light methods: A review[J]. Optics and Lasers in Engineering, 2018, 106: 119-131.

[13] LIU Y J, GAO H, GU Q Y, et al. A fast 3D shape measurement method for moving object[C]. 2014 IEEE International Conference on Progress in Informatics and Computing, IEEE, 2014.

[14] WEISE T, LEIBE B, VAN GOOL L. Fast 3D scanning with automatic motion compensation[C]. 2007 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 2007.

[18] GREIVENKAMP J E. Generalized data reduction for heterodyne interferometry[J]. Optical Engineering, 1984, 23(4): 234350.

[22] WANG SH SH, LIANG J, LI X, et al. A calibration method on 3D measurement based on structured-light with single camera[J]. Proceedings of SPIE, 2020, 11434: 114341H.

[23] MARRUGO A, VARGAS R, ZHANG S, et al. Hybrid calibration method for improving 3D measurement accuracy of structured light systems[J]. Proceedings of SPIE, 2020, 11490: 1149008.


Zhi-rui CAO (曹智睿). Dynamic 3D measurement error compensation technology based on phase-shifting and fringe projection[J]. Chinese Optics, 2023, 16(1): 184.