Integrated photonic convolution acceleration core for wearable devices

1 Introduction

Wearable devices, characterized by their portability and strong human interaction capabilities, have long represented the future of technology and innovation1. Within the realm of wearable devices, numerous recognition tasks rely on machine vision, such as vehicle detection2, human pose recognition3-6, and facial recognition2, 7-9. These applications primarily rely on the forward propagation of deep learning algorithms to accomplish classification and recognition tasks. However, as the complexity of these applications increases10, meeting the demands for computational power, low power consumption, low heat generation, and high efficiency in wearable devices becomes increasingly difficult for traditional electronic computing, because Moore's Law is reaching its limits11. Alternative solutions are therefore needed.

In recent years, optical neural networks (ONNs) have emerged as a potential breakthrough solution to the bottlenecks of electronic computing12, 13. By mapping the mathematical models of neural networks onto analog optical devices, ONNs can achieve computational capabilities superior to those of electronic computing, because optical transmission networks offer the potential for ultra-low power consumption and minimal heat generation14. This makes them well suited to the energy consumption and heat dissipation requirements of wearable devices. Several ONN architectures have been reported, including diffractive spatial light networks (DNNs)15-17, wavelength division multiplexing (WDM) based on fiber dispersion18,19, and array modulation using Mach-Zehnder interferometers (MZIs)20-23. Diffractive optical networks offer a large number of neurons, but they are typically bulky, unsuitable for integration, and have a low refresh rate. Fiber-dispersion-based WDM schemes face challenges in miniaturizing long fibers and in precisely controlling delay dispersion in large-scale networks. MZI devices can be integrated on-chip, but their relatively large footprint is a disadvantage for large-scale expansion. None of these methods offers substantial advantages in meeting the requirements of future wearable devices. In contrast, the array-based approach using micro-ring resonator (MRR) devices has several advantages that align well with the requirements of wearable device research. MRR arrays are compact and easily integrated, and they allow high-precision, complex calculations through one-to-one assignment during parameter configuration24-26. This makes them suitable for small-size, large-scale applications, meeting the demands of current wearable device research.

In this work, we propose a viable solution to the power consumption and computational speed limitations of wearable devices. The solution is based on an integrated photonic convolution acceleration core (PCAC) with a reconfigurable MRR array that has self-calibration functionality27.

Combining the PCAC chip with field programmable gate array (FPGA) control, we used this system to perform parallel convolution in edge detection experiments. We then turned to a typical application in the wearable device domain: first-person perspective gesture recognition. The system enables high-speed computation with 7-bit precision when weights are loaded onto the PCAC chip, and it achieves the same accuracy as traditional electronic computation in blind testing for gesture recognition. It provides an effective approach for wearable devices to perform complex computational tasks accurately and efficiently while ensuring low power consumption and miniaturization.

2 Results

2.1 The principle

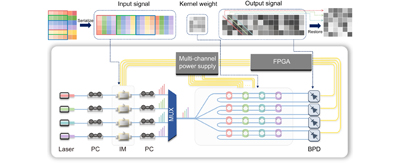

Fig. 1. Schematic of a computing system based on the integrated photonic convolution acceleration core (PCAC) chip.

2.2 The fabrication and characterization of PCAC chip

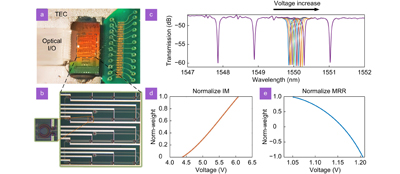

Fig. 2. (a) Detailed photos of the packaged layout chip show the MRR array in the center, with the photonics chip on the right combined with the leads of the customized printed circuit board (PCB) for computation and control. On the left, there is an optical input/output port using a fiber V-groove, and the entire assembly is mounted on a TEC for heat dissipation. (b) The micrograph of the MRR array and detailed photo of a single MRR. (c) The transmission spectra of the MRR array. Different voltages (800–1800 mV, 100 mV/step) are applied to the third MRR. Similar results can be obtained when the voltage is applied to other MRRs. (d) The transmission rate of a single IM on the chip under voltage tuning. These curves represent the normalized W-V mapping. (e) The transmission rate of a single MRR on the chip under voltage tuning. These curves represent the normalized W-V mapping.

Moving to the microscopic level,

Based on this method, we have established a look-up table for the weight-voltage mapping of the modulator and the MRR array. For modulator calibration, the laser operating wavelength is chosen away from the MRR resonance peak for one path of the MRR array. The reference voltage of the MRR array is fixed, and the voltage applied to the modulator is increased in steps of 0.1 V. The optical power at the pass-through end (THRU) is detected using a balanced photodetector (BPD), which yields a P-V curve relating optical power to modulator voltage. After differential and normalization operations, a weight-voltage (W-V) curve is obtained that relates input data weights to modulator voltage.
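The calibration procedure above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' calibration code: the function names `build_wv_table` and `voltage_for_weight` are hypothetical, the sin² response is a made-up stand-in for a measured P-V sweep, and the min-max scaling is one simple reading of the normalization step. It also assumes the normalized weight rises monotonically with voltage over the swept range.

```python
import math

def build_wv_table(voltages, powers):
    """Normalize a P-V sweep into (voltage, weight) pairs with weight in [0, 1]."""
    p_min, p_max = min(powers), max(powers)
    span = p_max - p_min
    return [(v, (p - p_min) / span) for v, p in zip(voltages, powers)]

def voltage_for_weight(table, weight):
    """Linearly interpolate the drive voltage that gives a target weight.

    Assumes the weights in the table increase monotonically with voltage.
    """
    for (v0, w0), (v1, w1) in zip(table, table[1:]):
        if w0 <= weight <= w1:
            if w1 == w0:
                return v0
            return v0 + (weight - w0) * (v1 - v0) / (w1 - w0)
    raise ValueError("weight outside the calibrated range")

# Synthetic sweep in 0.1 V steps (hypothetical response, not measured data).
voltages = [round(0.1 * i, 1) for i in range(11)]  # 0.0 V to 1.0 V
powers = [0.2 + 1.5 * math.sin(0.5 * math.pi * v) ** 2 for v in voltages]

table = build_wv_table(voltages, powers)
v_half = voltage_for_weight(table, 0.5)  # voltage that encodes weight 0.5
```

A table of this kind is what allows a desired weight to be translated into a drive voltage at run time; a denser sweep or a fitted curve would reduce the interpolation error.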

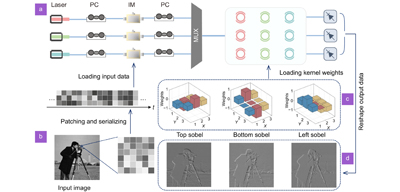

2.3 Operation for convolution and edge detection

In order to verify the convolutional computing capability of the PCAC chip within our system, we conducted a series of experiments using the widely recognized "cameraman" image as a standard test case.

Fig. 3. (a) Experimental setup of the PCAC chip for performing convolutional operations. (b) Original image used for demonstrating the convolution effect. (c) Convolution kernels used: bottom Sobel, top Sobel, left Sobel. (d) Corresponding convolution image results.
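For reference, the edge-detection convolution named in the Fig. 3 caption can be emulated purely in software. The sketch below is an electronic reference computation, not a model of the chip: the kernel values are the conventional textbook Sobel definitions (assumed here, since the numeric kernels are not listed in the text), and `mac` and `convolve2d` are hypothetical helper names. Each output pixel is one multiply-accumulate over a flattened 3×3 window, which is the operation the text assigns to the PCAC chip.

```python
# Conventional 3x3 Sobel-type kernels. The names follow the Fig. 3 caption;
# the numeric values are the usual textbook ones and are an assumption here.
KERNELS = {
    "bottom_sobel": [[-1, -2, -1], [0, 0, 0], [1, 2, 1]],
    "top_sobel": [[1, 2, 1], [0, 0, 0], [-1, -2, -1]],
    "left_sobel": [[1, 0, -1], [2, 0, -2], [1, 0, -1]],
}

def mac(window, weights):
    """One multiply-accumulate over a flattened window."""
    return sum(x * w for x, w in zip(window, weights))

def convolve2d(image, kernel):
    """Valid-mode sliding-window correlation (no kernel flip), stride 1."""
    k = len(kernel)
    flat_kernel = [w for row in kernel for w in row]
    out = []
    for i in range(len(image) - k + 1):
        out_row = []
        for j in range(len(image[0]) - k + 1):
            window = [image[i + di][j + dj] for di in range(k) for dj in range(k)]
            out_row.append(mac(window, flat_kernel))
        out.append(out_row)
    return out

# Toy 5x5 image: dark top rows and bright bottom rows, i.e. one horizontal edge.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1],
]
results = {name: convolve2d(image, kernel) for name, kernel in KERNELS.items()}
```

On this toy image the bottom and top kernels respond to the horizontal edge with opposite signs, while the left kernel returns zeros because there is no horizontal intensity change.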

2.4 Application of first-person depth-based gesture recognition using PCAC chip

In this part, we further explore the performance of the PCAC chip in practical applications for wearable devices. First-person perspective gesture recognition is one of the most widespread applications for wearable devices such as virtual reality (VR) and augmented reality (AR) glasses and remote healthcare monitoring devices29. Taking this into account, we have developed a digit gesture recognition application that incorporates depth information and is specifically designed for wearable devices. This application recognizes hand gestures representing the digits 0 to 9. We used the EgoGesture dataset30, released by the Institute of Automation, Chinese Academy of Sciences in 2017. Each gesture was represented by 1500 training images and 300 testing images, giving a total of 18,000 images in our dataset. We trained the artificial intelligence model on a computer.

Fig. 4. (a) Schematic diagram of the convolutional neural network (CNN) architecture suitable for first-person digit gesture recognition with depth information. (b) Probability of recognition for the 10 gestures after performing the convolutional layer computation using the PCAC chip as a replacement for the computer.

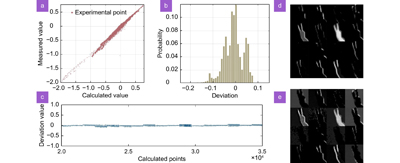

To further investigate the performance of the PCAC chip in computational tasks, we conducted a more detailed analysis of the experimental results.

Fig. 5. (a) Scatter plot comparing measured results with calculated results for Gesture 2. (b) Probability distribution of the error offset in the experimental results, resembling a Gaussian curve. (c) Offset of each point during the computation process. (d) Results of the first layer convolution computation obtained through electronic computation. (e) Results of the first layer convolution computation obtained through optical-electronic computation using the PCAC chip.

3 Discussion

3.1 Energy efficiency estimation

Benefiting from the compact size of MRR resonators, the PCAC chip achieves high integration density within a footprint of just 0.2 mm². At the same time, it performs basic multiplication and addition operations with the same recognition results as electronic computation. For a 4×4 scale PCAC chip with four parallel channels, the footprint increases to approximately 5 mm², allowing for parallel convolution operations and efficient processing of more complex computational recognition tasks. Despite these advantages, the PCAC chip design still has limitations and potential areas for improvement.

First, the central goal of photonic computation is to process data at high speed and with low power consumption. In our proof-of-concept setup, power is consumed primarily by the laser, silicon photonic chip, modulator, TEC, and digital backend. Based on the components used in our measurement setup, the estimated power consumption of the computation system is approximately 7.716 W, out of a total power consumption of around 40.973 W. Consequently, about 80% of the power is attributed to the benchtop instruments.
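The split quoted above can be checked with a short calculation. The two wattages are taken from the text; treating the difference between them as the benchtop-instrument share is an interpretation of that paragraph, and the variable names are illustrative only.

```python
computation_w = 7.716  # estimated draw of the computation system (from the text)
total_w = 40.973       # estimated draw of the whole setup (from the text)

# Assumption: everything outside the computation system is benchtop equipment.
benchtop_w = total_w - computation_w
benchtop_share = benchtop_w / total_w

print(f"benchtop: {benchtop_w:.3f} W ({benchtop_share:.1%})")
```

Under that reading the benchtop instruments draw about 33.3 W, or roughly 81% of the total, consistent with the approximately 80% stated in the text.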

Table 1. Estimated power consumption of the proof-of-concept system.

Using phase-change materials as thermal shifters can further optimize the energy efficiency of the system. With the development of tunable optical frequency combs31-33, replacing lasers with microcombs as light sources can significantly reduce power consumption. This will unlock the full potential of the optoelectronic computing system, offering higher scalability, higher integration, and lower power consumption. It is important to note that, with the development of hybrid and monolithic integration techniques, light sources, silicon photonic circuits, and related electronic components (including modulators, drivers, trans-impedance amplifiers (TIAs), digital-to-analog converters (DACs), and analog-to-digital converters (ADCs)) can be integrated onto the same motherboard or even onto a single chip. This integration trend has the potential to significantly reduce power consumption. Therefore, the power and integration performance demonstrated in this work can be further enhanced, although there is still a long road ahead.

3.2 Throughput estimation

Furthermore, throughput is a key metric for evaluating computational hardware performance; in the high-performance computing (HPC) domain it is defined as the number of operations per second (OPS) performed by a processor. The throughput of photonic computing hardware can be calculated using

$$T = 2 m N^{2} r,$$

where T is the throughput in OPS (operations per second), excluding the time spent on off-chip signal loading during photonic computation; m is the number of layers implemented by the photonic computing hardware; N² is the size of the on-chip weight library; and r is the detection rate of the photodetector (PD). Since the PCAC chip naturally performs multiply-accumulate (MAC) operations, and each MAC operation consists of one multiplication and one addition, one MAC operation corresponds to two operations. With a typical photodetection rate of 100 GHz, our PCAC proof-of-concept chip (N² = 4×4) can achieve 3.2 TOPS, which still lags behind leading electronic processors such as Google's tensor processing unit (TPU)34 and other chips. However, owing to the chip's strong scalability, a future large-scale chip of 16×16 dimensions, with auxiliary optical frequency combs as multiple light sources, could reach a theoretical computational power of 51.2 TOPS. This would enable outstanding performance in complex computational tasks with ultra-high integration and ultra-low power consumption, helping to alleviate the high cost of electronic computing while ensuring high computational power. It serves as an effective solution for breakthroughs in the field of wearable devices.

Nevertheless, photonic computing still faces various challenges, including the speed and bandwidth limitations of components such as ADCs, DACs, modulators, and PDs. While these challenges are not the primary focus of our current work, they are certainly within the broader scope of the field. We believe that with concerted efforts from the entire photonic computing community, these challenges can be addressed and overcome. As the field progresses, it is reasonable to expect advances that will overcome the speed and bandwidth limitations of photonic components.
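The two throughput figures quoted above can be reproduced with a short calculation. The relation T = 2·m·N²·r used here is inferred from the symbol definitions and from the counting of two operations per MAC, and m = 1 (a single photonic layer) is an assumption that matches both quoted numbers. The function name `throughput_ops` is illustrative.

```python
def throughput_ops(m, n, r_hz):
    """T = 2 * m * N^2 * r: two operations per MAC, N^2 weights, m layers."""
    return 2 * m * n * n * r_hz

R_HZ = 100_000_000_000  # 100 GHz photodetection rate quoted in the text

# m = 1 is an assumption; the text does not state it explicitly.
t_4x4 = throughput_ops(m=1, n=4, r_hz=R_HZ)
t_16x16 = throughput_ops(m=1, n=16, r_hz=R_HZ)

print(t_4x4 / 1e12, "TOPS for the 4x4 chip")
print(t_16x16 / 1e12, "TOPS for a 16x16 chip")
```

With these inputs the sketch gives 3.2 TOPS for the 4×4 chip and 51.2 TOPS for a 16×16 chip. The factor of 16 between them comes entirely from the N² term.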

3.3 Scalability

To further improve the computational performance of PCAC chips, scalability is an extremely important requirement. The main source of loss in the PCAC chip is the coupling gratings. The scalability of the PCAC chip is therefore not primarily limited by its loss performance; instead, it is predominantly determined by the free spectral range (FSR) of each MRR. Because each MRR requires individual tuning, and resonances must not overlap during thermal tuning if high-precision computation is to be maintained, the scalability within a given operational wavelength range is somewhat constrained. This constraint arises because we conduct experiments within a specific wavelength range. Our future work aims to address this limitation by designing MRRs with a larger FSR. This design approach will enable larger-scale PCAC chips operating over a greater range of wavelengths, thereby delivering enhanced computational performance. Ultimately, this will open the way to more complex applications in the field.
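The FSR constraint described above can be illustrated with a rough channel-count estimate. This is a simplified sketch under stated assumptions: all resonances must fit within one FSR, and neighbouring resonances must stay at least a fixed spacing apart so that they do not overlap during tuning. The function name `max_channels` and every numeric value below are hypothetical and are not taken from the measured chip.

```python
def max_channels(fsr_pm, min_spacing_pm):
    """Upper bound on the number of MRR resonances that fit inside one FSR."""
    return fsr_pm // min_spacing_pm

# Hypothetical numbers for illustration only, in picometres.
spacing_pm = 800        # assumed minimum spacing between adjacent resonances
small_fsr_pm = 10_000   # assumed 10 nm FSR
large_fsr_pm = 20_000   # assumed 20 nm FSR

channels_small = max_channels(small_fsr_pm, spacing_pm)
channels_large = max_channels(large_fsr_pm, spacing_pm)
```

With these made-up values the bound rises from 12 to 25 channels when the FSR is doubled, which is the qualitative reason a larger FSR permits a larger array.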

3.4 Wearable application potential

Finally, it is important to note that this work showcases only one application scenario for wearable devices. We have demonstrated the capabilities of optical-electrical computation in a practical context by implementing first-person perspective gesture recognition using the PCAC chip and accompanying algorithms (a demo video in the attachment showcases the real-time interaction of this application). Unlike previous tests limited to MNIST handwritten digit recognition (with small input images and a few convolutional kernels), our application involves larger input images (32×32 pixels) and a more intricate network structure (with a first-layer convolution containing 16 kernels). These factors pose a greater challenge to the sustained high-precision computational capability of the photonic chip. The successful completion of the recognition task demonstrates the capacity of the photonic hardware to handle such complex tasks. Compared with previous tasks involving simple MNIST digits or edge detection, this work has higher practical value because it addresses more intricate recognition tasks. Beyond this single scenario, the photonic convolution acceleration core computational system presented here can be applied to various scenarios involving convolution operations, especially given the inherent advantages of photonics, such as low power consumption and minimal heat generation, which align well with the requirements of wearable devices. Building on the approaches mentioned above, further optimizations can be pursued to enhance integration, energy efficiency, and scalability. These improvements aim to achieve higher computational power while maintaining efficiency and compactness. We believe that this computational system has the potential to play a significant role in a broader range of wearable device applications.

4 Conclusions

In this work, we propose a convolutional acceleration processor based on an MRR array and have successfully fabricated a prototype PCAC chip. When combined with the computational control module programmed on an FPGA, the PCAC chip is capable of performing convolution operations with a maximum precision of 7 bits. We demonstrate the application of the PCAC chip in complex gesture recognition tasks, specifically in first-person depth information gesture recognition. With parallel and precise convolution operations, we obtain the same recognition results as traditional electronic computation in all blind tests, achieving a high level of recognition accuracy. The outstanding performance in accomplishing complex recognition tasks and high-precision forward propagation tasks opens up new possibilities for intuitive human-machine interaction. Furthermore, the advantages of optical computation, including reduced power consumption and faster data processing, make this application particularly important in the development of wearable devices. Accurate and efficient gesture recognition enables seamless control and interaction with the device, enhancing user experience and convenience. Additionally, the compact and easily integrable nature of the device provides opportunities for higher computational power and lower power consumption in future large-scale expansions. These advantages offer an effective solution to address the challenges of heat dissipation and integration in wearable devices when dealing with complex, high-precision, multi-scenario computational recognition tasks. It paves the way for efficient computation by effectively surpassing the limitations of electronic processors.

5 Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (U21A20511) and the Innovation Project of Optics Valley Laboratory (OVL2021BG001).

BH Zhao and JW Cheng proposed the original idea; JJ Dong supervised the project; BH Zhao fabricated the chip and performed the measurements; all authors contributed to the writing of the article.

The authors declare no competing financial interests.

[33] Kippenberg TJ, Gaeta AL, Lipson M, Gorodetsky ML. Dissipative Kerr solitons in optical microresonators. Science 361, eaan8083 (2018).

Baiheng Zhao, Junwei Cheng, Bo Wu, Dingshan Gao, Hailong Zhou, Jianji Dong. Integrated photonic convolution acceleration core for wearable devices[J]. Opto-Electronic Science, 2023, 2(12): 230017.