Programming nonlinear propagation for efficient optical learning machines

1 Introduction

Machine-learning architectures have come to be dominated by artificial neural networks (ANNs). There are several reasons why this architecture is used so broadly. Initially, their similarity with biological neural networks1 provided strong motivation to explore ANNs. At the same time, the fact that ANNs are universal machines2 that are able to approximate any function breeds confidence that ANNs can carry out useful and difficult tasks. Perhaps most significantly, the fact that error backpropagation3 has proven very effective in training such networks catapulted their application to a wide variety of problems. Ever-larger networks4 have been adopted for tackling challenging tasks.5 Empirically, it has been found that larger networks tend to perform better given a sufficiently large database of training examples. This has led to a “bigger is better” mentality.6 However, the disadvantage of this mentality is the energy required to train and use very large networks. For instance, the training of the language model GPT-3 alone, which has 175 billion parameters, consumed 1.3 GWh of electricity, roughly the energy required to fully charge 13,000 Tesla Model S cars.7 Optics can help overcome this downside, since light propagation through a nonabsorbing, nonscattering medium is a lossless linear operation.

Several approaches have been reported for the optical realization of ANNs. Wavefront shaping by diffractive surfaces or modulators, followed by propagation, can implement ANNs and perform different tasks, such as classification and imaging.8

Moreover, as optical ANNs are analog systems, their training is cumbersome. Gradient descent optimization algorithms can be applied ex situ8 or in situ;17 however, the system must then be completely calibrated or perfectly modeled. As an alternative, stochastic or deterministic gradient-free optimization algorithms can be used for the training. Stochastic evolutionary algorithms, such as genetic algorithms, have been implemented,18,19 as they do not require any model of the system and can directly optimize the neural networks (NNs) in experiments through efficient trial-and-error mechanisms; however, they require a very high number of trials.20,21 On the other hand, deterministic, surrogate-based optimization algorithms are guided by a simple metamodel that directly relates the input parameters to the loss function, and they have been shown to provide excellent results in digital NNs with a smaller number of iterations.22

In contrast to the determination and rigorous mapping of individual weights of ANN models to photonic devices, ANNs based on high-dimensional, fixed, and nonlinear connections, such as reservoir computers25 or extreme learning machines,26 can directly be implemented with diverse optical dynamics, such as multimodal speckle formation,27,28 random scattering,29 and transient responses.30 The necessary nonlinearity between neurons could be introduced by electronic feedback,31 optoelectronic conversion,29 saturable absorption,32 and second-harmonic generation.33 Moreover, the Kerr effect inside both single-mode34 and multimode35 fibers (MMFs) was shown recently to be an effective dynamic for realizing computing systems. Another set of studies also showed that the nonlinear interactions inside MMFs are tunable by wavefront shaping.36

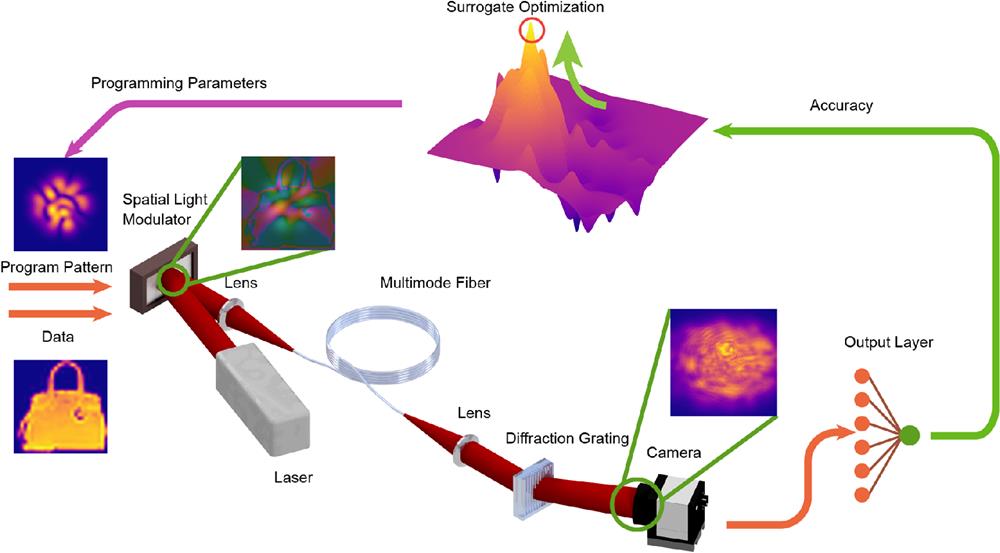

In this paper, we present an optical ANN architecture that uses a relatively small number of digitally implemented parameters to control the very complex spatiotemporal transformation realized by optical wave propagation. Our experimental studies show that with a spatial light modulator (SLM), by simultaneously shaping light with data and a fixed programming pattern, we can induce the nonlinear transformation inside the MMF to perform desired computations. We find that the optimization of a small number of programming parameters (PPs), around 50 in our experiments, results in a remarkable performance of the optical computer. For instance, we show that the system, with about 2000 total parameters (TPs), i.e., PPs and readout weights (RWs) combined, performs as well as a digital ANN with over 400,000 parameters for the face classification task on the CelebA dataset40 (Table 2). Moreover, we demonstrate that the same method can be used to program the propagation inside MMFs to perform all-optical classification without the digital readout stage. In this case, the classification can be directly read out with a simple beam location sensor, further decreasing the number of TPs.

2 Methods and Results

2.1 Nonlinear Fiber Propagation and Its Programming for Higher Classification Performance

Our method consists of a nonlinear optical transformation and a programming algorithm. In this study, the optical experiment is selected to be the propagation of spatially modulated laser pulses through an MMF. The nonlinear propagation of an ultrashort pulse inside an MMF is a highly complex process that entails spatial and temporal interactions of electromagnetic waves coupled with hundreds of different propagation modes. In addition to light transfer between different channels due to linear mechanisms, such as bending and defects in the fiber, at high intensity levels light–matter interactions start to occur, and this results in energy exchange between different modes depending on products of these modes. These interactions can be concisely described with the multimode generalized nonlinear Schrödinger equation,41
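As a sketch under stated assumptions, a commonly used Kerr-only form of the multimode generalized nonlinear Schrödinger equation, truncated at second-order dispersion, can be written as below. The symbol names ($A_p$, $\beta_k^p$, $C_{p,n}$, $n_2$, $\omega_0$, $A_{\mathrm{eff}}^{p}$, $\eta_{p,l,m,n}$) and the factor $c$ (the speed of light in vacuum, included for dimensional consistency) are assumptions chosen to match the quantities described in the following paragraph; $\delta\beta_k^p$ denotes the $k$'th order propagation constant of mode $p$ taken relative to that of the fundamental mode.

```latex
\frac{\partial A_p}{\partial z}
  = i\,\delta\beta_0^{p} A_p
  - \delta\beta_1^{p}\,\frac{\partial A_p}{\partial t}
  - i\,\frac{\beta_2^{p}}{2}\,\frac{\partial^{2} A_p}{\partial t^{2}}
  + i \sum_{n} C_{p,n} A_n
  + i\,\frac{n_2\,\omega_0}{c\,A_{\mathrm{eff}}^{p}}
    \sum_{l,m,n} \eta_{p,l,m,n}\, A_l A_m A_n^{*}
```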

Here $A_p$ is the complex coefficient of the $p$'th normalized mode, where $\beta_k^p$ is the $k$'th order propagation constant for this mode. $C_{p,n}$ is the linear coupling coefficient between modes $p$ and $n$, which becomes nonzero when there are nonidealities in the waveguiding structures, such as ellipticity, bending, or impurities. $n_2$ is the nonlinear refractive index of the material, $\omega_0$ is the center angular frequency, and $A_{\mathrm{eff}}^{p}$ is the effective mode area of mode $p$ in the fiber. $\eta_{p,l,m,n}$ is the nonlinear coupling coefficient between modes; it mainly depends on the similarity between spatial shapes of modes ($F_p$) and can be calculated as the normalized overlap integral of the four mode profiles involved. Due to the short propagation length in the study, the higher-order dispersion effects are not shown. Similarly, Raman scattering is not observed dominantly in the experiments (see Fig. 6); hence in the nonlinear mode coupling part, only the contribution of the Kerr effect is shown.

The triple multiplications, with strengths of interaction that vary between different sets of modes (set by the nonlinear coupling coefficients), demonstrate the nonlinear and immensely multimodal aspect of the interactions, such that modeling the transform of a single pulse on the setup provided in Fig. 1 would take 50 min with a graphics processing unit (GPU).42 The physical optical system carries out this complex spatiotemporal transformation “effortlessly.” The transformation is programmed with a relatively small number of PPs. PPs are selected to customize the MMF processor for the specific task by wavefront shaping, controlling the optical power, and the placement of the data and diffraction angle on the SLM. This way the PPs can implicitly modify the distribution of the mode coefficients, which determines the nonlinear computation the MMF performs on the data. For instance, changing the diffraction angle on the SLM away from the optical axis moves the beam’s focus from the center of the MMF, which is placed in the focal plane of the coupling lens. This primarily excites higher-order modes and effectively changes the part of the nonlinear coupling tensor that acts on the data. The combination of wavefront shaping and data encoding, as shown in Fig. 1, is formalized through the complex value encoded on the SLM, which combines the data with the wavefront-controlling shape.

Fig. 1. Experiment flow for programming optical propagation for a computational task. The SLM modulates the laser pulses with the data sample overlaid with a fixed programming pattern calculated from the programming parameters (PPs). The beam is coupled to an MMF; the pattern after propagation is recorded with a camera. A trainable output classification layer calculates the task accuracy, which is fed back to the surrogate optimization algorithm. The algorithm improves the task performance by exploring different PPs and refining potential solutions.

The amplitude modulation with the phase-only SLM is realized by modifying the strength of a blazed grating (see Method 3 in the Supplementary Material), whereas the data are encoded as a phase pattern. The wavefront-controlling shape is a complex combination of different linearly polarized fiber modes, and the coefficient of each mode is controlled by two parameters, one for the real part and one for the imaginary part. Even though other bases for controlling the wavefront can also be effective, we used the propagation modes of the optical fiber, since they are interpretable, orthonormal, and guaranteed to be within the fiber’s numerical aperture. The portion of the wavefront shape that contains the PPs is thus the weighted sum of these modes, with the real and imaginary parts of the weights serving as PPs.
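The encoding described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the Hermite-Gaussian basis stands in for the linearly polarized fiber modes, the phase range `max_phase`, the amplitude normalization, and the behavior at all-zero PPs are assumptions, and the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite import hermval

def hg_mode(n, m, x, y, w=1.0):
    """Hermite-Gaussian profile used as a stand-in for one fiber mode (assumption)."""
    hx = hermval(np.sqrt(2) * x / w, [0] * n + [1])
    hy = hermval(np.sqrt(2) * y / w, [0] * m + [1])
    f = hx * hy * np.exp(-(x**2 + y**2) / w**2)
    return f / np.sqrt(np.sum(f**2))  # unit energy on the sampling grid

def control_pattern(pps, modes):
    """Complex wavefront shape: two PPs (real, imaginary) per mode coefficient."""
    pps = np.asarray(pps, dtype=float)
    coeffs = pps[0::2] + 1j * pps[1::2]
    return np.tensordot(coeffs, modes, axes=1)

def slm_field(data, pps, modes, max_phase=np.pi):
    """Field after the SLM: data as phase, control pattern as added phase and amplitude."""
    c = control_pattern(pps, modes)
    amp = np.abs(c)
    # With all PPs at zero the amplitude is left uniform (assumed convention).
    amp = amp / amp.max() if amp.max() > 0 else np.ones_like(amp)
    return amp * np.exp(1j * (max_phase * data + np.angle(c)))

# Example grid and a small basis (mode orders n + m <= 2 give six modes).
axis = np.linspace(-3, 3, 32)
x, y = np.meshgrid(axis, axis)
modes = np.stack([hg_mode(n, m, x, y) for n in range(3) for m in range(3 - n)])
```

With six modes, `slm_field` expects 12 PPs; the 46 wavefront shaping parameters mentioned in Fig. 2(b) would correspond to 23 modes under the same two-parameters-per-mode convention.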

During each step of the programming procedure, the optical system processes the dataset for a set of values for PPs, and the task performance is measured. Depending on the task, the performance metric could be the training accuracy of the final RWs (Fig. 2), or the ratio of correctly placed outputs for all-optical tasks (Fig. 3). Throughout the process of programming the optical system, a surrogate optimization algorithm22 selects values of PPs to explore the dependency between them and the loss function, which is the negative of the accuracy in classification tasks, and aims to find the globally optimal set of PP values for the best computational performance. The same procedure is also widely used in the optimization of NN architectures;43 its details are provided in the Appendix.

![Fig. 2](/richHtml/ap/2024/6/1/016002/img_002.png)

Fig. 2. Programming the MMF propagation for higher classification performance on Fashion-MNIST dataset. (a) Training accuracy during the progress of the programming procedure. The horizontal line labeled “without programming” shows the accuracy level when PPs are set to zero and “with programming” indicates the level when the PPs found by the programming algorithm are used. The colors of circles indicate their sequence in the training. (b) Relation between wavefront shaping parameters and training accuracy. Forty-six different wavefront shaping parameters are shown in two dimensions by means of random projection into two dimensions for visibility. (c) Peak power of pulses during the programming procedure. (d) Change of the diffraction angle on the SLM in horizontal and vertical directions (Δϕ and Δθ). (e) Shift of image on the SLM in horizontal and vertical directions (Δx and Δy). (f) Confusion matrix and average accuracy on the test set, without and with the programming of the transform (Video 1, MP4, 2.31 MB [URL: https://doi.org/10.1117/1.AP.6.1.016002.s1]).

![Fig. 3](/richHtml/ap/2024/6/1/016002/img_003.png)

Fig. 3. Programming procedure for all-optical classification of chest radiographs. (a) The schematic of the experiment, the data, and the control pattern are sent together to the SLM, and the fiber output pattern is imaged onto a camera. (b), (c) Distribution of the beam center locations and corresponding confusion matrices for the test set, without and with the programming of the transform. (d) Distribution of training accuracies with respect to the selection of wavefront shaping parameters. (e) Selected power levels for each iteration of the programming procedure. (f) Progression of training accuracy during training. The color map relates the color of circles to their sequence in the training, and it applies to (d)–(f) (Video 2, MP4, 4.17 MB [URL: https://doi.org/10.1117/1.AP.6.1.016002.s2]).

In the experimental realization of the method, a commercially available mode-locked laser at 1030 nm (Amplitude Laser, Satsuma) with 125 kHz repetition rate is used, and the pulse length is set to be the longest possible, 10 ps, with an internal dispersive grating stretcher for obtaining a longer dispersion length. This maximizes the length in which nonlinear interactions effectively occur in the 5 m-long graded-index multimode fiber (OFS, bend-insensitive OM2, core diameter, 0.20 NA, 240 modes at 1030 nm), without being hindered by dispersion-induced pulse broadening. Before coupling to the MMF, the laser beam is sent onto a reflective phase-only SLM (Meadowlark HSP1920), which encodes the data to be transformed by the optical system to 0 to phase retardation at each pixel location and combines them with a complex pattern containing the PPs, as described in Eq. (1). The intensity of the coupled pulsed laser beam is also treated as one of the PPs; it is controlled via a half-wave plate mounted on a motorized rotation stage followed by a polarizing beam splitter and is optimized through the surrogate model. Once the PPs are determined, the data portion of the modulation changes with every sample, while the programming part stays the same. Upon exiting the fiber, the beam is collimated with a lens and sent to a blazed grating. The dispersion due to the grating leads to a camera recording, in which both spatial and temporal characteristics of the output beam are present.

The classification accuracies are calculated by training a simple linear regression algorithm with L2 regularization on the pixel intensity values of the images recorded by the camera in Fig. 1. The linear regression maps the recorded image to classification results by pointwise multiplication with the RWs and summation. Finally, the pairs of PPs and the corresponding task performance of the system are supplied to the surrogate algorithm that optimizes the optical transform. After acquiring the performance metric for different sets of PPs, the surrogate optimization algorithm creates a mapping between any given set of PPs and the performance of the system. The surrogate algorithm continuously refines this model and increases the performance on the task at the same time. Video 1 and Fig. 2 illustrate such an experiment, where the data transform with the MMF was programmed for a higher classification accuracy on a small subset (2%) of the Fashion-MNIST dataset;44 the subset consists of 1200 training images and 300 test images of 10 different classes of fashion items.
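The readout step can be sketched as follows. This is a minimal NumPy stand-in, not the authors' code: the Appendix states that scikit-learn's ridge classification is used on 45 × 45 average-pooled images with alpha set to 3000, whereas the closed-form solver, the ±1 one-vs-rest targets, and the function names below are assumptions made for illustration.

```python
import numpy as np

def average_pool(images, factor=4):
    """Downsample (N, H, W) images by block averaging, e.g., 180x180 -> 45x45."""
    n, h, w = images.shape
    blocks = images.reshape(n, h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(2, 4))

def fit_ridge_readout(features, labels, n_classes, alpha=3000.0):
    """Closed-form ridge regression onto +/-1 one-vs-rest targets."""
    targets = -np.ones((len(labels), n_classes))
    targets[np.arange(len(labels)), labels] = 1.0
    x_mean, t_mean = features.mean(axis=0), targets.mean(axis=0)
    xc = features - x_mean
    # Dual form: cheaper when samples (e.g., 1200) are fewer than features (2025).
    gram = xc @ xc.T + alpha * np.eye(len(xc))
    weights = xc.T @ np.linalg.solve(gram, targets - t_mean)
    bias = t_mean - x_mean @ weights
    return weights, bias

def predict(features, weights, bias):
    """Weighted sum of pixel intensities per class, followed by an argmax."""
    return np.argmax(features @ weights + bias, axis=1)
```

In use, the camera frames would be pooled, flattened with `reshape(len(images), -1)`, and passed to `fit_ridge_readout`; the resulting training accuracy is the score returned to the surrogate optimizer.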

As shown in Fig. 2, for the first 105 iterations, the surrogate optimization algorithm broadly samples the parameter space to create the initial mapping between PPs and the performance metric. After this phase, an area with the potential of yielding the best result is selected and sampled in finer steps. Gradually, the changes become smaller, and the algorithm converges to a solution. Figure 2(b) provides a closer look at the progression of the process, where each data point represents an iteration, plotted as the two-dimensional random projection of the 46 wavefront shaping parameters versus the training accuracy. The initial homogeneous sampling of the parameter space and the final fine-tuning can be observed. Similarly, Fig. 2(c) shows that after exploring various levels of optical intensity, and hence nonlinearity, the algorithm converged to a higher light intensity for obtaining a more efficient nonlinear optical transform. Even after converging, the search algorithm probed different intensity levels to ensure that it was not stuck in a local minimum but came back to the same level, confirming that it is the optimum. This is also made possible by converging to a preferred oblique excitation of the fiber, as shown in Fig. 2(d), allowing for stronger coupling to higher-order fiber modes as well, hence benefiting from the multimodality of the fiber. Overall, programming the optical propagation with optimized PPs improved the classification accuracy on both the training and the test sets by about 5% compared to an unprogrammed propagation35 and reached 77% accuracy on the test set. In comparison, the seven-layer digital convolutional neural network (CNN) LeNet-5 (Ref. 45) yields 77.9% accuracy when trained with the same dataset on a GPU (for details see Method 1 in the Supplementary Material). Later, we present another approach for programming the propagation, in which PPs are combined with data through convolution, and 79.0% test accuracy is reached on the same task.

2.2 All-Optical Computing with Propagation in Optical Fiber

In Fig. 2, the PPs were optimized to modify the optical transform inside the MMF to improve the performance of the combination of the optical system and the digital readout layer. To further demonstrate the programming capacity of our approach, the inference on input samples was done all-optically without any RWs by only using the center location of the output speckles, as shown in Fig. 3. For a binary classification problem, the input is classified as either “0” or “1,” depending on which side of the classification line the center of the output beam resides. Hence, only three parameters are required at the output for defining any line in two dimensions. Figure 3 illustrates the programming procedure for all-optical classification of the dataset, consisting of 1200 training and 300 test chest radiography images, equally sampled from patients with and without COVID-19 diagnosis.46

Before programming the system, a linear classifier received the distribution of center locations without any control patterns and drew a classification boundary between positive and negative samples. As the transform is random, the classifier could only produce training and test accuracies around 50%. Then the decision boundary was kept the same, and the training accuracy was improved by optimizing the PPs on the SLM with the surrogate model to separate the center location distributions for samples with positive and negative labels, as shown in Video 2. This procedure improved the accuracy on the test set from 46% to 77%, as shown in Table 1. This performance, realized all-optically with only 55 TPs, compares favorably to LeNet-5, which uses about 61,000 parameters. A state-of-the-art, pretrained ANN (EfficientNetB6, Ref. 47) achieves an 88.3% test accuracy when fine-tuned for the same task. Similarly, on the task of classifying skin lesions of equally sampled benign (nevus) and malignant (melanoma) case images,48,49 with 1200 training and 300 test samples, the all-optical system yields a 61.3% test accuracy.
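The all-optical decision rule can be sketched in a few lines. This is an illustrative sketch only: the line parameterization `a*x + b*y + c = 0` (the three output parameters mentioned above) and the function names are assumptions, and the camera image is replaced by a plain array.

```python
import numpy as np

def beam_center(intensity):
    """Center of mass (x_c, y_c) of a 2D intensity image, in pixel units."""
    ys, xs = np.indices(intensity.shape)
    total = intensity.sum()
    return (xs * intensity).sum() / total, (ys * intensity).sum() / total

def classify(center, line):
    """Return 1 if the center lies on the positive side of a*x + b*y + c = 0, else 0."""
    a, b, c = line
    x, y = center
    return int(a * x + b * y + c > 0)
```

The line is fitted once on the unprogrammed system and then held fixed, so the programming procedure only moves the distribution of beam centers relative to it.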

Table 1. Comparison between neural networks and all-optical classification system.


2.3 Different Wavefront Shaping Approaches for Programming the Optical Transform

For the two experiments shown previously, the optical transform was programmed through the multiplication of fields by encoding data and PPs as separate terms [the data and control terms in Eq. (2)], as shown in Fig. 2 and detailed in Fig. 4(a). In this section, we demonstrate that the transform can also be programmed with two additional ways of wavefront shaping, as depicted in Fig. 4.

Fig. 4. Programming the optical transform using (a) phase addition and amplitude modulation, (e) multiplication with phase, and (i), (m) convolution. (b), (f), (j), (n) Example of programmed patterns on the SLM and recorded intensity patterns after the propagation inside the optical fiber for the given input pattern; for (f), (j), and (n), the intensity is not modulated. (c), (g), (k), (o) depict the progression of training accuracies during programming iterations. The confusion matrices on (d), (h), (l), (p) illustrate the classification performance of the programmed optical transform with different methods.

For the modification of the phase, the control pattern was again formed as described in Eq. (2). This function is then digitally multiplied elementwise with the input image from the dataset, and the product is placed on the SLM as a phase pattern, as visualized in Fig. 4(f). After optimization of the PPs, the test accuracy on the subset of Fashion-MNIST reached 78%.

Alternatively, convolutional filters can be used to amplify or attenuate different parts of the angular spectrum of the field and hence its mode decomposition inside the MMF. Importantly, convolutional filters can be applied fully optically by filtering in the Fourier plane.50 Figure 4(k) depicts that convolution can also program the nonlinear propagation, reaching 79% test accuracy on the same dataset when each element of the convolution kernel is set as a PP. In this case, the elements of the convolution kernel are the PPs, and the field on the SLM is modulated by each sample of the dataset after it is filtered with this kernel. Similarly, on 1200 training and 300 test samples from the MNIST-digits dataset, 94.3% accuracy could be reached with the same approach, which is comparable to the 94.9% accuracy of a nine-layer digital ANN, demonstrating that different wavefront shaping strategies can realize the enhanced interactions within the optical fiber.

2.4 Transferring Programming Parameters across Different Tasks and Datasets

The optimization of PPs from scratch requires processing the selected dataset on the experimental system more than 100 times while modifying the PPs. At the frame rate of the present setup, this process takes a few hours for a dataset of 1500 images. In addition to switching to faster optoelectronic devices, the ability to transfer previously optimized PPs to new tasks or datasets would boost the practical utility of this approach as a general-purpose component that can be quickly deployed on different problems. This ability is first demonstrated with the reusability of PPs on different tasks for the same dataset. After finding the set of optimal PPs for the task of classifying the gender of the person in an image from the Celebrity Face Attributes dataset (CelebA),40 the same set of PPs is used for determining the age of the person. The only training required for the transfer between tasks is the determination of RWs without any new surrogate optimizations involving the optical system. Table 2 compares the performance of the PP transfer between the age and gender tasks with that of fully programming the system for each task separately, showing that the test accuracy with the parameter transfer closely follows the accuracy of programming from scratch. Without programming the fiber, the test accuracy on the age classification task is 59.0%, and 2026 RWs are used. After optimizing an additional 52 PPs (wavefront shaping and experimental parameters, reaching 2078 TPs), the test accuracy reaches 67.0%, performing better than a digital nine-layer CNN with about 412,000 parameters. When these optimized 52 PPs are used on the gender classification task on the same dataset with only the RWs retrained, the accuracy on the new task is 76.0%, which is similar to the 76.3% achieved by programming the system from scratch on the gender task. The same findings hold true when the initial programming is done on the gender task and the parameters are transferred to the age task.

Table 2. Performance of different CNNs and optical computing methods on the CelebA dataset.


Furthermore, we find that with transfer learning,51 the optimized PPs can also be utilized with a different dataset after only a short corrective programming. The PPs optimized for the COVID-19 classification dataset with the all-optical approach are transferred to the task of classifying skin lesions between benign (nevus) and malignant (melanoma) case images48 (Fig. 5). However, directly transferring the PPs from the former to the latter resulted in a test accuracy of 47.67%, which is similar to a random prediction. In corrective programming, a smaller set of parameters (11 in total) is designated for adjusting the previously acquired set of PPs. These 11 parameters are combined with the 52 PPs by repeating each element multiple times and adding the result elementwise. Thus an optimal set of PPs is found in the proximity of the initial expectation by optimizing in a lower-dimensional search space. Decreasing the dimensionality enables convergence in fewer iterations. Compared to the complete programming of the system in 300 iterations, corrective programming starts from a similar initial accuracy and reaches the same final test accuracy after 80 iterations.
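The way a short correction vector can act on a longer PP vector is sketched below. How the 11 elements are distributed over the 52 PPs is not specified in the text, so the ceiling-based repetition count and the truncation to length are assumptions, and `apply_correction` is a hypothetical name.

```python
import numpy as np

def apply_correction(base_pps, correction):
    """Expand a short correction vector by repeating each element, then add it."""
    base_pps = np.asarray(base_pps, dtype=float)
    correction = np.asarray(correction, dtype=float)
    reps = -(-len(base_pps) // len(correction))  # ceiling division
    expanded = np.repeat(correction, reps)[: len(base_pps)]
    return base_pps + expanded
```

Only the 11 correction values would be exposed to the surrogate optimizer, while the 52 transferred PPs stay fixed, so the search takes place in an 11-dimensional space around the previous solution.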

Fig. 5. Using previously dedicated parameters on a new dataset with corrective programming. (a) Procedure for transferring the PPs. (b)–(d), (h), (i) The experiment when the PPs are fully programmed without any prior knowledge. (e)–(g), (j), (k) Corrective programming of parameters. (b), (e) Relation between wavefront shaping parameters projected to two dimensions and the training accuracy. (c), (f) Peak power of pulses at the fiber entrance. (d), (g) Color bar for coding the iteration number related to each data point on (b), (c), (h) and (e), (f), (j). (h), (j) Training accuracy during the progress of the programming procedure. (i), (k) Confusion matrix and average accuracy on the test set.

Fig. 6. Dependency of the training accuracy on the CelebA gender classification task, diffracted beam shape, and spectrum on the optical intensity level, with all other PPs set to zero. (a) Camera images for the same input image and the task accuracy for different pulse peak powers. (b) Optical spectrum after propagating in the fiber at different power levels for the same sample from the dataset.

2.5 Role of Nonlinearity in the Optical Transform

Nonlinear activation functions between linear mappings in NNs allow them to approximate complex, nonlinear functions. Without nonlinearities, an NN would be limited to representing only linear transformations of the input data, which would severely limit its ability to model real-world data and solve complex problems. Similarly, in the proposed method, nonlinearities play a crucial role in the generalization performance. In addition to fixed nonlinearities acting on the input data, such as phase modulation and intensity detection, optical nonlinear effects provide controllable means to introduce nonlinearity to the information transform. One of the main factors affecting the extent of optical nonlinearity is the intensity level of the beam. In Fig. 6, the effect of the peak power of laser pulses on the optical and data processing characteristics of the experiment is analyzed. Since the pulse length and repetition rate of the laser are measured beforehand, the peak power level is calculated from the average laser power by dividing it by the pulse length and repetition rate. In accordance with Kerr nonlinear effects in graded-index MMFs, the spectra become broader with higher peak powers, and the intensity of the beam concentrates toward the center due to Kerr beam self-cleaning. This also affects the performance of the computational task, and, as Fig. 6(a) depicts, up to a peak power level of 7 kW, increased nonlinearity improves accuracy. Above this value, performance decreases monotonically, possibly due to the deleterious effect of beam self-cleaning on the modal distribution. This process couples the energy from higher-order modes to the fundamental mode.52 Depending on the original distribution of energy between propagation modes, the optimal power for the task can change. For instance, in contrast to the one in Fig. 6, the experiment in Fig. 2 utilized PPs other than the intensity level, and especially with oblique coupling, higher-order modes are excited more; hence, the optimal peak power level is found to be much higher, around 13 kW.
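The peak power relation stated above can be checked with a short calculation. The default values are the 10 ps pulse length and 125 kHz repetition rate given for the laser; the rectangular-pulse approximation (no pulse-shape factor) and the function names are assumptions of this sketch.

```python
def peak_power_w(avg_power_w, pulse_length_s=10e-12, rep_rate_hz=125e3):
    """Peak power of a pulse train: average power / (pulse length * repetition rate)."""
    return avg_power_w / (pulse_length_s * rep_rate_hz)

def average_power_w(peak_w, pulse_length_s=10e-12, rep_rate_hz=125e3):
    """Inverse relation: average power that corresponds to a given peak power."""
    return peak_w * pulse_length_s * rep_rate_hz
```

Under these assumptions, the 7 kW optimum in Fig. 6 corresponds to an average power of 8.75 mW, and the 13 kW level found in the experiment of Fig. 2 corresponds to 16.25 mW.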

3 Discussion

3.1 Computation Speed and Energy

The speed of inferences is limited by the refresh rate of the liquid crystal SLM. This limitation can be overcome by switching to a faster wavefront shaping method, for instance, by utilizing commercial digital micromirror devices, which can reach 30,000 frames per second.53 Since the number of modes in the MMF is much smaller than the number of pixels on commercial SLMs, different lines of the SLM could be scanned with the beam by a resonant mirror, allowing a data input rate of up to 25 million samples per second. Moreover, the fixed complex modulation or convolution operations can be implemented with optical phase masks, bringing the digital operation count further down. Similarly, instead of the digital readout layer, a broadband diffractive element can realize the linear projection step. As analyzed in Note 2 in the Supplementary Material and visualized in Fig. 7, by implementing the same optical computer with a selection of commercially available, high-speed equipment, such as digital micromirror devices and quadrant photodiodes, a performance of 25 TFLOP/s could be reached with a total power consumption of 12.6 W, which is significantly lower than the 300 W consumption of a GPU with comparable performance.54

Fig. 7. Power efficiency and speed comparison between different computational approaches. The possible optimization refers to incorporating a digital micromirror device, a resonant mirror, and an optical phase mask in the optical computer.

3.2 Stability and Reproducibility

The reproducibility of experiments is crucial for consistent comparison between different sets of PPs during programming and long-term usability of determined PPs. To investigate reproducibility, the inference experiment is repeated every 5 min for the same PPs and RWs on the same task over 15 h. As shown in Fig. S1 of the Supplementary Material, the first and final test accuracies are the same, and the standard deviation of the test accuracy over time is 0.3%, indicating a very stable experimental inference.

In conclusion, programming nonlinear propagation inside MMFs with wavefront shaping techniques can exploit complex optical interactions for computation purposes and achieve results on par with multilayer neural networks while decreasing the number of parameters by more than 97% and potentially consuming orders of magnitude less energy for performing the equivalent number of computations. This shows the capacity of nonlinear optics to provide a solution for the exponentially increasing energy cost of machine-learning algorithms. Beyond nonlinear optics, the presented framework could be used for efficiently programming different high-dimensional, nonlinear phenomena for performing machine-learning tasks.

4 Appendix: Programming Procedure

The optical experiment is considered as a whole with the final classifier, called the optical classifier in Fig. 8. For each set of PPs given by the sampling strategy, the optical classifier returns a training score to the surrogate model. First, the data are transformed by the optical system as detailed in Method 2 in the Supplementary Material. For the experiments that perform classification with grating-dispersed fiber output images, those images are first downsampled from 180 × 180 to 45 × 45 by average pooling. Then these downsampled images are flattened to 2025 features, and the ridge classification algorithm from Python’s scikit-learn library is used for the determination of RWs with an L2 regularization strength, alpha, set to 3000. For all-optical classification experiments, the output beam shape is imaged onto the camera without grating dispersion, and the center of mass, $(x_c, y_c)$, of the beam shape, $I(x, y)$, is calculated by $x_c = \sum_{x,y} x\, I(x,y) / \sum_{x,y} I(x,y)$, $y_c = \sum_{x,y} y\, I(x,y) / \sum_{x,y} I(x,y)$. This is the same information as the one provided by simple beam location sensors. In the first iteration, the classification line in 2D is drawn similarly with the ridge classification algorithm, but using only these two features; it is trained only in the first iteration and kept fixed for the rest of the iterations. For each iteration, the accuracy on the training set becomes another sampling point for the surrogate model. This model is a cubic radial basis function with a linear tail, implemented with the Python Surrogate Optimization Toolbox (pySOT) and initiated by sampling Latin hypercube points, the number of which is set by M, the number of parameters to be optimized. After the initial fixed sampling, the DYCORS55 sampling strategy explores the parameter space for the optimal set of parameters.
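The structure of this procedure can be illustrated with a self-contained NumPy sketch. It does not use pySOT and is not DYCORS: the candidate search below is a much-simplified stand-in that perturbs the best point so far and evaluates the candidate the surrogate predicts to be best. The initial sample count of 2M + 1, the perturbation size, the unit-cube parameter scaling, and all function names are assumptions.

```python
import numpy as np

def latin_hypercube(n_points, dim, rng):
    """One sample per stratum in every dimension of the unit cube."""
    cols = [(rng.permutation(n_points) + rng.random(n_points)) / n_points
            for _ in range(dim)]
    return np.stack(cols, axis=1)

def fit_cubic_rbf(x, f):
    """Cubic RBF interpolant with a linear polynomial tail; returns a predictor."""
    n, d = x.shape
    phi = np.linalg.norm(x[:, None] - x[None, :], axis=2) ** 3
    tail = np.hstack([np.ones((n, 1)), x])
    system = np.block([[phi, tail], [tail.T, np.zeros((d + 1, d + 1))]])
    rhs = np.concatenate([f, np.zeros(d + 1)])
    sol = np.linalg.lstsq(system, rhs, rcond=None)[0]
    lam, c = sol[:n], sol[n:]

    def predict(q):
        r = np.linalg.norm(q[:, None] - x[None, :], axis=2) ** 3
        return r @ lam + c[0] + q @ c[1:]

    return predict

def program(loss, dim, n_iter, rng, n_candidates=200, step=0.1):
    """Surrogate-guided search; loss(pps) would be the negative training accuracy."""
    x = latin_hypercube(2 * dim + 1, dim, rng)  # initial design (assumed size)
    f = np.array([loss(p) for p in x])
    for _ in range(n_iter):
        predict = fit_cubic_rbf(x, f)
        best = x[np.argmin(f)]
        cand = np.clip(best + step * rng.standard_normal((n_candidates, dim)), 0, 1)
        new = cand[np.argmin(predict(cand))]  # most promising candidate
        x = np.vstack([x, new])
        f = np.append(f, loss(new))  # one new "experiment" per iteration
    return x[np.argmin(f)], f.min()
```

In the experiment, each call to `loss` would correspond to passing the whole training set through the optical system with one set of PPs, which is why keeping the number of evaluations low matters more than the cost of refitting the surrogate.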

Ilker Oguz is a doctoral student at the Doctoral Program in Photonics, École Polytechnique Fédérale de Lausanne (EPFL), Switzerland. Currently, he works on efficient physical computing architectures and training algorithms. Previously, he finished his bachelor's degree in electrical engineering at Middle East Technical University (METU), Turkey, in 2018, and his master's degree in bioimaging in the Department of Information Technology and Electrical Engineering, ETH Zürich, Switzerland, in 2020, as an awardee of Excellence Scholarship and Opportunity Program.

Jih-Liang Hsieh is a PhD student at Swiss Federal Institute of Technology Lausanne (EPFL), Switzerland. He specializes in developing photonics neural networks and optical computing systems. He employs both nonlinear and linear optical manipulation techniques to harness the power of light in either optical fibers or free space. His work aims to revolutionize computing paradigms and drive innovation toward a more sustainable and energy-efficient future for computational technologies.

Niyazi Ulas Dinc is a postdoctoral researcher at École Polytechnique Fédérale de Lausanne, Switzerland. He obtained his BSc degrees in electrical engineering and physics from METU, Turkey, and his MSc and PhD degrees in microengineering from EPFL, Switzerland. He is currently working on computer-generated optical volume elements to achieve the desired mapping of arbitrary input–output fields in the spatiotemporal domain for computing and beam shaping purposes.

Uğur Teğin is an assistant professor in electrical and electronics engineering at Koç University. He received his BSc and MSc degrees from Bilkent University, Ankara, Turkey, in 2015 and 2018, respectively. He obtained his PhD from EPFL, Lausanne, Switzerland, in 2021. He then pursued his postdoctoral studies in medical and electrical engineering at the California Institute of Technology, United States. His research interests include nonlinear optics, optical computing, machine learning, fiber optics lasers, and ultrafast optics.

Mustafa Yildirim earned his bachelor's degree from the Middle East Technical University and followed up with his master's degree from EPFL, both in electrical engineering. Currently, he is engaged as a doctoral candidate at EPFL's Doctoral Program in Photonics. His primary focus lies in advancing optics-based neural network architectures, aiming for efficient and sustainable compute solutions.

Carlo Gigli is a postdoctoral researcher at the Laboratory of Applied Photonics Devices, EPFL, Lausanne. He received his MS degree in physical engineering from Politecnico di Torino and Université Paris Diderot in 2017, and his PhD in physics from Université de Paris with a thesis on the design, fabrication, and characterization of dielectric resonators and metasurfaces for nonlinear optics. His current research activity focuses on AI-assisted bioimaging and photonic device design.

Christophe Moser is a full professor at the Institute of Electrical and Microengineering and currently the director of the Microengineering Section at EPFL. He obtained his physics diploma degree from EPFL and his PhD from California Institute of Technology. He was the CEO of Ondax, Inc., prior to joining EPFL. His current research topics include light-based additive manufacturing (tomographic volumetric and two-photon) and neuromorphic computing using linear and nonlinear propagation in optical fibers.

Demetri Psaltis received his BSc, MSc, and PhD degrees from Carnegie-Mellon University, Pittsburgh, Pennsylvania, United States. He is a professor of optics and a director of the Optics Laboratory at EPFL, Switzerland. His research interests include imaging, holography, biophotonics, nonlinear optics, and optofluidics. He has authored or coauthored more than 400 publications in these areas. He was the recipient of the International Commission of Optics Prize, the Humboldt Award, the Leith Medal, and the Gabor Prize.

[1] B. Fasel. An introduction to bio-inspired artificial neural network architectures. Acta Neurol. Belg., 2003, 103(1): 6-12.

[31] F. Duport, et al. All-optical reservoir computing. Opt. Express, 2012, 20(20): 22783.

[35] U. Teğin, et al. Scalable optical learning operator. Nat. Comput. Sci., 2021, 1(8): 542-549.

Ilker Oguz, Jih-Liang Hsieh, Niyazi Ulas Dinc, Uğur Teğin, Mustafa Yildirim, Carlo Gigli, Christophe Moser, Demetri Psaltis. Programming nonlinear propagation for efficient optical learning machines[J]. Advanced Photonics, 2024, 6(1): 016002.