Target-independent dynamic wavefront sensing method based on distorted grating and deep learning

1. Introduction

Phase diversity (PD) methods eliminate the ambiguity of phase recovery by using two (or more) images and have the advantage of a simple optical structure. Such methods are suitable for both point-source and extended-source imaging and can recover continuous as well as discontinuous phase distributions. Therefore, the PD algorithm has been widely used in image reconstruction[1,2] and image-based wavefront sensing[3–6]. However, PD algorithms based on numerical iteration are computationally heavy and inefficient, which limits their use in scenarios that require real-time wavefront sensing. Moreover, such methods are easily trapped in a local optimum and give inaccurate results when the phase aberration is large.

In recent years, deep learning has been introduced into image-based wavefront sensing[7–12] to fit the nonlinear mapping between the intensity image and the wavefront phase. These methods avoid a time-consuming iterative process and, after appropriate training, can restore larger wavefront aberrations without the local-minimum problem. Current studies mainly focus either on point-target imaging scenes[7–9,11] or on specific extended-target imaging scenes (such as the MNIST dataset)[10,12]. However, once the imaging scene is switched, the neural network model can hardly complete the wavefront sensing task effectively. Some network models improve generalization by increasing the diversity of training scenes[10,13], but they require heavy computation, which makes them time-consuming and difficult to apply in real-world situations. When deep learning is used for extended-target wavefront sensing, the phase cannot be restored directly if both the imaging target and the wavefront phase are unknown. It is therefore important to develop an image-based wavefront sensing method that is independent of the imaging target. Such a method can be applied to both point-source and arbitrary extended-source imaging, especially in scenarios where the target in the image changes dynamically, e.g., remote imaging or laser beam transmission in which the distance between the target and the observer is changing.

Qi et al.[14] proposed a time-domain feature, obtained through mathematical operations, to realize target-independent wavefront sensing. Li et al.[15] used sharpness information from the in-focus and defocus images to remove the dependence on the imaged target. These methods provide a feasible route to extended-target wavefront sensing. However, collecting the in-focus and defocus images usually requires either a beamsplitter and two cameras working synchronously at the same frequency or a single camera that is moved to capture the images in two steps, which limits these methods in applications that demand dynamic wavefront sensing. Blanchard et al. first proposed a diffractive element called a distorted grating and applied it to curvature wavefront sensing[16,17]. The distorted grating is widely used in multi-plane imaging, fluid velocity measurement, and particle tracking because of its real-time performance and high efficiency. Compared with a beamsplitter, the distorted grating ensures that the captured images strictly correspond to one another.

In this Letter, we propose a target-independent wavefront sensing method based on a distorted grating and deep learning. The distorted grating is designed to acquire the positive and negative defocus images simultaneously on a single imaging plane. A normalized fine feature is then defined that removes the target information while keeping the wavefront information, and a lightweight and efficient network combined with an attention mechanism (AM-EffNet) is used to invert the wavefront aberration. In particular, targets in different scenarios are considered, and the optimal defocus amount of the distorted grating is quantitatively analyzed. The results verify that our method offers advantages in accuracy and efficiency, which gives it potential for more complicated wavefront sensing tasks.

2. Design of Distorted Grating

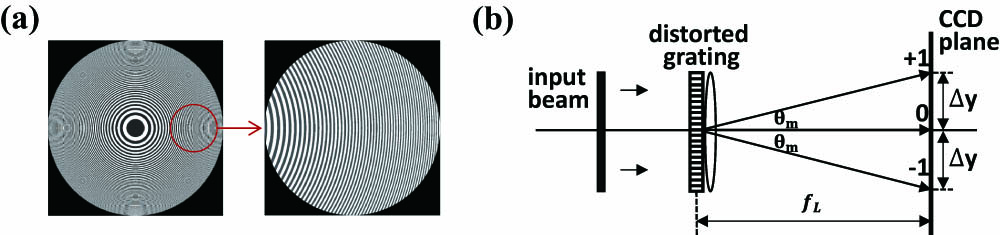

When the PD method is used for wavefront sensing, at least two images must be acquired synchronously within the same exposure time. In this Letter, a distorted grating is designed to achieve this. Such an optical element is, in fact, an off-axis Fresnel zone plate[16].

In Ref. [16], the defocus amount $W_{20}$ represents the defocusing ability of the grating, $W_{20} = R^2/(2 f_g)$, and the off-axis amount $x_0$ is the distance from the pupil center to the center of the Fresnel zone plate, $x_0 = \lambda R^2/(2 d_0 W_{20})$, where $R$ is the radius of the grating, $f_g$ is the focal length of the grating, $\lambda$ represents the incident wavelength, and $d_0$ represents the grating period at the center of the aperture.
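As a quick numerical check of how these quantities relate, the sketch below assumes the relations commonly quoted for an off-axis Fresnel zone plate, $f_g = R^2/(2 W_{20})$ and $x_0 = \lambda R^2/(2 d_0 W_{20})$. The function name and all numerical values are hypothetical and are not the design values of this Letter.

```python
from dataclasses import dataclass


@dataclass
class GratingGeometry:
    focal_length: float   # f_g, first-order focal length of the grating (m)
    off_axis: float       # x_0, offset of the zone-plate center from the pupil center (m)


def grating_geometry(wavelength, radius, defocus, period):
    """Derive f_g and x_0 from W20 under the assumed zone-plate relations."""
    focal_length = radius ** 2 / (2.0 * defocus)                    # f_g = R^2 / (2 W20)
    off_axis = wavelength * radius ** 2 / (2.0 * period * defocus)  # x_0 = lambda R^2 / (2 d0 W20)
    return GratingGeometry(focal_length, off_axis)


# Hypothetical example values (assumptions, not taken from the Letter).
WAVELENGTH = 632.8e-9          # m
RADIUS = 5e-3                  # m
DEFOCUS = 2.0 * WAVELENGTH     # W20 of two waves, expressed in meters
PERIOD = 50e-6                 # m, grating period at the aperture center

geometry = grating_geometry(WAVELENGTH, RADIUS, DEFOCUS, PERIOD)
print(f"f_g = {geometry.focal_length:.3f} m, x_0 = {geometry.off_axis * 1e3:.1f} mm")
```

Under these assumptions the two relations combine to $x_0 = \lambda f_g / d_0$, so a weaker grating (longer $f_g$) places the zone-plate center farther from the pupil.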

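In this Letter, we further consider two key parameters: the lateral distance and the splitting ratio. As shown in Fig. 1, when the incident light is normal to the distorted grating, the grating equation is $d_0 \sin\theta_m = m\lambda$, where $d_0$ is the grating period at the center of the aperture, $\lambda$ is the incident wavelength, and $\theta_m$ is the diffraction angle of the $m$th order.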

Fig. 1. (a) Schematic of the distorted grating and (b) geometric relationship of the ±1st diffraction order imaging positions.
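To make the geometry in Fig. 1(b) concrete, the following sketch uses the standard normal-incidence grating equation $d \sin\theta_m = m\lambda$ and a thin-lens approximation in which the $m$th order lands at $f \tan\theta_m$ from the zeroth order. Taking the lateral distance to mean this offset is an assumption, and the function name and numbers are hypothetical.

```python
import math


def first_order_offset(wavelength, period, focal_length, order=1):
    """Lateral image offset of a diffraction order behind a lens (thin-lens sketch)."""
    sin_theta = order * wavelength / period        # grating equation at normal incidence
    if abs(sin_theta) >= 1.0:
        raise ValueError("this order is evanescent for the given period")
    theta = math.asin(sin_theta)
    return focal_length * math.tan(theta)


# Hypothetical values, chosen only to illustrate the scale.
offset_plus = first_order_offset(632.8e-9, 50e-6, 0.3, order=+1)
offset_minus = first_order_offset(632.8e-9, 50e-6, 0.3, order=-1)
print(f"+1 order: {offset_plus * 1e3:.3f} mm, -1 order: {offset_minus * 1e3:.3f} mm")
```

For small diffraction angles the offset is close to $f\lambda/d$, so the grating period at the aperture center can be chosen to keep the three images separated on the detector without overlap.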

Another key parameter is the splitting ratio, which determines the diffraction efficiencies of the 0 and ±1 diffraction orders. To make the light intensity of the three images as uniform as possible under the same exposure, the step width is set as a bisection (half of the local grating period), and the height of the phase step is set as [18].
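The equal-intensity condition can be illustrated with the textbook result for an ideal binary phase grating with a 50% duty cycle, where $\eta_0 = \cos^2(\phi/2)$ and $\eta_{\pm 1} = (2/\pi)^2 \sin^2(\phi/2)$ for a phase step $\phi$. This is a sketch under that idealization; whether it matches the step height used in this Letter is not confirmed by the text above.

```python
import math


def binary_grating_efficiencies(phase_step):
    """Return (eta_0, eta_1) for an ideal 50% duty-cycle binary phase grating."""
    eta_zero = math.cos(phase_step / 2.0) ** 2
    eta_first = (2.0 / math.pi) ** 2 * math.sin(phase_step / 2.0) ** 2
    return eta_zero, eta_first


# eta_0 = eta_1  <=>  tan(phi / 2) = pi / 2
equal_phase = 2.0 * math.atan(math.pi / 2.0)
eta_zero, eta_first = binary_grating_efficiencies(equal_phase)
print(f"phase step = {equal_phase / math.pi:.3f} pi")
print(f"eta_0 = {eta_zero:.4f}, eta_+1 = eta_-1 = {eta_first:.4f}")
```

In this idealized model the three orders each carry a little under 29% of the incident energy, with the remainder going to higher odd orders.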

In our work, the distorted grating is designed based on the principles described above. The designed parameters are the wavelength , the lens focal length , the defocus amount , the entrance pupil radius , the lateral distance , and the off-axis amount .

The structure of the proposed target-independent wavefront sensing method is shown in Fig. 2. First, Zernike coefficients conforming to the Kolmogorov turbulence distribution are randomly generated to produce the phase screen. Then, the aberrated targets are imaged through the distorted grating, and features that are independent of the targets are extracted as the input of the neural network. Finally, the network outputs the Zernike coefficients of the wavefront.

3. Feature Extraction of Arbitrary Target

The intensity distribution of the image captured by a camera can be expressed as
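In the usual incoherent imaging model, the recorded image is the target convolved with the point spread function plus noise, $i = o * h + n$, so in the Fourier domain the target spectrum multiplies both defocus channels equally. The sketch below illustrates the general principle behind target-independent features with a simple noise-free ratio $(I_+ - I_-)/(I_+ + I_-)$ on 1D toy data. It is not the normalized fine feature defined in this Letter, and all names and numbers are hypothetical.

```python
import cmath


def dft(signal):
    """Plain O(N^2) discrete Fourier transform, enough for a tiny demo."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def circular_convolve(obj, psf):
    """Circular convolution i = o * h, the noise-free imaging model."""
    n = len(obj)
    return [sum(obj[s] * psf[(t - s) % n] for s in range(n)) for t in range(n)]


def ratio_feature(image_plus, image_minus):
    """(I+ - I-) / (I+ + I-) per spatial frequency; the object spectrum cancels."""
    spec_plus, spec_minus = dft(image_plus), dft(image_minus)
    return [(p - m) / (p + m) for p, m in zip(spec_plus, spec_minus)]


# Toy 1D PSFs for the two defocus channels (hypothetical numbers).
psf_plus = [0.6, 0.2, 0.1, 0.0, 0.0, 0.0, 0.0, 0.1]
psf_minus = [0.5, 0.1, 0.0, 0.0, 0.0, 0.0, 0.1, 0.3]

# Two different targets; a dominant first sample keeps every spectrum nonzero.
target_a = [10.0, 1.0, 0.5, 0.0, 1.0, 0.2, 0.3, 1.0]
target_b = [8.0, 0.0, 2.0, 1.0, 0.0, 0.5, 0.0, 0.5]

feature_a = ratio_feature(circular_convolve(target_a, psf_plus),
                          circular_convolve(target_a, psf_minus))
feature_b = ratio_feature(circular_convolve(target_b, psf_plus),
                          circular_convolve(target_b, psf_minus))
max_difference = max(abs(a - b) for a, b in zip(feature_a, feature_b))
print(f"largest feature difference between targets: {max_difference:.2e}")
```

The two targets give the same feature up to rounding error because the ratio depends only on the two transfer functions. With real data, noise and frequencies where the target spectrum vanishes make such ratios ill-conditioned, which is one reason a normalized, more robust feature is attractive.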

4. Proposed Neural Network

A lightweight and efficient network based on an attention mechanism (AM-EffNet) is proposed to achieve efficient dynamic wavefront restoration. As shown in Fig. 3(a), the proposed network consists of three EffNet-blocks, three attention mechanisms, and one fully connected layer. Since the feature map is centrosymmetric, using half of the image () as input provides the same information with fewer network parameters and computations than the full-size image. Piston and tip/tilt aberrations are not considered in our work, and the network outputs the 4th to 35th order Zernike coefficients.
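The claim that half of a centrosymmetric map carries all the information can be checked directly. The sketch below assumes symmetry about the array center and keeps the top half of the rows; which half the network actually receives is not stated here, and the helper names and the 4 x 4 map are hypothetical.

```python
def is_centrosymmetric(image, tol=1e-12):
    """True if image[r][c] equals image[R-1-r][C-1-c] for every pixel."""
    rows, cols = len(image), len(image[0])
    return all(abs(image[r][c] - image[rows - 1 - r][cols - 1 - c]) <= tol
               for r in range(rows) for c in range(cols))


def top_half(image):
    """Keep the top half of the rows (assumes an even number of rows)."""
    return [row[:] for row in image[: len(image) // 2]]


def rebuild_from_half(half):
    """Recover the full image by appending the point-reflected half."""
    mirrored = [row[::-1] for row in reversed(half)]
    return [row[:] for row in half] + mirrored


# Hypothetical 4 x 4 feature map that is symmetric about its center.
feature = [[1, 2, 3, 4],
           [5, 6, 7, 8],
           [8, 7, 6, 5],
           [4, 3, 2, 1]]
half = top_half(feature)
zernike_indices = list(range(4, 36))   # 4th to 35th coefficients, i.e. 32 outputs
print(is_centrosymmetric(feature), rebuild_from_half(half) == feature, len(zernike_indices))
```

Because the full map can be rebuilt exactly from the half, halving the input removes redundancy rather than information, and the output layer needs 32 units for the 4th to 35th coefficients.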

Figure 3(b) shows the structure of the EffNet-block and the overall attention process. The EffNet-block replaces the traditional convolution with a depth-wise separable convolution, which reduces the computational complexity and speeds up inference. Channel and spatial attention modules are applied sequentially to learn “what” and “where” to attend along the channel and spatial axes, respectively[19]. $F$ represents the input feature maps, $M_c$ expresses the channel attention module, $M_s$ expresses the spatial attention module, $\otimes$ denotes the element-wise multiplication, and $F'$ and $F''$ express the result of the channel attention and the final refined output, respectively.
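The saving from a depth-wise separable convolution is easy to quantify by counting weights. The sketch below compares a standard $k \times k$ convolution with a depth-wise $k \times k$ filter followed by a $1 \times 1$ point-wise convolution. The layer size is hypothetical, since the channel widths of AM-EffNet are not given in this section, and the exact factorization used inside the EffNet-block may differ.

```python
def standard_conv_params(kernel, in_channels, out_channels):
    """Weights of a k x k convolution (bias ignored)."""
    return kernel * kernel * in_channels * out_channels


def separable_conv_params(kernel, in_channels, out_channels):
    """Depth-wise k x k filter per input channel, then a 1 x 1 point-wise mix."""
    depthwise = kernel * kernel * in_channels
    pointwise = in_channels * out_channels
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernel, 32 input channels, 64 output channels.
standard = standard_conv_params(3, 32, 64)
separable = separable_conv_params(3, 32, 64)
print(standard, separable, round(standard / separable, 1))
```

For this example the separable form needs roughly one eighth of the weights, and the multiply-accumulate count per output pixel shrinks by the same factor.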

The AM-EffNet is implemented in the PyTorch deep learning framework and trained on an NVIDIA GeForce 3070 GPU with the Adam optimizer at an initial learning rate of 0.001 for 150 epochs. The mean-square error (MSE) of the predicted Zernike coefficients is used as the loss function, and the batch size is set to 32.
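For reference, the MSE regression loss averages the squared coefficient errors over every output in the batch, which is also the default mean reduction of PyTorch's `nn.MSELoss`. The plain-Python sketch below uses hypothetical values and only three coefficients per sample for brevity, whereas the network outputs 32.

```python
def mse_loss(predicted, target):
    """Mean of squared errors over every coefficient in the batch."""
    squared = [(p - t) ** 2
               for pred_row, true_row in zip(predicted, target)
               for p, t in zip(pred_row, true_row)]
    return sum(squared) / len(squared)


# Two hypothetical samples with three coefficients each.
predicted = [[0.10, -0.20, 0.05], [0.00, 0.30, -0.10]]
target = [[0.10, -0.10, 0.05], [0.10, 0.30, -0.10]]
loss = mse_loss(predicted, target)
print(f"MSE = {loss:.5f}")
```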

The designed distorted grating is used to generate 24,000 pairs of data, which are divided in a 10:1:1 ratio into training, validation, and test sets. Each pair consists of a feature image and its label, i.e., the wavefront aberration represented by the 4th to 35th order Zernike coefficients, which conform to the Kolmogorov turbulence distribution with different degrees of distortion [original wavefront root-mean-square (RMS) ranging from 0 through ].
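The 10:1:1 split of 24,000 pairs works out to whole numbers, as the small sketch below confirms. The helper name is hypothetical, and how the pairs are shuffled before splitting is not specified here.

```python
def split_counts(total, ratios):
    """Split `total` samples according to integer `ratios` (must divide evenly)."""
    unit, remainder = divmod(total, sum(ratios))
    if remainder:
        raise ValueError("total is not divisible by the sum of the ratios")
    return [unit * r for r in ratios]


n_train, n_val, n_test = split_counts(24_000, (10, 1, 1))
print(n_train, n_val, n_test)  # 20000 2000 2000
```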

Notably, the proposed method does not need multiple targets for training. It relies only on simple point-target scenes to collect the positive and negative defocus images for fine feature extraction and then uses the AM-EffNet to establish an accurate mapping between the features and the incident wavefronts. Once the network is well trained, it can be used for wavefront sensing in multiple scenes.

5. Comparison of Feature Extraction Methods

The effectiveness of the proposed normalized fine feature for the wavefront sensing task is verified by comparing it with several other features. Figure 4 compares the different features extracted from the same group of positive and negative defocus extended-target images.

Fig. 4. (a) Time domain feature. The red boxes in (b) are the enumerated power features, and the blue boxes in (c) are the sharpness features. We can see that the normalized fine feature in (d) includes the information of the sharpness and the power features with data distributed between 0 and 1. (e) Comparison of loss for different features in training.

In Fig. 4, the normalized fine feature combines the sharpness feature and the power feature and is normalized, which improves both its numerical stability and its ability to represent detail. The four feature extraction methods are used for wavefront sensing on the same test data (with the same labels) and the same network (AM-EffNet). Figure 4(e) shows the training loss for the different features in Figs. 4(a)–4(d). After testing, the RMSE between the ground truth and the recovered wavefront phase for the methods in Figs. 4(a)–4(d) is , , , and , respectively, which demonstrates that the proposed feature achieves wavefront sensing with higher accuracy.

6. Comparison of Defocus Degree

When the PD method is used for wavefront sensing, the selection of the defocus amount is critical. According to Refs. [20,21], accurate wavefront sensing results are generally obtained when the PV value of the defocus aberration lies within the range of . Based on this, we simulate and design five distorted gratings with different defocus amounts in this range, with a step size of .

With the same network, hyperparameters, and environment, the datasets obtained by distorted grating imaging with the five different defocus amounts are used for training and testing, and the results are shown in Fig. 5. The distorted grating with a defocus amount of has the highest wavefront sensing accuracy, and the RMSE values of the outliers show that, with this defocus amount, our method retains a relatively stable wavefront sensing ability even for large wavefront distortions.

Fig. 5. Residual wavefront at different defocus amounts. The letters A, B, C, D, and E on the abscissa denote gratings with different defocusing degrees, and the numbers 1, 2, and 3 represent three degrees of atmospheric turbulence, with the original wavefront RMS in the ranges of 0–0.5λ, 0.5λ–1.0λ, and 1.0λ–1.5λ, respectively. The red circles represent outliers.

7. Applicability of Different Scenarios

To explore the applicability of this method to other imaging scenes, 2000 groups of mixed data from different targets are used for further testing. The targets include a point target and four types of extended targets, with 400 groups each. The positive and negative defocus images of an extended target are obtained by convolving the target with the positive and negative defocus point spread functions, respectively. To closely simulate application scenarios in which the target is constantly switched and changed, the five types of targets are mixed in a uniformly random order.

Figure 6 shows a group of the wavefront sensing results with our method in each scenario, and Table 1 shows the average restoration results among five scenarios. After testing, the average SSIM between the restored wavefronts and the original wavefronts is 0.948. The RMSE of the residual wavefront is 0.042, the RMSE/Truth is 5.6%, and the inference time is about 2.0 ms. The simulation results show that our method has robust and high-accuracy wavefront sensing capability in a variety of extended target scenes.
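The two error figures quoted above can be computed as sketched below, assuming that RMSE/Truth is the RMS of the residual wavefront divided by the RMS of the true wavefront, as the caption of Fig. 6 suggests. The function names and sample values are hypothetical.

```python
import math


def rms(values):
    """Root-mean-square of a sampled wavefront."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def residual_metrics(true_phase, restored_phase):
    """Return (RMSE of the residual wavefront, RMSE relative to the true RMS)."""
    residual = [t - r for t, r in zip(true_phase, restored_phase)]
    rmse = rms(residual)
    return rmse, rmse / rms(true_phase)


# Hypothetical flattened wavefront samples.
true_phase = [1.0, -1.0, 1.0, -1.0]
restored_phase = [0.9, -0.9, 0.9, -0.9]
rmse, relative = residual_metrics(true_phase, restored_phase)
print(f"RMSE = {rmse:.3f}, RMSE/Truth = {relative:.1%}")
```

Reporting the relative figure alongside the absolute RMSE makes results comparable across turbulence strengths, since a fixed residual matters more for a weakly aberrated wavefront.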

Table 1. Testing Results Based on Our Method

Fig. 6. A group of wavefront sensing results of targets in five different scenarios with our method. The RMSE/Truth represents the ratio of the residual wavefront to the true wavefront.

8. Conclusion

In this Letter, a distorted grating is designed to acquire the positive and negative defocus images of arbitrary targets simultaneously, and normalized fine features that are independent of the imaging target are extracted as the input of the AM-EffNet, which achieves fast wavefront sensing with an inference time of about 2.0 ms. Furthermore, the quantitative analysis shows that the distorted grating with defocus amount has the best wavefront sensing accuracy when the RMS of the original wavefront is within . The simulations show that our method senses wavefronts effectively across diverse application scenarios, which gives it potential for future applications such as image restoration and real-time distortion correction.

Xinlan Ge, Licheng Zhu, Zeyu Gao, Ning Wang, Wang Zhao, Hongwei Ye, Shuai Wang, Ping Yang. Target-independent dynamic wavefront sensing method based on distorted grating and deep learning[J]. Chinese Optics Letters, 2023, 21(6): 060101.