Acta Photonica Sinica, 2023, 52 (1): 0110002, Published online: 2023-02-27

Object Detection Algorithm Based on Dual-modal Fusion Network

Summary

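To address object detection based on the fusion of infrared and visible images, an object detection algorithm based on a dual-modal fusion network is proposed. Given a pair of infrared and visible images as simultaneous input, the designed infrared encoder extracts spatial feature information from the infrared image; the designed visible encoder aggregates features of the visible image along the vertical and horizontal spatial directions and encodes channel relationships using precise position information; finally, the proposed gated fusion network adaptively adjusts the weights assigned to the two feature streams to achieve cross-modal feature fusion. On the KAIST pedestrian dataset, compared with the baseline YOLOv5-n detecting on visible images alone and on infrared images alone, the detection accuracy of the proposed algorithm improves by 15.1% and 2.8%, respectively; compared with the baseline YOLOv5-s, it improves by 14.7% and 3%, respectively; meanwhile, the detection speed reaches 117.6 FPS and 102 FPS on the two baseline models. On the self-built GIR dataset, the proposed algorithm likewise shows clear advantages in detection accuracy and speed. In addition, the algorithm can detect objects in a visible or infrared image supplied on its own, with detection performance clearly improved over the baseline algorithms.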

Abstract

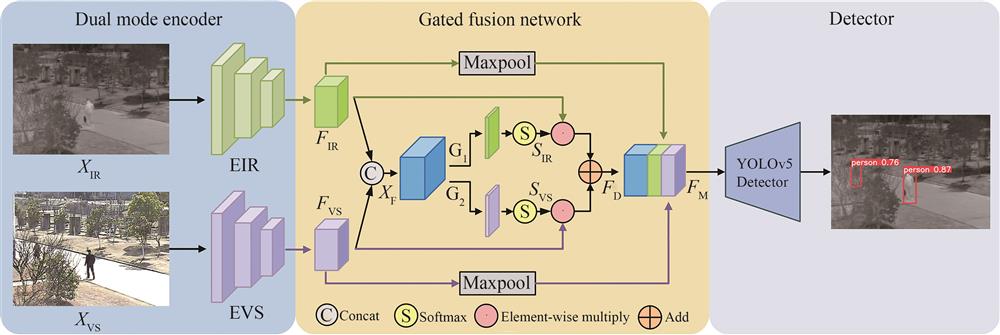

Object detection models are mostly trained on unimodal images relevant to the detection task, but unimodal images alone make it difficult to detect targets in complex real-world scenes. Many researchers have therefore proposed training on multimodal images. Multimodal images, such as Infrared (IR) and Visible (VS) images, have complementary advantages. IR images rely on the heat emitted by targets and are not affected by lighting conditions, but they cannot capture the detailed information of targets. VS images clearly capture the texture features and details of targets, but they are easily affected by lighting conditions. Therefore, for object detection based on the fusion of IR and VS images, an object detection algorithm based on a dual-modal fusion network is proposed. The algorithm takes IR images and VS images as input at the same time. Because IR and VS images differ in how they are formed and in their characteristics, different networks are used to extract their features. IR images are processed by the designed infrared encoder, Encoder-Infrared (EIR), into which the SimAM module is introduced to extract different local spatial information within each channel. This module optimizes an energy function based on neuroscience theory to calculate the importance of each neuron, and extracts spatial feature information by weighting the features accordingly. VS images are processed by the designed visible encoder, Encoder-Visible (EVS), into which the Coordinate Attention (CoordAtt) mechanism is introduced to extract cross-channel information and obtain orientation and position information, enabling the model to extract the feature information of the target more accurately.
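The SimAM weighting described above can be illustrated with a minimal sketch. It assumes the commonly published closed form of SimAM, in which each position's inverse energy grows with its squared deviation from the channel mean and a sigmoid turns that value into a weight. The function names, the nested-list layout, and the regularizer value `lam=1e-4` are illustrative assumptions, not details taken from the paper's EIR encoder.

```python
import math


def sigmoid(x):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def simam_weights(channel, lam=1e-4):
    """Return one attention weight per position of a single H x W channel.

    channel: nested list of floats (rows of the feature map).
    lam: small regularizer in the energy function (assumed value).
    """
    values = [v for row in channel for v in row]
    if len(values) < 2:
        raise ValueError("channel needs at least two positions")
    n = len(values) - 1                      # number of "other" neurons
    mu = sum(values) / len(values)           # channel mean
    sq_dev = [[(v - mu) ** 2 for v in row] for row in channel]
    var = sum(d for row in sq_dev for d in row) / n
    # Inverse energy: positions that stand out from the mean score higher.
    return [[sigmoid(d / (4.0 * (var + lam)) + 0.5) for d in row]
            for row in sq_dev]


def simam_channel(channel, lam=1e-4):
    """Reweight every position of the channel by its SimAM-style weight."""
    weights = simam_weights(channel, lam)
    return [[v * w for v, w in zip(row, w_row)]
            for row, w_row in zip(channel, weights)]
```

In this sketch a position that differs strongly from the rest of its channel, such as a warm target against a uniform background, receives a larger weight than the background positions, and no learnable parameters are involved.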
To obtain spatial feature information with precise location information, the global average pooling (AvgPool) operation is applied separately along the vertical and horizontal directions, so that features are aggregated along both spatial directions. The channel relationships are then encoded using this precise location information. Finally, this paper proposes a gated fusion network inspired by the Multi-gate Mixture of Experts (MMoE) in multi-task learning. The gated fusion network learns the unimodal features and their contributions to detection. Following MMoE, the extraction of infrared image features and the extraction of visible image features are regarded as two tasks; that is, EIR and EVS are expert modules, where EIR is used only to extract features of IR images and EVS is used only to extract features of VS images. The results of the two expert modules are then integrated, so that each expert specializes in its own modality. During fusion, each task needs its own bias, so the outputs of the expert modules EIR and EVS are mapped to probabilities. The proposed gated fusion network adaptively adjusts the weights assigned to the two feature streams to achieve cross-modal feature fusion. This paper uses two datasets to evaluate the algorithm: the public KAIST pedestrian dataset and the self-built GIR dataset. Each image in the KAIST pedestrian dataset has both a VS and an IR version. The dataset captures routine traffic scenes, including campus, street, and countryside, during daytime and nighttime. The GIR dataset is a general-object dataset created in this paper. Its images come from the RGBT210 dataset established by Chenglong Li's team, and each image has both a VS and an IR version. The two versions of each image share one set of labels. On the KAIST pedestrian dataset, we validate our algorithm on two YOLOv5 models.
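The two mechanisms in this paragraph, direction-wise average pooling and gate-weighted fusion of the two expert outputs, can be sketched as follows. This is a simplified illustration under stated assumptions: the gate here is a single linear layer over the concatenated features followed by a softmax, and the names `directional_avg_pool`, `gated_fusion`, `gate_weights`, and `gate_bias` are hypothetical. The paper's actual gate design and feature shapes are not given in the abstract.

```python
import math


def directional_avg_pool(channel):
    """Average an H x W channel along each spatial direction.

    Returns (row_means, col_means): one value per vertical position
    and one value per horizontal position.
    """
    h, w = len(channel), len(channel[0])
    row_means = [sum(row) / w for row in channel]
    col_means = [sum(channel[i][j] for i in range(h)) / h for j in range(w)]
    return row_means, col_means


def softmax(logits):
    """Numerically stable softmax mapping logits to probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(f_ir, f_vs, gate_weights, gate_bias):
    """Fuse two expert feature vectors with a softmax gate (assumed design).

    f_ir, f_vs: equal-length feature vectors from the IR and VS experts.
    gate_weights: two rows, each of length len(f_ir) + len(f_vs).
    gate_bias: two bias terms, one per expert.
    Returns (fused_features, (g_ir, g_vs)).
    """
    gate_input = list(f_ir) + list(f_vs)     # concatenate both modalities
    logits = [sum(w * x for w, x in zip(row, gate_input)) + b
              for row, b in zip(gate_weights, gate_bias)]
    g_ir, g_vs = softmax(logits)             # weights sum to 1
    fused = [g_ir * a + g_vs * b for a, b in zip(f_ir, f_vs)]
    return fused, (g_ir, g_vs)
```

With zero gate weights and biases the two modalities contribute equally; a gate that strongly favors one expert reduces the fused output to that expert's features, which is consistent with the abstract's claim that a single modality can also be processed on its own.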
Compared with YOLOv5-n, the detection accuracy of the proposed algorithm is improved by 15.1% and 2.8% for VS and IR images, respectively; compared with YOLOv5-s, the detection accuracy is improved by 14.7% and 3%, respectively. At the same time, the detection speed reaches 117.6 FPS and 102 FPS on the two models. On the self-built GIR dataset, compared with YOLOv5-n, the detection accuracy of the proposed algorithm is improved by 14.3% and 1% for IR and VS images, respectively; compared with YOLOv5-s, the detection accuracy is improved by 13.7% and 0.6%, respectively. At the same time, the detection speed reaches 101 FPS and 85.5 FPS on the two models. Compared with current classical object detection algorithms, the proposed algorithm shows clear advantages. In addition, the proposed algorithm can also perform object detection on VS or IR images supplied separately, and its detection performance is significantly improved compared with the baseline algorithm. For visualization, the algorithm can flexibly display the detection results on either the VS image or the IR image.

Ying SUN, Zhiqiang HOU, Chen YANG, Sugang MA, Jiulun FAN. Object Detection Algorithm Based on Dual-modal Fusion Network[J]. Acta Photonica Sinica, 2023, 52(1): 0110002.