1 Institute of Optics and Electronics, Chinese Academy of Sciences, Chengdu, Sichuan 610209, China

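2 School of Electronic, Electrical and Communication Engineering, University of Chinese Academy of Sciences, Beijing 100049, China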

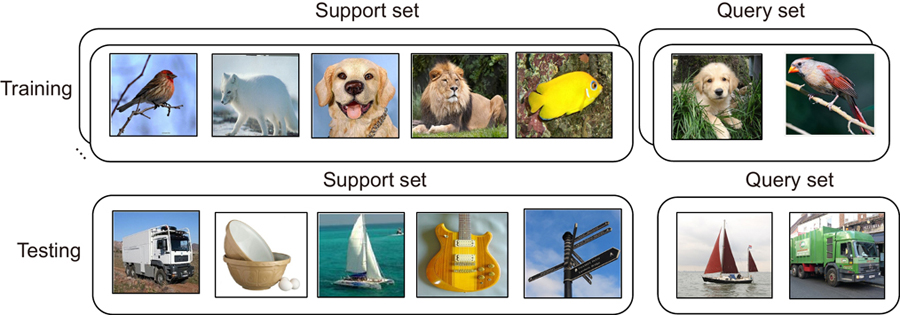

To improve the performance of few-shot classification, we present a general and flexible method named the Multi-Scale Attention and Domain Adaptation Network (MADA). First, to tackle the problem of limited samples, a masked autoencoder is used for image augmentation; it can be inserted as a plug-and-play module into a few-shot classification method. Second, the multi-scale attention module adapts the feature vectors extracted by the embedding function to the current classification task. The multi-scale attention mechanism strengthens discriminative image regions by attending to related samples in both the base classes and the novel classes, which makes the prototypes more accurate. In addition, the embedding function attends to task-specific features. Third, the domain adaptation module addresses the domain shift caused by the difference between the data distributions of the two domains. It consists of a metric module and a margin loss function. The margin loss pushes different prototypes away from each other in the feature space, and a sufficient margin between prototypes improves the generalization performance of the method. Experimental results show that the proposed method achieves a classification accuracy of 67.45% for 5-way 1-shot and 82.77% for 5-way 5-shot on the miniImageNet dataset, and 70.57% for 5-way 1-shot and 85.10% for 5-way 5-shot on the tieredImageNet dataset, which is better than most previous methods. Visualization of the features after t-SNE dimensionality reduction indicates that the domain shift is alleviated and the prototypes are more accurate. The feature representations enhanced by the multi-scale attention module are more discriminative for the target classification task, and the domain adaptation module improves the generalization ability of the model.
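The interplay between prototypes and a margin loss can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes that a prototype is the mean of a class's support embeddings, that the metric is Euclidean distance, and that the margin loss is a hinge penalty on pairs of prototypes closer than a chosen margin. The abstract does not specify the exact metric module or loss form, so the function names (`prototypes`, `prototype_margin_loss`, `classify`) and the `margin` parameter are hypothetical.

```python
import math


def prototypes(support):
    """Mean embedding per class (assumed prototype definition).

    `support` maps a class label to a list of equal-length feature vectors.
    """
    protos = {}
    for label, vecs in support.items():
        dim = len(vecs[0])
        protos[label] = [sum(v[i] for v in vecs) / len(vecs) for i in range(dim)]
    return protos


def euclidean(a, b):
    """Euclidean distance between two feature vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def prototype_margin_loss(protos, margin=1.0):
    """Average hinge penalty over all prototype pairs closer than `margin`.

    Assumed form: max(0, margin - distance). The loss is zero once every
    pair of prototypes is at least `margin` apart in the feature space.
    """
    labels = sorted(protos)
    total, pairs = 0.0, 0
    for i in range(len(labels)):
        for j in range(i + 1, len(labels)):
            d = euclidean(protos[labels[i]], protos[labels[j]])
            total += max(0.0, margin - d)
            pairs += 1
    return total / pairs if pairs else 0.0


def classify(query, protos):
    """Assign the query to the class of its nearest prototype."""
    return min(protos, key=lambda label: euclidean(query, protos[label]))


# Toy 2-way 2-shot episode with 2-D features (illustrative values only).
support = {"a": [[0.0, 0.0], [0.0, 2.0]], "b": [[3.0, 1.0], [5.0, 1.0]]}
protos = prototypes(support)
```

In this toy episode the two prototypes are 4 units apart, so the penalty vanishes for any margin up to 4 and becomes positive only when a larger separation is demanded. Minimizing such a term during training would encourage the embedding to keep class prototypes apart.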

Keywords: few-shot image classification; attention mechanism; domain adaptation; similarity metric