Enhanced imaging through turbid water based on quadrature lock-in discrimination and retinex aided by adaptive gamma function for illumination correction

1. Introduction

Underwater image restoration plays a crucial role in object detection, object recognition, and video tracking[1]. The visibility of underwater images is degraded by scattering and absorption of the incident light field, and imaging quality deteriorates as the distance between the target and the sensor increases and as the turbidity rises.

In recent years, many de-scattering techniques have been proposed to cope with image degradation. These methods are typically divided into two categories: image restoration methods based on a physical model and image recovery methods based on image enhancement[2,3]. Restoration methods use the atmospheric scattering model or prior knowledge to reverse the degradation caused by light scattering; examples include the dark channel prior (DCP) method[4], polarization imaging[5], and intensity modulation of an active light source[6]. Enhancement methods include histogram equalization (HE)[7], contrast limited adaptive histogram equalization (CLAHE)[8], and retinex algorithms[9]. These algorithms can improve image contrast, but they are ineffective at restoring the visibility range.

A simpler and more competitive approach is to use an intensity-modulated continuous-wave light source[6,10,11]. It builds on the hypothesis that the captured ballistic photons, unlike the multiply scattered photons, retain the modulation frequency and phase of the incident modulated light source. The received signal must therefore be demodulated at the modulation frequency. Ballistic filtering typically requires modulation at high frequencies[12]. However, low modulation frequencies can be chosen, at the expense of capturing fewer ballistic or snake-like photons, to meet the requirements of available imaging systems[13]. Sudarsanam et al. used low frequencies to demonstrate imaging through spherical polydisperse scatterers, performing the demodulation with quadrature lock-in discrimination (QLD)[13]. An instantaneous, all-optical, single-shot technique demonstrated demodulation at higher frequencies, up to the radio-frequency range[14]. However, that technique has several shortcomings, including a smaller field of view, higher cost of optical elements, and greater system complexity.

Imaging through real fog over hectometric distances has been realized to validate the performance of the QLD technique[15]. In our previous work, we developed a tracking method for active light beacons to realize underwater docking in highly turbid water. The QLD technique was employed to lock onto the blinking frequency of the light beacons located at the docking station and successfully suppressed unwanted light and stray noise at other frequencies[16]. Recently, imaging through flame and smoke was demonstrated using a blue light-emitting diode (LED) and the QLD algorithm[17]. Although the QLD algorithm has been well studied for imaging through scattering media such as polydisperse scatterers, real fog, smoke, and flame, it has not yet been thoroughly investigated for underwater image restoration in which an LED illuminates the target object.

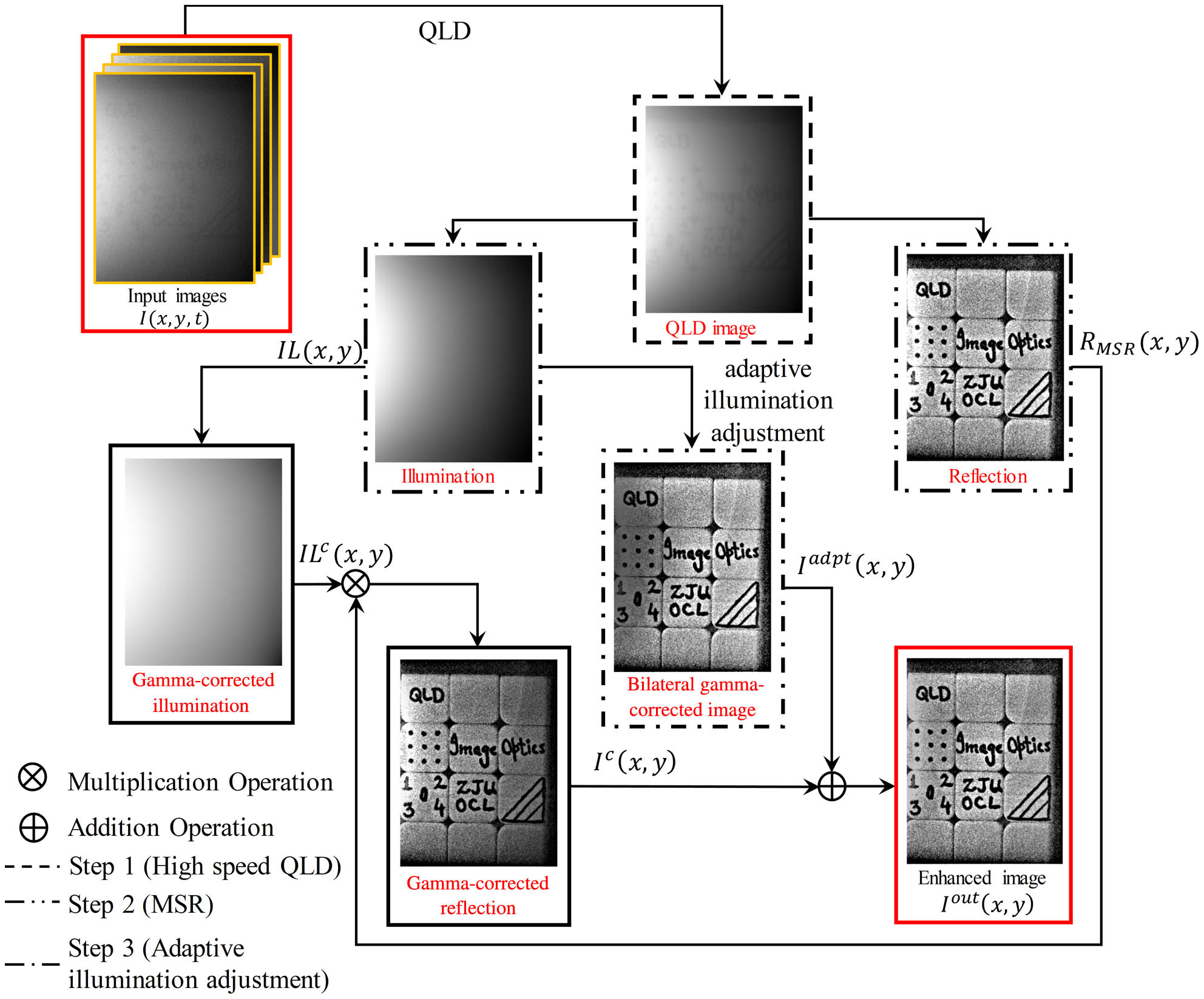

In this Letter, we present a novel underwater image recovery method based on a cascaded approach. It exploits the strengths of an image restoration technique, the traditional QLD technique, to improve visibility and suppress noise, and we propose a high-speed QLD method to help implement the cascaded approach in real-world systems. A well-known image enhancement technique, multiscale retinex (MSR), is then employed to recover the contrast of the output image. In the MSR step, a multiscale guided filter replaces the multiscale Gaussian filter to avoid halo artifacts at boundaries, information loss, and blurring of the output image. In addition, an adaptive illumination correction algorithm is optimized and incorporated to overcome non-uniform illumination in the output image. Finally, a weighted fusion method is developed to obtain the enhanced output image.

2. Proposed Method

The proposed approach consists of three main steps described in the following sections.

2.1. High-speed quadrature lock-in discrimination algorithm

The quadrature lock-in discrimination technique works on the principle of a lock-in amplifier. Consider captured light modulated at a frequency $f_m$ (Hz) with modulation index $m$; the intensity at the receiver can be written as $I(t) = I_0[1 + m\sin(2\pi f_m t + \phi)]$, where $I_0$ is the average received intensity and $\phi$ is the relative phase. Multiplying the signal by a sine wave at the known modulating frequency and averaging over a few cycles yields the in-phase component $X = \langle I(t)\sin(2\pi f_m t)\rangle = (mI_0/2)\cos\phi$. Likewise, multiplying the signal by a cosine wave at the same frequency and averaging over a few cycles yields the quadrature component $Y = \langle I(t)\cos(2\pi f_m t)\rangle = (mI_0/2)\sin\phi$. The two components are squared and added to retrieve the amplitude $R = \sqrt{X^2 + Y^2} = mI_0/2$, and the relative phase difference between the source and the detector is obtained as $\phi = \arctan(Y/X)$. In our experiments, a scientific complementary metal-oxide-semiconductor (sCMOS) camera captures the signal as a time series of 2D images of the scene. The images are then processed offline in MATLAB with the QLD algorithm, which reconstructs an output image by computing the amplitude of the received signal at each pixel.
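To make the lock-in step concrete, the following is a minimal NumPy sketch (not the authors' MATLAB code) of per-pixel demodulation for an arbitrary ratio of frame rate to modulation frequency. Each pixel's time series is multiplied by sine and cosine references at the known modulation frequency and averaged, giving the amplitude and phase. The function name `qld_demodulate`, the synthetic scene, and the idealization of multiply scattered light as a constant, unmodulated background are assumptions made for illustration.

```python
import numpy as np

def qld_demodulate(frames, f_mod, f_s):
    """Lock-in (QLD) demodulation of a frame stack of shape (T, H, W).

    Returns the per-pixel amplitude R = sqrt(X**2 + Y**2) and phase atan2(Y, X).
    """
    frames = np.asarray(frames, dtype=np.float64)
    n_frames = frames.shape[0]
    t = np.arange(n_frames) / f_s                  # capture time of each frame (s)
    ref_sin = np.sin(2 * np.pi * f_mod * t)        # in-phase reference
    ref_cos = np.cos(2 * np.pi * f_mod * t)        # quadrature reference
    # Multiply each pixel's time series by the references, then time-average.
    X = np.tensordot(ref_sin, frames, axes=(0, 0)) / n_frames
    Y = np.tensordot(ref_cos, frames, axes=(0, 0)) / n_frames
    return np.hypot(X, Y), np.arctan2(Y, X)

# Hypothetical synthetic scene: modulated "ballistic" light on top of a strong
# unmodulated background standing in for multiply scattered light.
rng = np.random.default_rng(0)
f_mod, f_s, duration = 37.0, 4 * 37.0, 2.0         # 37 Hz modulation, 4 frames/period, 2 s
n_frames = int(round(f_s * duration))              # 296 frames
I0 = rng.uniform(10, 50, size=(8, 8))              # average modulated intensity map
m, phi = 0.8, 0.3                                  # modulation index, relative phase
background = 500.0                                 # unmodulated scattered light
t = np.arange(n_frames)[:, None, None] / f_s
frames = background + I0 * (1 + m * np.sin(2 * np.pi * f_mod * t + phi))

R, phase = qld_demodulate(frames, f_mod, f_s)
print(np.allclose(R, m * I0 / 2), np.allclose(phase, phi))   # True True
```

Because the record spans a whole number of modulation periods, the constant background averages out and the recovered amplitude equals $mI_0/2$ at every pixel regardless of the background level.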

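In the traditional QLD method, the frame rate (or sampling frequency $f_s$) of the camera is $N$ times the modulation frequency $f_m$, i.e., $f_s = N f_m$. According to the Nyquist sampling criterion, $N$ must be greater than or equal to 2, i.e., $N \ge 2$. When $N = 4$ (i.e., $f_s = 4 f_m$), the sampled sine and cosine references reduce to the periodic sequences $\{0, 1, 0, -1, \dots\}$ and $\{1, 0, -1, 0, \dots\}$, respectively, and the in-phase ($X$) and quadrature ($Y$) components can be written in the form of Eqs. (1) and (2)[18]:

$$X = \frac{1}{4K}\sum_{k=0}^{K-1}\left[I(4k+1) - I(4k+3)\right], \tag{1}$$

$$Y = \frac{1}{4K}\sum_{k=0}^{K-1}\left[I(4k) - I(4k+2)\right], \tag{2}$$

where $I(n)$ is the intensity of a pixel in the $n$th frame and $K$ is the number of captured modulation periods.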
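With $f_s = 4 f_m$ the sampled references take only the values 0 and ±1, so the multiplications collapse into differences of frames half a modulation period apart. The sketch below assumes a $1/(4K)$ time-average normalization over $K$ complete periods and uses the hypothetical name `qld_fast4`; it checks the shortcut against direct multiplication by the sampled sine and cosine.

```python
import numpy as np

def qld_fast4(frames):
    """High-speed QLD amplitude for f_s = 4 * f_m (frame stack of shape (T, H, W)).

    The sampled references are sin = 0, 1, 0, -1 and cos = 1, 0, -1, 0, so the
    products reduce to frame differences; the 1/(4K) normalization is assumed.
    """
    frames = np.asarray(frames, dtype=np.float64)
    K = frames.shape[0] // 4                              # complete modulation periods
    f = frames[: 4 * K].reshape(K, 4, *frames.shape[1:])  # f[k, j] = frame 4k + j
    X = (f[:, 1] - f[:, 3]).sum(axis=0) / (4 * K)         # in-phase component
    Y = (f[:, 0] - f[:, 2]).sum(axis=0) / (4 * K)         # quadrature component
    return np.hypot(X, Y)

# Compare with direct multiplication by the sampled references (arbitrary test data).
rng = np.random.default_rng(1)
frames = rng.uniform(0, 1000, size=(296, 6, 6))           # 74 periods of hypothetical data
n = np.arange(frames.shape[0])
X_ref = np.tensordot(np.sin(np.pi * n / 2), frames, axes=(0, 0)) / n.size
Y_ref = np.tensordot(np.cos(np.pi * n / 2), frames, axes=(0, 0)) / n.size
print(np.allclose(qld_fast4(frames), np.hypot(X_ref, Y_ref)))   # True
```

The shortcut needs only additions and subtractions per pixel, which is one plausible reason the $N = 4$ case lends itself to a high-speed implementation.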

2.2. Multiscale retinex method

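Because underwater images suffer from low contrast and uneven illumination, the retinex method is used to overcome these problems[19]. Retinex theory states that the perceived image can be decomposed into an illumination image and a reflection image, as shown in Eq. (3),

$$S(x, y) = R(x, y)\,L(x, y), \tag{3}$$

where $S$ is the perceived image, $R$ is the reflection image, and $L$ is the illumination image.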

Gaussian filtering of the resulting illumination component is performed at the largest scale to remove noise. We use the simplest color balance algorithm[22] as post-processing; it clips a given proportion of pixels at both ends of the image histogram and stretches the remaining values to the widest possible range, [0, 255].
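A compact sketch of the retinex stage follows: illumination is estimated at several scales with a guided filter (the He et al. formulation, implemented here with box filters computed from integral images), the log-domain reflectance is averaged over scales, and the simplest color balance stretches the result to [0, 255]. The radii, `eps`, equal scale weights, small log offset, and 1% clipping fraction are illustrative assumptions, and the additional Gaussian filtering at the largest scale is omitted for brevity.

```python
import numpy as np

def box_filter(img, r):
    """Mean over a (2r+1) x (2r+1) window via an integral image (edge-replicated borders)."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = (integral[k:, k:] - integral[:-k, k:]
                  - integral[k:, :-k] + integral[:-k, :-k])
    return window_sum / (k * k)

def guided_filter(guide, src, r, eps):
    """Guided filter (He et al.): edge-preserving smoothing of src steered by guide."""
    mean_i = box_filter(guide, r)
    mean_p = box_filter(src, r)
    cov_ip = box_filter(guide * src, r) - mean_i * mean_p
    var_i = box_filter(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box_filter(a, r) * guide + box_filter(b, r)

def msr_guided(img, radii=(4, 16, 48), eps=1e-2):
    """Multiscale retinex with guided-filter illumination estimates (equal weights)."""
    s = np.asarray(img, dtype=np.float64) / 255.0
    delta = 1.0 / 255.0                          # offset that keeps log() finite
    reflect = np.zeros_like(s)
    for r in radii:
        illum = guided_filter(s, s, r, eps)      # illumination estimate at this scale
        reflect += (np.log(s + delta) - np.log(illum + delta)) / len(radii)
    return reflect

def simplest_color_balance(channel, clip=0.01):
    """Clip `clip` of the pixels at each histogram end, then stretch to [0, 255]."""
    lo, hi = np.quantile(channel, [clip, 1.0 - clip])
    return (np.clip(channel, lo, hi) - lo) / max(hi - lo, 1e-12) * 255.0

# Hypothetical unevenly lit test image: random texture under a left-to-right ramp.
rng = np.random.default_rng(0)
xx = np.mgrid[0:64, 0:64][1]
scene = 40 + 150 * (xx / 63.0) * rng.uniform(0.5, 1.0, size=(64, 64))
enhanced = simplest_color_balance(msr_guided(scene))
print(enhanced.shape, enhanced.min(), enhanced.max())
```

Because the guided filter uses the image itself as the guide, the illumination estimate follows strong edges instead of smearing across them, which is the property credited with avoiding halo artifacts.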

2.3. Improved bilateral gamma function for adaptive intensity correction

The image obtained from the previous step is still affected by uneven illumination, especially at high turbidity levels. To reduce this effect, we employ an improved bilateral gamma function in the adaptive intensity correction algorithm[23] to adaptively update the illumination component obtained in the previous step. The equations of the improved bilateral gamma function are as follows:

We propose an adaptive illumination correction algorithm for underwater images based on the bilateral gamma function that uses both the reflection and illumination images. The gamma-corrected illumination image is calculated and added back to the reflection image to restore the naturalness of the image[24]. The corrected reflection image is expressed as

The final output image is the weighted fusion of the two different illumination corrected images,

We chose in our experiments. The flowchart of the proposed method is shown in Fig. 1.
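The exact form of the improved bilateral gamma function is not restated in this text, so the sketch below swaps in a simpler, commonly used adaptive gamma, $\gamma = \alpha^{(\mu - L)/\mu}$, where $L$ is the illumination map and $\mu$ its mean. It reproduces the behavior attributed to this step (brightening under-lit pixels and darkening over-lit ones), followed by a retinex recombination and a two-image weighted fusion. The value $\alpha = 0.5$, the function names, the multiplicative recombination (addition in the log domain), and the fusion weight are all hypothetical.

```python
import numpy as np

def adaptive_gamma(illum, alpha=0.5):
    """Pixel-wise gamma driven by the illumination map (values in (0, 1]).

    gamma = alpha ** ((mu - L) / mu): gamma < 1 (brighten) where L is below the
    mean illumination mu, gamma > 1 (darken) where L is above it.
    """
    illum = np.asarray(illum, dtype=np.float64)
    mu = illum.mean()
    gamma = alpha ** ((mu - illum) / mu)
    return illum ** gamma

def recombine(reflect, illum_corrected):
    """Assumed retinex recombination S = R * L (addition in the log domain)."""
    return np.asarray(reflect, float) * np.asarray(illum_corrected, float)

def weighted_fusion(img_a, img_b, w):
    """Weighted fusion of two illumination-corrected images; w is a hypothetical weight."""
    return w * np.asarray(img_a, float) + (1.0 - w) * np.asarray(img_b, float)

L = np.linspace(0.1, 0.9, 9)          # toy illumination profile with mean 0.5
L_corr = adaptive_gamma(L)
print(np.round(L_corr, 3))
```

The exponent is tied to the mean illumination, so the correction adapts to each image rather than applying one global gamma.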

3. Experiments and Results

The experimental setup is shown in Fig. 2. A 625-nm red LED (M625L4) is used as the light source, and the current through the LED is modulated using the internal sinusoidal modulation function. Two objects, a Rubik’s cube and a rubber toy, are used as underwater targets, with the LED modulation frequency set to 37 Hz and 38 Hz, respectively. The modulated LED illuminates the target, and the image formed by light reflected from the target is captured with a 16-bit Dhyana 400BSI sCMOS camera. The volume of the transparent water tank is . We added up to 21 mL of milk to the water tank to simulate a high-turbidity environment. The distance between the target object and the camera is 90 cm. The camera frame rate is set to four times the LED modulation frequency, and images are captured for 2 s; we did not observe any improvement in the final results with longer time series.
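The acquisition settings can be checked with simple arithmetic: at four frames per modulation period, the 37 Hz and 38 Hz modulation frequencies require 148 and 152 frames/s, and a 2 s record then holds 296 and 304 frames, i.e., 74 and 76 complete periods. The helper below (a hypothetical name) reproduces these numbers.

```python
def acquisition_plan(f_mod_hz, duration_s=2, frames_per_period=4):
    """Frame rate, frame count, and complete modulation periods for one capture."""
    frame_rate = frames_per_period * f_mod_hz          # f_s = N * f_m
    n_frames = int(round(frame_rate * duration_s))     # frames in the record
    return frame_rate, n_frames, n_frames // frames_per_period

for target, f_mod in [("Rubik's cube", 37), ("rubber toy", 38)]:
    print(target, acquisition_plan(f_mod))
# Rubik's cube (148, 296, 74)
# rubber toy (152, 304, 76)
```

A whole number of periods in each record is what lets the unmodulated background average out in the lock-in step.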

To demonstrate that our approach can restore images, we used a rubber toy as a target; its image contains multiple gray levels and is more prone to degradation caused by noise and turbidity. The performance of our approach is compared with that of other traditional image restoration and image enhancement methods in Figs. 3 and 5. Note that 100 images are time-averaged to minimize the effect of noise before the traditional methods are applied. Figure 3 shows that the grayscale range of the CLAHE output is more widely distributed and its overall contrast is enhanced. Despite this improvement in visibility, CLAHE cannot solve the problems of uneven illumination and poor performance on high-turbidity images. The guided filter used in the MSR stage of our method contributes to the high contrast and eliminates halo artifacts along the boundaries. The adaptive gamma correction adjusts the illumination adaptively, increasing the intensity in poorly illuminated areas and decreasing it in brightly illuminated ones. As a result, the shadows caused by uneven illumination are largely eliminated, and the results show that our method is more effective in a high-turbidity environment. We select a zoomed-in region of the high-turbidity image and plot, in Fig. 4, the intensity profiles along the colored dashed lines in the zoomed-in view of Fig. 3. For a fair comparison, the minimum grayscale value of each curve is subtracted from the curve so that its lowest point lies on the horizontal axis. The intensity profile of a clear image (captured in clear water) is also plotted. The curve obtained with our method follows a trend similar to that of the clear image. Furthermore, the proposed approach yields the highest contrast and signal-to-noise ratio (SNR) among the compared methods.
The MSR method tends to lose details, bleach image information, and produce lower contrast than our method, while DCP and DehazeNet[25] suffer from low contrast and uneven illumination.

Fig. 3. Comparison of different methods on images captured in low turbidity (first row) and high turbidity (second row), with zoomed-in views of the high-turbidity results (third row), using the rubber toy as the target object.

Fig. 5. Comparison of different methods on images captured in low turbidity (first row) and high turbidity (second row), with zoomed-in views of the high-turbidity results (third row), using the Rubik’s cube as the target object.

The generality of the proposed method is verified by imaging a Rubik’s cube with handwritten words on it. The results of different methods at different turbidities and their zoomed-in views are shown in Fig. 5. To quantify and compare the image quality of the various methods in the absence of reference images, we compute several image quality metrics on the zoomed-in views in Fig. 5: the standard deviation (STD), the peak signal-to-noise ratio (PSNR), the average gradient (AG)[26], the entropy[27], the measure of enhancement (EME)[28], the blind/referenceless image spatial quality evaluator (BRISQUE)[29], and the natural image quality evaluator (NIQE)[30]. The values of these metrics are reported in Table 1, with the best values marked in bold. Our method achieves the best values for most of the metrics, which further supports its effectiveness over the other traditional methods.
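Several of the metrics in Table 1 have simple closed forms. The NumPy sketch below implements STD, AG (mean of $\sqrt{(G_x^2 + G_y^2)/2}$ over forward differences), Shannon entropy of the 8-bit histogram, EME over non-overlapping blocks, and PSNR against a reference image. EME definitions in the literature vary in block size, log base, and the constant guarding against division by zero; the 8 × 8 block grid, `20 * log10`, and `c` used here are assumptions. BRISQUE and NIQE rely on trained natural-scene-statistics models and are not sketched.

```python
import numpy as np

def std_metric(img):
    """Standard deviation of gray levels (a global contrast proxy)."""
    return float(np.std(np.asarray(img, dtype=np.float64)))

def average_gradient(img):
    """Mean of sqrt((Gx^2 + Gy^2) / 2) using forward differences."""
    img = np.asarray(img, dtype=np.float64)
    gx = np.diff(img, axis=1)[:-1, :]   # horizontal differences, cropped to (H-1, W-1)
    gy = np.diff(img, axis=0)[:, :-1]   # vertical differences, cropped to (H-1, W-1)
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))

def entropy(img):
    """Shannon entropy (bits) of the 256-bin gray-level histogram of an 8-bit image."""
    hist = np.bincount(np.asarray(img, dtype=np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def eme(img, blocks=(8, 8), c=1e-4):
    """Measure of enhancement: mean of 20*log10(max/min) over non-overlapping blocks."""
    img = np.asarray(img, dtype=np.float64)
    bh, bw = img.shape[0] // blocks[0], img.shape[1] // blocks[1]
    vals = []
    for i in range(blocks[0]):
        for j in range(blocks[1]):
            blk = img[i * bh:(i + 1) * bh, j * bw:(j + 1) * bw]
            vals.append(20.0 * np.log10((blk.max() + c) / (blk.min() + c)))
    return float(np.mean(vals))

def psnr(img, ref, peak=255.0):
    """Peak signal-to-noise ratio (dB) of img against a reference image."""
    mse = np.mean((np.asarray(img, np.float64) - np.asarray(ref, np.float64)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))

ramp = np.arange(256).reshape(16, 16)   # every gray level appears exactly once
print(entropy(ramp), average_gradient(ramp), eme(ramp), psnr(ramp, ramp + 1))
```

For BRISQUE and NIQE, lower scores indicate better quality, whereas higher values are better for the other metrics listed.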

Table 1. Quantitative Comparison of Zoomed-in View of Fig. 5


The performance of the proposed method is also evaluated for a modulated light source with different modulation indexes. The zoomed-in high-turbidity images of the Rubik’s cube from Fig. 5 are used for this evaluation, with PSNR as the evaluation metric. Two modulation indexes, a smaller and a larger one, are compared, and the PSNR values of the recovered images are shown above the corresponding images in Fig. 6. The smaller modulation index leads to poorer recovery of high-turbidity images: its PSNR is 1.42 dB lower than that obtained with the larger index. We therefore conclude that, in our experiments, a higher modulation index yields a better quality of the recovered image.

Fig. 6. Comparison results for different modulation indexes in a high-turbidity environment.

4. Conclusion

We present a three-stage processing method for recovering underwater images. First, the image sequence of a scene illuminated by a modulated light source is preprocessed with the high-speed QLD technique at the known modulation frequency. The QLD technique reduces the noise caused by turbidity and increases the visibility of the scene by isolating the small number of ballistic photons. Next, retinex enhancement with a guided filter separates the illumination component, restoring image contrast and reducing uneven illumination at the cost of increased processing noise. Applying retinex to the QLD-processed image rather than to the original underwater image makes better use of the retinex method for contrast improvement. Finally, adaptive illumination correction with the bilateral gamma function improves visual quality by reducing over-exposure, which helps preserve image details. The results show that our method offers distinct benefits in contrast enhancement, detail recovery, uneven illumination correction, and noise reduction in both low- and high-turbidity environments.


Riffat Tehseen, Amjad Ali, Mithilesh Mane, Wenmin Ge, Yanlong Li, Zejun Zhang, Jing Xu. Enhanced imaging through turbid water based on quadrature lock-in discrimination and retinex aided by adaptive gamma function for illumination correction[J]. Chinese Optics Letters, 2023, 21(10): 101102.